I think this is a salient observation as to why people trust LLMs and even believe that they have a ‘spark of consciousness’ or express deep semantic comprehension, even though they often fail to vet prompts for false premises and respond with obvious errors, some of them quite basic. LLMs are naturally very good at grammar because the rules of structured grammar are reasonably consistent (even in a language like English, which has a lot of variation in structure), and they have become quite good at assembling strings of tokens into comprehensible sentences. With additional ‘compute’ and reinforcement using ‘reward models’, they have also become quite adept at narrative structure within the scope of a prompt (i.e. a few paragraphs). This is expressed as “well crafted and authoritative sounding prose”, which gives a kind of metasemantic ‘truthiness’ even if the actual statement is nonsense.

Narratives appeal to people because they assemble concepts into seemingly logical, coherent mental frameworks, even though many if not most narratives actually contain logical fallacies or counterfactual claims, not just incidentally but as a direct consequence of needing to be linked together into an arc with a conclusion. Such narratives are often repeated and even become common ‘memes’ because of this appeal, even long after they are debunked, e.g. Paul Harvey and “The Rest of the Story”, or basically any biopic you’ve ever seen about some significant historical figure, in which characters are often composited together or events are reorganized or fictionalized for the sake of narrative flow. People put a lot of trust in a good narrative because it seems to make sense out of a jumble of supposed facts or claims, and they are often reluctant to actually check an appealing story because they have already decided that it is consistent with their worldview.

There are many forms of ‘AI’ built on using artificial neural networks (ANNs) and heuristic methods to develop emergent capabilities based upon complex or difficult to identify patterns in large datasets, and in fact this was really the genesis of using ANNs in heuristic modeling. But large language models are specifically built to replicate natural language processing in human-like ways, with the explicit goal of making them respond in ways that are indistinguishable from a real person. Implicit in that goal, however, is also making them respond in ways that are appealing, accessible, and agreeable instead of offensive, abstruse, and objectionable. As a consequence, the most advanced LLM implementations respond in a conversational tone with an authoritative ‘voice’, like a friendly teacher or ‘influencer’, which most people view as inherently trustworthy and are thus disinclined to fact-check (or in many cases are just too lazy to do so).

The business use case for these models is to replace human beings in direct interface applications that are repetitive and don’t require a great deal of highly accurate or specialized knowledge, e.g. a call center rep, a narrator that translates basic information into spoken format, and the like. Unfortunately, people are already trying to use them as detailed knowledge agents without understanding that the ‘knowledge’ these models have is not based upon any real world experience but just upon the constructions that arise from the frequency of word use. That makes these models adept at doing things like taking written tests or summarizing the basic text of an essay, but they have no real comprehension of the world based upon interaction or introspection, no inherent ability to distinguish basic facts from fiction, and no inclination to respond to any prompt by saying, “I don’t know anything about that,” because their ‘worldview’ is constructed of every possible thing that exists in their world, i.e. the textual dataset that they have been trained upon. If a reasonably well-educated person saw a picture of a six-legged mammal, they would know immediately that it is a fictional animal, not only because such a creature is evolutionarily impossible but also because it is outside of their real world experience. An LLM would not draw such a conclusion (unless texts explicitly discussing why mammals have four limbs were part of its training set) because it lacks that deep understanding and experience of how the number of limbs relates to the concept of a mammal.

GPT and other LLMs are quite an advancement in the state of natural language processing over more traditional symbolic-based methods, but they are not knowledge agents in the sense of distinguishing correct statements from falsehoods. Their ability to ‘know’ anything comes from the semantics that are intrinsic in a rich natural language set that reflects the complexity of mental models based upon real world experience, not from having any kind of mental models of their own or introspective cognitive processes which construct such models from experience. That they appear to be authoritative comes from their ability to produce digestible narratives with impeccable grammar and erudite but accessible vocabulary, so they seem like your really smart know-everything friend even when they are spouting utter nonsense.

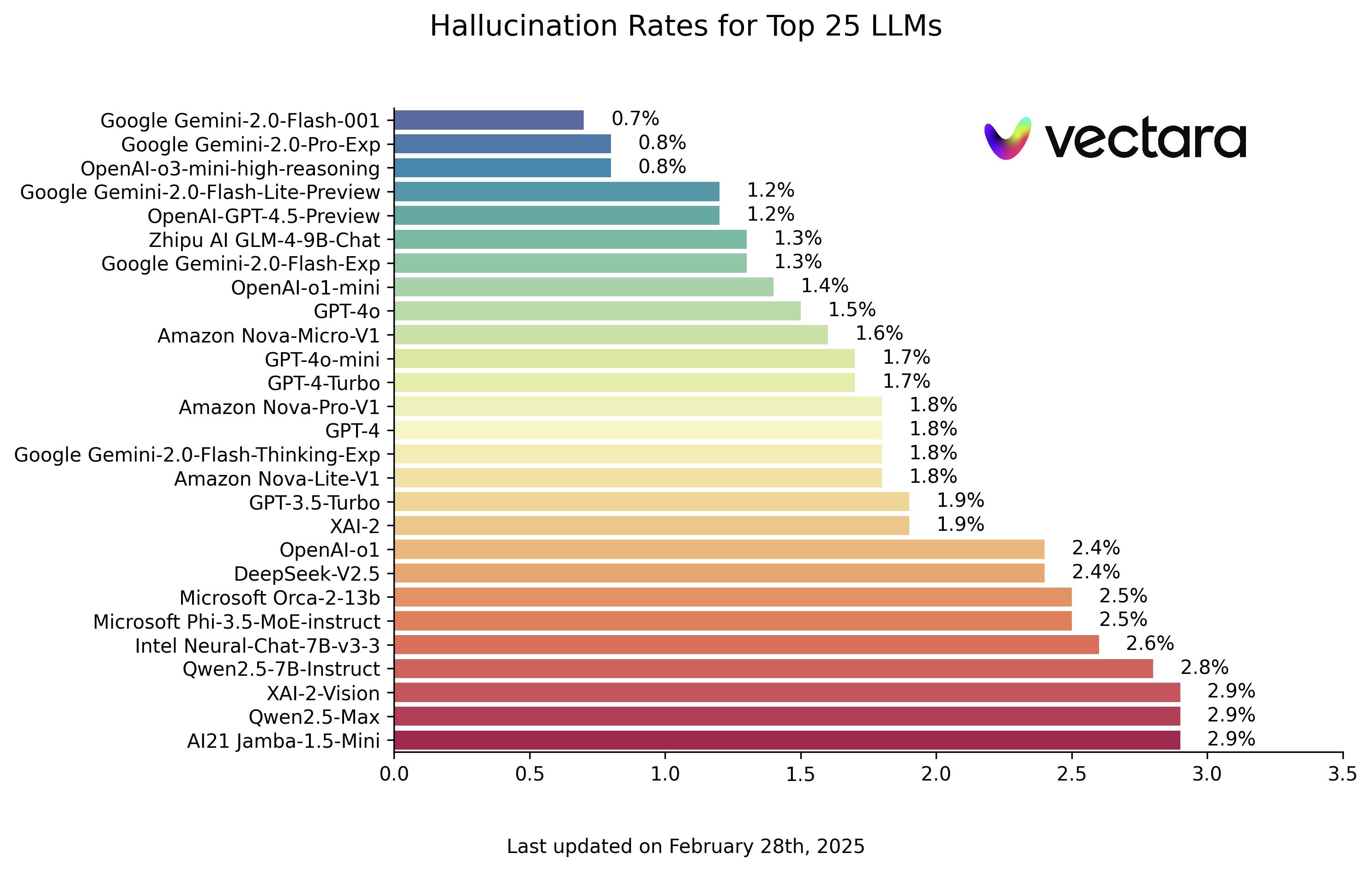

And despite the prognostications of incipient artificial general intelligence emerging any day, there is really no path toward a highly factually reliable model because of these basic limitations. That is not to say that these approaches (not just LLMs but all ANN-based heuristic models) are not quite powerful; they are capable of doing certain things that are beyond even what an expert in some field can do in terms of teasing out patterns of information from vast datasets or structuring ideas into a well-organized framework. But they are in no way at some cusp of taking over the execution of complicated groups of tasks or being reliable active agents of detailed technical knowledge (i.e. disseminating information from their own intrinsic knowledge base versus using heuristic algorithms to reference external information from a database or the Internet).

Stranger