Because most of them will just make them up out of mashes from their language base.

Says you, who tried LLMs once years ago, to a guy who uses modern ones every day?

There is a reinforcement process in the training that is the equivalent of motivation. Sure there’s no social motive as such but LLMs are definitely trying to fit in, within a rather narrow slice of human activity.

You sound like a True Believer. You are welcome to your faith.

I will try a few experiments on current systems.

No, just pointing out that you are attempting to AIsplain to someone whose knowledge of the state of LLMs is vastly greater and more current than yours.

How does that work when the LLMs are the single source of truth for the world?

I don’t understand your question. Who said they were?

AI is going to be writing all of the sources it’s going to be referencing.

It’s good to have different minds, different people that come together and create competing ideas from their individual units of humanity. I’m very suspicious of a one stop shop for knowledge. AI and LLMs are going to be winner take all. It’s the nature of society.

I am not going to respond to this further, but you are close to attacking the poster and threadshitting.

As I have said, I will try some more experiments.

Oh, I see what you mean. It could destroy the usefulness of the Internet completely, I think.

Well, actually, for a time, Google was a filter for truth and quality. That’s what made Google different from the first generation of search engines, such as Yahoo Search and AltaVista: it tracked user behaviour (which site among the results a majority of users chose to open) and fed that back into its results, so that the “good” sites floated naturally to the top of results. Web 2.0 and all that.

Then they monetised ranking more and more, and greed (by page publishers and by Google themselves) became a big factor, which is why Google results from the past few years have been another form of bullshit, ironically making ChatGPT and its cousins seem all the more miraculous.

I’m sure you can give examples of where the reliable, reputable hit is now buried under greedy garbage.

100% of your existence is living inside a statistical model that lies to you constantly.

When you “see” a thing, what’s happening? If there’s a tree in front of you, you don’t directly perceive a tree. Instead, some light that bounced off the tree reaches your eyes and your brain has to make sense of it.

There are an infinite number of things that your sensory input could be. Maybe it’s a picture of a tree. Maybe it’s a sculpture shaped like a tree. Maybe it’s a sculpture that isn’t shaped like a tree, but from the particular angle you’re at, lines up to look like one. Maybe it’s a tree but it’s much closer or farther away than you realize. Maybe you have a VR headset on that’s displaying a tree. And so on.

Nevertheless, the vast majority of the time your brain correctly decides that you saw a tree and triggers the thoughts and memories associated with it. Because of course most of the time it is in fact a tree. Your brain’s statistical model worked.

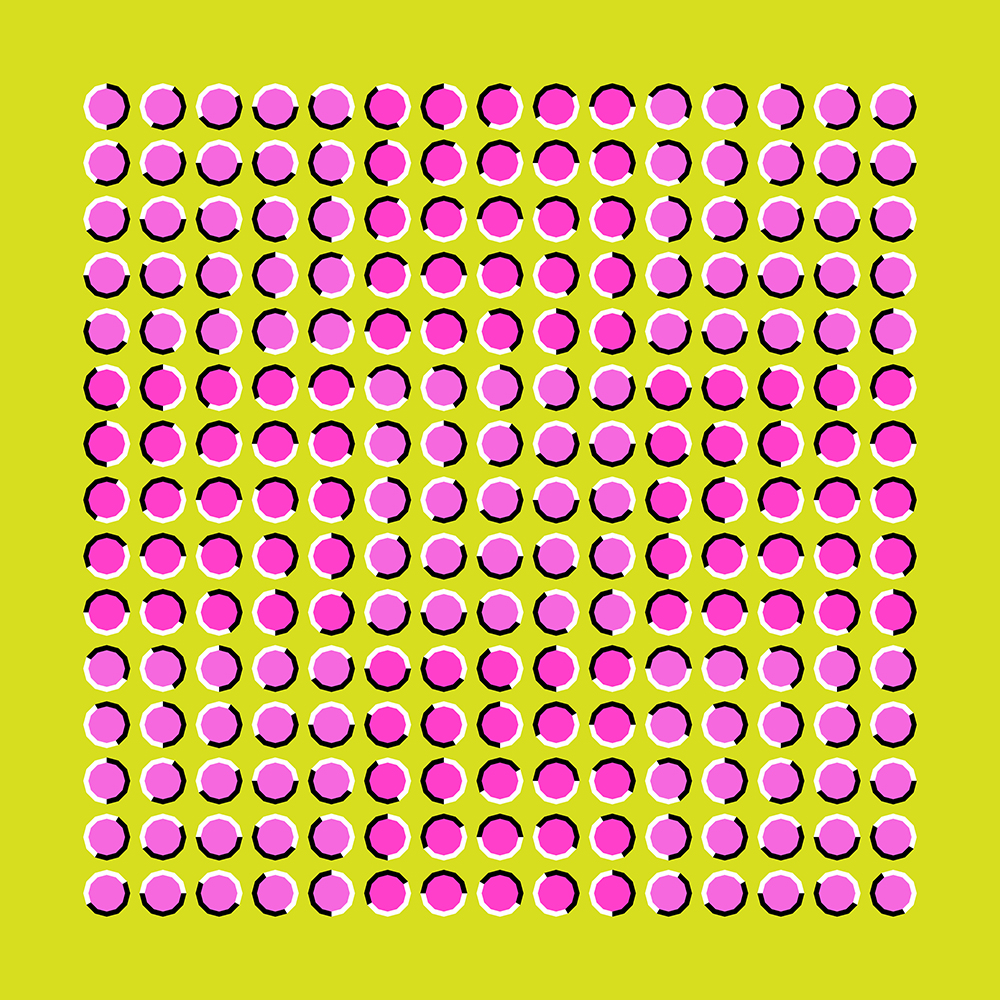

But sometimes it doesn’t. Why does this picture look like it’s wavy and moving?:

Your brain is lying to you. Something about this picture is triggering the circuitry that was trained to detect movement in natural scenes, and it’s misfiring here. The illusion persists even though you know it’s a misfire; you can’t help it.

Illusions are inherent to intelligence. They inevitably arise because we try to perceive a world that’s infinite in complexity with a sensory/processing device that is limited (and it has to be limited since it’s inside the world that it has to perceive). That necessarily means making guesses that will turn out to be wrong.

Humans do have a neocortex that allows us some degree of self-correction, so that we are not perfectly bound to the base statistics that underlie our sensory processing and subconscious. You can decide that the image above isn’t actually moving even if it still looks like it. LLMs and other AI models will probably need something like this eventually: a mechanism that can look back at the results and see if they make sense. Reasoning models already do this to a degree.

But they’ll still be imperfect, just as humans come to imperfect conclusions all the time, even in the presence of conflicting evidence. No way around it.

Did they really track user behavior?

I thought their original ‘secret sauce’ was that they checked how often a page was referenced by other pages by looking at the hyperlinks?

Yeah, that is approximately how their PageRank algorithm worked.
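For anyone curious what "counting links" looks like in practice, here is a minimal sketch of the textbook PageRank idea using power iteration. It is only an illustration: the `toy_web` graph and the page names are made up, the damping factor of 0.85 is the commonly cited textbook value, and none of this claims to match what Google actually runs.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Approximate PageRank scores for a small link graph.

    `links` maps each page to the list of pages it links to.
    """
    # Collect every page that appears as a source or as a link target.
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page gets a small baseline share (the "random jump" term).
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current score evenly among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outgoing links spreads its score over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: three pages link to "c", and "c" links back to "a".
toy_web = {
    "a": ["c"],
    "b": ["c"],
    "c": ["a"],
    "d": ["c"],
}
ranks = pagerank(toy_web)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph "c" comes out on top because three pages link to it, and "a" ranks well only because the highly ranked "c" links to it. That second effect, a link from an important page counting for more, is what separated this approach from simply counting links.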

Anyway, all this stuff is decades out of date because the “search engine optimization” types quickly figured out how to game the system. So it’s been an arms race the whole time.

There’s undoubtedly some conflict within Google over whether to put up the best results or the results that make them the most money, but they aren’t going out of their way to promote the trash that often rises to the top (since that doesn’t accomplish either goal). That’s just because they’re losing the arms race. I’m sure they’re hoping AI can help them here.

Oops, you’re right. The links are the key factor. I guess my (fallible) memory is also a bullshit generator at times. And I can’t tell at which times.

Wait until androids that can pass for human are a reality, and town hall meetings and rallies are packed with AI “supporters”.

No worries. I do try to be sure that anything I say from memory is verifiable by objective third party information. But my wife, for example, often ‘remembers’ things that never happened, and I sometimes have to go to some lengths to prove this to her…

Indeed. Was Stephen Byerley really a robot?

That seems to me just a restatement of the tired old saw that GPT doesn’t “really understand” anything. But the question of what constitutes “real understanding” is a subjective continuum that is ultimately just a meaningless semantic rabbit-hole. As I said earlier (and I thought you had agreed), the question of whether or not any advanced AI truly possesses “real understanding” can only be defined functionally and becomes semantically meaningless with very advanced systems that have developed emergent properties.

For example, I’m sure you’re familiar with the 1960s-era AI demonstration program developed by Joseph Weizenbaum called “Eliza”. Eliza was disguised as a non-directive psychotherapist, meaning that it never had to offer any real information, only encourage the user to keep participating. Thus when you said {whatever}, Eliza might respond with something like “why do you think {whatever}?” or just a bland “how does that make you feel?”.

The point here being that these were just preprogrammed rote responses that had no relevance whatsoever to anything the user was saying. Eliza clearly did not “understand” anything at all.
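To make the rote mechanism concrete, here is a rough sketch of an Eliza-style responder. This is not Weizenbaum's actual script: the two rules, the `REFLECTIONS` table, and the function names are all invented for illustration. It only shows the general trick of matching a pattern and echoing the user's own words back.

```python
import re

# Hypothetical pronoun swaps so the echoed fragment reads as a reply.
REFLECTIONS = {"i": "you", "my": "your", "me": "you", "am": "are"}

# Hypothetical rules: a pattern to look for and a canned template to fill in.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i think (.*)", re.IGNORECASE), "Why do you think {0}?"),
]

# Used when no rule matches anything in the input.
FALLBACK = "How does that make you feel?"


def reflect(fragment):
    """Swap first-person words for second-person ones, word by word."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(text):
    """Return a canned reply built from the first matching rule."""
    cleaned = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    return FALLBACK


print(respond("I think my boss hates me."))
print(respond("The weather was awful today."))
```

Nothing in this sketch models what a boss is or what hating means. The reply is assembled from string matching and substitution alone, which is the sense in which such a program does not "understand" its input.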

But when I ask GPT a fairly long question (as in my example in post #69) and the AI responds with a comprehensive answer that is directly relevant to what I asked, then the question of “understanding” has certainly moved into an entirely new realm, in even the most cynical interpretation.

Likewise with the ability of advanced LLMs to do effective language translation. The subtle linguistic and cultural nuances of idiomatic expressions have always been the bane of computerized translation, yet in my limited testing GPT has done such translations flawlessly. I can even ask it “what does this idiom really mean?” and it will tell me.

So where’s the “bullshit”?