You may as well try to include derivatives of complex order; why not…

Laplace transforms are cool, too. Transform your (linear, constant-coefficient) differential equation into an algebraic equation, solve that, and reverse the transform to get your solutions.
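To make that concrete, here is a small worked example. The equation y' + 2y = 0 with y(0) = 1 is just an illustrative choice, not something from upthread; Y(s) stands for the Laplace transform of y(t).

```latex
\mathcal{L}\{y'\} = sY(s) - y(0)
\;\Longrightarrow\; sY(s) - 1 + 2Y(s) = 0
\;\Longrightarrow\; Y(s) = \frac{1}{s + 2}
\;\Longrightarrow\; y(t) = e^{-2t}.
```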

For something like n x n matrices, it is well worth noting that it is relatively easy to define e.g. a holomorphic functional calculus so that things like \sin(X) and so forth are well-defined.

If I’m not misunderstanding you, that’s in effect the “interest rate” version I mentioned. Taking e = (1 + \frac{1}{N})^N for large N, we can also get e^D = (1 + \frac{D}{N})^N.

Since f(x + h) \approx f(x) + hf'(x) for small h, i.e. (1 + hD)f(x) \approx f(x + h), we can apply our definition step by step:

(1 + \frac{D}{N})^Nf(x) = (1 + \frac{D}{N})^{N-1}f(x + \frac{1}{N}) = (1 + \frac{D}{N})^{N-2}f(x + \frac{2}{N}), etc., until we get f(x + \frac{N}{N}). Larger/smaller shifts work the same way. It just follows the flow of the function at any given point.
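If you want to see the compounding numerically, here is a minimal sketch in plain Python. It assumes f is a polynomial (so D can be applied exactly to the coefficient list); the helper names and the sample cubic are made up for illustration.

```python
def derivative(coeffs):
    """Coefficients of p'(x), where coeffs[k] multiplies x**k."""
    return [k * c for k, c in enumerate(coeffs)][1:] or [0.0]

def apply_step(coeffs, n):
    """Apply the operator (1 + D/n) once to the polynomial."""
    d = derivative(coeffs)
    d = d + [0.0] * (len(coeffs) - len(d))  # pad so the lists line up
    return [c + dc / n for c, dc in zip(coeffs, d)]

def compound_shift(coeffs, n):
    """Apply (1 + D/n) a total of n times, i.e. (1 + D/n)^n."""
    for _ in range(n):
        coeffs = apply_step(coeffs, n)
    return coeffs

def evaluate(coeffs, x):
    return sum(c * x**k for k, c in enumerate(coeffs))

f = [1.0, -2.0, 0.0, 1.0]  # illustrative choice: f(x) = 1 - 2x + x^3
x = 0.5
exact = evaluate(f, x + 1.0)  # what e^D f(x) should give: f(x + 1)

for n in (1, 10, 100, 10000):
    approx = evaluate(compound_shift(f, n), x)
    print(n, approx, abs(approx - exact))
```

The printed error should shrink roughly like 1/N, the same way (1 + \frac{1}{N})^N creeps up on e.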

I actually take the exponential of matrices fairly often. If you have a state transition matrix, A, that describes how a system of variables changes over an infinitesimal amount of time, e^{A dt} tells you how the system will change over the finite amount of time, dt. Just multiply e^{A dt} times the vector of variables to get the new vector at time t+dt. And the original matrix A is essentially a derivative: A*v gives the time derivative of the variables in the vector v.

I recall seeing a similar application to that. Take some matrix A which applies some transition (a rotation, whatever) over some finite timestep. Then, e^{t \ln{A}} gives you a smooth application of that matrix over whatever timeframe you want. Of course, you have to compute \ln{A}…

Some of them I’ve learned. A=[0 1;-1 0] is an oscillator’s state transition matrix
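For anyone who wants to check the oscillator case, here is a rough sketch that exponentiates A*dt with a truncated Taylor series. The function names, the number of terms, and the step size dt = 0.3 are arbitrary choices for illustration; a real application would use a library routine rather than a raw series. Since A^2 = -I, the result should match [cos(dt) sin(dt); -sin(dt) cos(dt)].

```python
import math

def mat_mul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def mat_exp(a, terms=30):
    """Truncated Taylor series I + A + A^2/2! + ... (fine for small matrices)."""
    n = len(a)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]  # current term A^k / k!
    for k in range(1, terms):
        term = [[v / k for v in row] for row in mat_mul(term, a)]
        result = [[r + t for r, t in zip(rr, tr)]
                  for rr, tr in zip(result, term)]
    return result

A = [[0.0, 1.0], [-1.0, 0.0]]  # oscillator: x' = v, v' = -x
dt = 0.3                        # arbitrary finite time step
step = mat_exp([[dt * v for v in row] for row in A])

# Compare against the closed form [cos dt, sin dt; -sin dt, cos dt].
closed_form = [[math.cos(dt), math.sin(dt)], [-math.sin(dt), math.cos(dt)]]
print(step)
print(closed_form)

# Advance the state (position 1, velocity 0) by one step of length dt.
state = [1.0, 0.0]
new_state = [sum(step[i][j] * state[j] for j in range(2)) for i in range(2)]
print(new_state)
```

Multiplying by `step` over and over walks the state around the unit circle, which is the oscillation.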

Ah, Rodrigues’ rotation. I can assure you it had nothing to do with cross products or even vectors. The paper was published around 1840 (and has been republished by, and is available online from, the French mathematical society). It does correctly compute SO(3), the rotation group of the sphere, but in entirely classical form: ghastly equations involving the tangent of half the angle of rotation. It was done several years before Hamilton discovered quaternions, and the formulas he gave were correct. Hamilton’s formulas were not. They gave something like the rotation of twice the angle. Nowadays, the corrected form of Hamilton’s equations, based on conjugating by quaternions, is used for curve tracing in computer graphics.

Olinde Rodrigues was an interesting person. The family had been thrown out of Spain and settled in Southern France during the inquisition and gone into banking. His actual profession was banking; mathematics was an avocation. And I don’t think he ever published anything after that 1840 paper.

I guess the version I’ve seen is a modern representation of the original in vector/tensor form

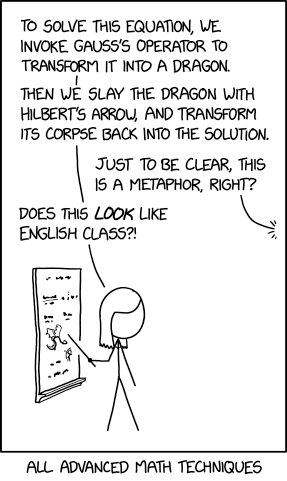

Of course, there’s an xkcd for that:

I included this in my notes when I taught differential equations recently.

Way back in my first college level math class (taken at the local junior college during my HS sophomore summer school session) the instructor informed us on Day One that “The best math magician is the best mathematician”. I very much doubt the saying was original with him, but it was the first time I had heard it and it’s stuck with me all these years.

The more sleight of hand tricks you know, the more any given problem becomes susceptible to understanding and slaying.

Yeah. One time I integrated x^3/(e^x-1) (if I’ve got that right - was years ago). That involved a bunch of tricks

In so many words that is exactly what is happening. We have a flow corresponding to translation.

Upon reflection, what is legitimately confusing, and important to take note of, in this thread is that there are several related things that all happen to coincide in this case: e to the power of a number (so, indeed, why would it a priori make sense to take a matrix or other operator instead of a number?), the exponential function in the function(al) sense (and its important properties, like its series expansion and the equation \frac{d}{dx}\exp(x)=\exp(x)), the exponential map in the context of Lie groups, and the exponential map in the sense of a Riemannian manifold.

Is there, in your opinion, any kind of “grand unified theory” for why these things are connected? It’s reasonably straightforward to connect them at an individual level–but harder to see why all of these interesting but disparate properties should have any relationship at all. Some things are fairly obvious, like why compound interest would relate to a function that is its own derivative, but translation operators, the exponential map in Lie groups, etc. are harder to grasp.

(e^dx)f(x)=f(x+1)

((e^dx)f(x)-f(x))=f(x+1)-f(x)

Divide by dx

((e^dx)f(x)-f(x))/dx=df/dx

Apply the engineer’s-torturing-math theorem

And we’ve got df=e^dx f

My favorite physicist-torturing-math is still Dirac’s bra and ket. Take half of an absolute value symbol and half of an expected-value symbol, and somehow turn it into a Hilbert vector or covector.

I believe the most important link comes from the differential-equation characterization of the exponential.

Forgive me (or, better, correct me) if I happen to get some details wrong, but consider any Lie group, say over the real numbers. Let X be any tangent vector at the identity. It defines a tangent to a curve, and, because everything is smooth, you will be able to integrate it to a 1-parameter subgroup \gamma(t) such that \gamma(0)=I (the identity element of the group) and \frac{d}{dt}\gamma(t)\big|_{t=0} = X. This will satisfy \gamma(t+s)=\gamma(t)\gamma(s), whence \frac{d}{dt}\gamma(t)=L_{\gamma(t)}X, the right side meaning the translation of X from the tangent space at I to the tangent space at \gamma(t).

Long story short, consider the particular case of a group of matrices. The group law is multiplication of matrices, and the differential equation just looks like \dot\gamma(t)=\gamma(t)X, with the initial condition \gamma(0)=I, so you solve it and get \gamma(t)=e^{tX}.
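As a quick sanity check on that last step (a sketch only, using the usual power series for the matrix exponential, which can be differentiated term by term because it converges absolutely):

```latex
e^{tX} = \sum_{k=0}^{\infty} \frac{t^k X^k}{k!}, \qquad
\frac{d}{dt}\, e^{tX} = \sum_{k=1}^{\infty} \frac{t^{k-1} X^k}{(k-1)!} = e^{tX} X, \qquad
e^{0 \cdot X} = I.
```

So \gamma(t)=e^{tX} satisfies both \dot\gamma(t)=\gamma(t)X and \gamma(0)=I.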

This is probably not what your instructor meant, but the best math magician I ever met was Persi Diaconis, a superb magician turned mathematician who took a discovery I had made (the “shuffle idempotents”) and turned it into a tool that he used to prove that it takes seven random shuffles to get a deck within 1% of perfectly random.

By the way, has anyone faced an integration problem that required Feynman’s trick to solve? (I’ve constructed a problem or two to try the trick on integrals I already could solve, but that’s not quite the same thing.)

Personally, I use the term “mathemagician” to refer to Gödel and those who followed in his footsteps. To wit: “Magic” is defined as that which cannot be explained. Gödel, in proving that there exist statements whose truth values cannot be logically determined, effectively proved that there exist things which cannot be explained. In other words, he mathematically proved the existence of magic. Magic, by its very nature, is impossible to study directly (because what can be studied can be understood), and yet, mathemagicians still manage to indirectly study it.