I don’t remember there being bitwise operators in some of the versions of BASIC I used back in the 1980s.

Which ones? C64 BASIC had bitwise ops, but the keywords were overloaded:

https://www.c64-wiki.com/wiki/AND_(BASIC)

It behaved as a logical op when it appeared in a boolean expression (such as the condition for an IF), and bitwise otherwise.

TRS-80 BASIC was much the same way. I never thought of it as overloaded: it just made sure the results of relational operations were always 0 or -1 and applied the machine-language operation to the operands.

It took me a while to figure out why they used -1 for true; that was a real light-bulb moment for me.
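For anyone who hasn't had that moment yet, here is a minimal Python sketch. It assumes 16-bit signed integers, as in many BASICs of that era, and the helper names are invented for illustration. In two's complement, -1 has every bit set, so a bitwise NOT turns it into 0 and back again, and bitwise AND/OR on 0 and -1 behave exactly like logical AND/OR.

```python
BITS = 16
MASK = (1 << BITS) - 1  # 0xFFFF


def to_signed(x: int) -> int:
    """Interpret the low 16 bits of x as a two's-complement value."""
    x &= MASK
    return x - (1 << BITS) if x & (1 << (BITS - 1)) else x


def basic_and(a: int, b: int) -> int:
    return to_signed(a & b)


def basic_or(a: int, b: int) -> int:
    return to_signed(a | b)


def basic_not(a: int) -> int:
    return to_signed(~a)


TRUE, FALSE = -1, 0  # what relational operators return in these BASICs

print(hex(TRUE & MASK))                               # 0xffff: every bit set
print(basic_not(TRUE), basic_not(FALSE))              # 0 -1
print(basic_and(TRUE, FALSE), basic_or(TRUE, FALSE))  # 0 -1
print(basic_not(1))  # -2: if "true" were 1, NOT true would still be nonzero
```

The design payoff is that one set of bitwise instructions serves both purposes, as long as the operands really are 0 or -1.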

Don’t make me quote Dijkstra on that language.

As for the main post, which is a must-read: there are standards (real or effective) for each level, and you can even virtualize various levels, so that something that appears to run on hardware actually doesn’t.

The number of levels is growing all the time. When I was learning this stuff there was no networking level, for instance.

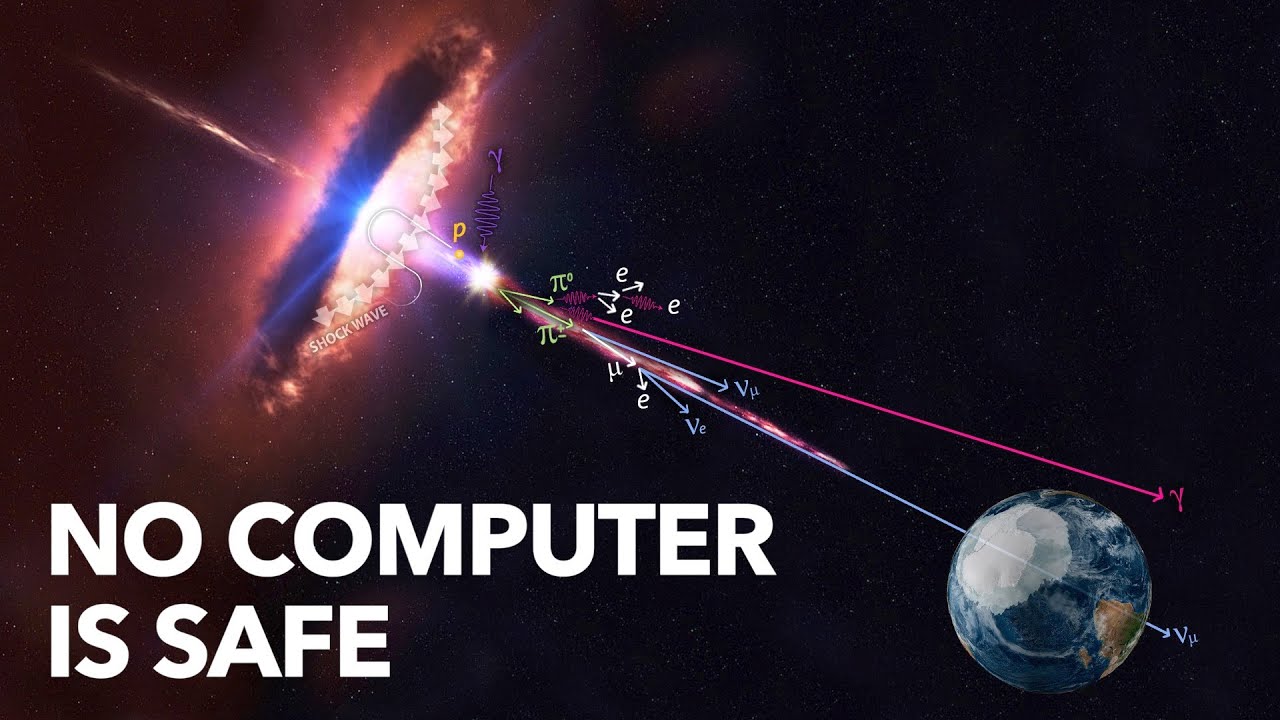

As for cosmic rays: they do hit. Our servers had a ROM inside them that captured information about these events and sent it back, with the customers’ permission, of course. One problem we could correlate with altitude. Cosmic rays primarily affect internal memories, since memories are denser than logic. But large memories have error detection and correction, and you are unlikely to see the effect of a bit flip unless you get an awful lot of them.
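To make "error detection and correction" concrete, here is a sketch of the textbook Hamming(7,4) code in Python. Three parity bits protect four data bits, and the XOR of the positions of all set bits (the syndrome) points straight at any single flipped bit. This is only an illustration; it assumes nothing about what those servers actually used, and real memory ECC typically uses wider SEC-DED codes over whole words.

```python
def hamming_encode(d: list[int]) -> list[int]:
    """Encode data bits [d1, d2, d3, d4] as a 7-bit codeword (positions 1..7)."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4  # parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # parity over positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming_decode(c: list[int]) -> tuple[list[int], int]:
    """Return (corrected data bits, position of the flipped bit or 0)."""
    c = list(c)
    syndrome = 0
    for pos in range(1, 8):
        if c[pos - 1]:
            syndrome ^= pos  # XOR the positions of all 1 bits
    if syndrome:
        c[syndrome - 1] ^= 1  # a single-bit error: flip it back
    return [c[2], c[4], c[5], c[6]], syndrome


word = hamming_encode([1, 0, 1, 1])
word[4] ^= 1                   # simulate a particle strike on position 5
print(hamming_decode(word))    # ([1, 0, 1, 1], 5)
```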

People have been worried about the impact of cosmic rays on logic. Random logic is not going to be a problem unless a bit flips right before the value is captured in a latch, or unless the latch itself is hit. I’ve seen research on how to deal with this kind of problem, but I haven’t seen any evidence that it has been an issue. I hired someone who did his PhD in this area just in case, but we never had to use him. I’m still active enough in the relevant areas that I think I would have noticed something.

BTW the one big issue we saw was from memories where the vendor changed a process without bothering to tell us.

Yeah, I suppose the difference with C-like languages is that an integer evaluated as a bool will be true for any non-zero value. “1 AND 2” is 0, which when taken as a condition is false. But in C, there’s a difference between “1 && 2” (true) and “1 & 2” (false).
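A quick Python sketch of the C-style trap, modelling C's `&&` and `&` with two small helper functions (the names are made up for illustration):

```python
def c_logical_and(a: int, b: int) -> int:
    """Model C's a && b: any nonzero operand counts as true; result is 1 or 0."""
    return 1 if a != 0 and b != 0 else 0


def c_bitwise_and(a: int, b: int) -> int:
    """Model C's a & b (Python's & uses two's-complement semantics for negatives)."""
    return a & b


print(c_logical_and(1, 2))    # 1: both operands are nonzero
print(c_bitwise_and(1, 2))    # 0: 0b01 and 0b10 share no bits
print(c_bitwise_and(-1, -1))  # -1: BASIC-style 0/-1 booleans avoid the trap
```

Two values can each be "true" on their own and still AND bitwise to 0, which is exactly why C keeps the two operators separate.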

Indeed. And at this point, it could be thought of as more like a tree. Something like a network controller branches off at a low hardware level, but contains its own set of layers, with the physical electrical connections at the bottom, and applications like web browsers or file shares at the top. In between, there are numerous layers building more complex things from simpler ones.

Sometimes it’s necessary to cross layers to fix bugs, and we have tools for that. For instance, an oscilloscope might contain a logic analyzer, letting you see both the raw electrical signalling and the bytes that have been encoded into the signal, and possibly determine whether there was an error somewhere along the way.

In the software world, we have debuggers that can display raw memory, assembly code, and high level source code at once. If there’s a mistake in translation somewhere, the debuggers can help you.

These tools can only cross a few layers at best, though.

Indeed. Our company’s products have error correction on all of the internal memories, but for external memory it depends on whether you bought the consumer- or server-grade product. I expect that eventually everything will require correction as memory gets more sensitive.

I am trying to mentally model a computer process that reads data from the firmware chip and causes the manufacturer’s name to display on the monitor.

I believe the power supply unit steps down 120v 60Hz AC from the wall outlet to 5v 60Hz DC for the motherboard. But looking at the motherboard with its many chips, I can’t follow the circuit visually any further than that. As I understand it, the CPU microchip has a small crystal oscillator (clock) that converts this 5v 60 Hz direct current back to alternating current at the advertised clock speed (1.6 GHz for an i5, 750 kHz for the Intel 4004), but I don’t know the range for the high and low state (~5v/~.5v?).

I think I have a general understanding of transistor logic, and I can envision a circuit with memory cell flip-flops inside the processing unit (registers and caches) and physical data busses with one bit per pin. I think this is why the number of pins in the CPU socket has risen from 16 for the Intel 4004, to 18 for the 8008, to 40 on the 8086, to 320 for the P5 Pentium; and now modern Intel chips do it the other way around, with something like 1200 pins coming up from the motherboard and making contact with the underside of the chip.

I assume the signal coming over the data bus does not rise and fall with the CPU clock but is a direct current. I do not understand how the data bus connects with the correct device, at least for older computers that didn’t have hundreds of pins. The 8008 apparently had a 14-bit address bus, which means 14 of the 18 pins were wired to a memory controller and would be used to determine which memory cells are accessed. That only leaves 4 pins (4 bits) to communicate the data to and from the memory controller. 2^14 = 16,384, and 16,384 x 4 bits is only 8 KB, half of the advertised 16 KB of accessible memory. So I’m missing something. Putting that aside, there would be no pins left to communicate data between the CPU and any other peripheral, such as a keyboard or monitor. So how did that work??? Does the monitor poll memory directly? Does the keyboard wire straight into memory for the CPU to poll? How do they deal with race conditions?

~Max

Are you talking about a specific system, e.g., the original IBM PC? That had an Intel 8237A DMA controller for I/O transfers. RAM had to be refreshed every few milliseconds. Keyboard I/O went through chips like the Intel 8255 (I don’t even remember the details), but if you peruse the BIOS sources you will see that the CPU itself does not have to do anything fancy. Also, interrupts can be masked if you are in the middle of a critical interrupt service routine.

There’s no “Hz” for DC.

A modern motherboard has many voltages on it. A typical desktop will have inputs at 12 V, 5 V, and 3.3 V. However, these voltages will be further reduced. Modern chips run at between about 0.8 V and 1.4 V. Some chips have onboard regulators so they can take a higher voltage as input and convert it to something lower.

Inter-chip communication varies. High speed signals are generally differential. That is, the actual voltage does not matter much (often something modest like 1.2 V or 1.8 V); what matters is the difference between two lines. That difference may only be 0.1 V or so. The low voltage swing reduces power, and the differential nature makes it fairly easy to build sensitive receivers.

There are multiple crystals on the board to generate frequencies. They don’t run directly at the full multi-GHz frequency; instead they are something more modest, in the MHz range, and then get multiplied by what’s called a phase-locked loop (PLL). The PLL tracks the incoming signal and adjusts itself to provide multiple output pulses per input.
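Here is a deliberately simplified Python model of the "track and adjust" idea. It is a frequency-only feedback loop, not a real PLL (a real one compares phase using a phase detector, loop filter, and voltage-controlled oscillator), and the 100 MHz reference and multiplier of 36 are hypothetical numbers. The oscillator output is divided by the multiplier, compared with the reference, and nudged until the two match, at which point the output runs at multiplier x reference.

```python
def lock_loop(ref_hz: float, multiplier: int, vco_hz: float,
              gain: float = 0.5, cycles: int = 60) -> float:
    """Nudge the oscillator until vco_hz / multiplier matches ref_hz."""
    for _ in range(cycles):
        divided = vco_hz / multiplier         # feedback divider
        error = ref_hz - divided              # frequency error vs. the crystal
        vco_hz += gain * multiplier * error   # adjust the oscillator
    return vco_hz


# Hypothetical numbers: 100 MHz reference crystal, multiply by 36.
out = lock_loop(ref_hz=100e6, multiplier=36, vco_hz=1e9)
print(f"{out / 1e9:.3f} GHz")  # 3.600 GHz
```

With a gain of 0.5 the error halves on every iteration, so the loop settles quickly; a real loop filter is tuned for stability and jitter, not just speed.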

It’s producing a square wave at the clock rate, not the typical AC sine wave.

Actually, it does rise and fall in sync with the clock. The same pins on the CPU may be bidirectional, and their function changes according to the phase of the clock.

In a simplistic configuration the address is put on the bus during one phase of the clock. That address selects a memory location or I/O port. In the next phase of the clock the CPU could receive data on the bus from a read operation, or the CPU could send data on the bus for a write operation. Status pins on the CPU can specify if the bus is in address mode, read mode, or write mode. It can get much more complex than that, but multiplexing of the bus is the key.
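A toy Python model of that multiplexing, assuming a single shared bus value per clock phase and a hypothetical `BusPhase` enum standing in for the status pins. The same `bus` argument carries an address on one phase and data on the next; the memory simply latches the address and remembers it.

```python
from enum import Enum
from typing import Optional


class BusPhase(Enum):
    """Hypothetical status-pin encodings for what the shared bus carries."""
    ADDRESS = 1
    READ = 2
    WRITE = 3


class MultiplexedMemory:
    """Memory on a shared bus: latch the address, then move data on a later phase."""

    def __init__(self, size: int) -> None:
        self.cells = [0] * size
        self.latched_address: Optional[int] = None

    def clock(self, phase: BusPhase, bus: Optional[int]) -> Optional[int]:
        if phase is BusPhase.ADDRESS:
            self.latched_address = bus              # the bus carries an address
            return None
        if self.latched_address is None:
            raise RuntimeError("data phase without an address phase")
        if phase is BusPhase.WRITE:
            self.cells[self.latched_address] = bus  # CPU drives the data
            return None
        return self.cells[self.latched_address]     # READ: memory drives the data


mem = MultiplexedMemory(256)
mem.clock(BusPhase.ADDRESS, 0x42)
mem.clock(BusPhase.WRITE, 7)
mem.clock(BusPhase.ADDRESS, 0x42)
print(mem.clock(BusPhase.READ, None))  # 7
```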

You are looking at too low a level. The power supplies don’t really matter. Modern computer systems have all manner of different power supplies for various reasons, but you can ignore them all.

Clocks are important, but again, you can ignore the minutiae of the different clocks present. Just think in terms of one single clock for the entire system. (In general all the various clocks in the system are derived from a master clock.) The idea of a clock is probably one of the most important. Just think in terms of a master clock and logic in the entire computer that changes state on each clock tick.

So, this brings you to the basic model of the system. There are logic elements that maintain state. That state is (usually) either a high or a low voltage level. One of the logic levels seen by the system is the clock. When the clock changes state those logic elements that are connected to the clock may change their state. It is this change of system state on each clock tick that steps the low level operation of the entire system.

Some logic is tri-state. This is essentially true, false, and don’t say anything. This is important because you may need to drive the output from different sub-systems onto the same wire. Only one logic output should ever drive a wire. Otherwise they fight, and things don’t end well. So some logic elements have another input that controls whether they output any value at all. One can see that this allows different parts of the system to drive logic levels onto a common bus.
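A small Python sketch of how a shared wire resolves, modelling the high-impedance ("don't say anything") state as `None`. The function name is made up, and real hardware contention shows up as bad voltages and heat, not an exception; raising an error is just this model's way of saying "things don't end well".

```python
from typing import Optional

HIGH_Z = None  # a tri-state output that is "not saying anything"


def resolve_bus(drivers: list[Optional[int]]) -> Optional[int]:
    """Return the value on a shared wire, given every attached output."""
    active = [v for v in drivers if v is not HIGH_Z]
    if len(active) > 1:
        raise RuntimeError(f"bus contention: {len(active)} outputs driving")
    return active[0] if active else HIGH_Z  # nobody driving: the line floats


print(resolve_bus([HIGH_Z, 1, HIGH_Z]))  # 1
print(resolve_bus([HIGH_Z, HIGH_Z]))     # None
```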

Generally you can divide the system into the CPU (which contains the ALU, the arithmetic and logic unit), memory, and IO. Ignore caches and other optimisations.

If the CPU needs to read from memory, it initiates a memory read cycle. This takes a number of clock cycles. Typically the cycle starts on a clock edge, that is, a change in the clock's logic level. On the first transition the CPU may drive the system bus with the address of the unit of memory it wants to read. It will also drive some control lines that indicate what sort of transaction this is; in this case, a memory read. The memory controller sees the read request and latches the values on the bus.

Internally, the memory controller steps through a set of operations, one step per clock cycle, to obtain the needed data. Memory is laid out as an array, so to find the right data the memory controller splits the address it received into a row address and a column address. It places these values in sequence onto the memory system’s internal bus, which is what all the memory chips live on. On one clock cycle it drives the row address onto the memory bus, and also drives a wire that tells all the memory chips that this is a row address: the row address strobe (RAS). The memory chips see this and latch the value. Then the same happens for the column address, using the column address strobe (CAS). Then a logic line that requests a read is driven, and the memory devices will (in a very simple system) each deliver one bit onto the memory bus. The memory controller latches this value.

On the next system bus clock cycle the memory controller drives the data value onto the system bus. Since the CPU initiated the memory read, it latches the value on the system bus on that clock cycle, thus receiving the value.

Notice that in a very simple system design like this, it is possible to have both memory addresses and data on the same bus. They are all just logic levels. Some elements of the system listen to the bus, and others drive values onto it. If an element is not involved in the bus cycle, it leaves its bus driver logic in tri-state so that it does not affect what is happening on the bus.
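A stripped-down Python model of the row/column split, assuming a hypothetical 256 x 256 array of 1-bit cells with the upper address bits selecting the row. Timing, refresh, and the separate read/write-enable line are all left out; the point is only that one flat address becomes two smaller values strobed in one after the other.

```python
from typing import Optional

ROW_BITS = 8  # hypothetical 256 x 256 array of 1-bit cells
COL_BITS = 8


def split_address(addr: int) -> tuple[int, int]:
    """Split a flat address into (row, column); upper bits select the row here."""
    return addr >> COL_BITS, addr & ((1 << COL_BITS) - 1)


class DramChip:
    def __init__(self) -> None:
        self.cells = [[0] * (1 << COL_BITS) for _ in range(1 << ROW_BITS)]
        self.open_row: Optional[list[int]] = None

    def ras(self, row: int) -> None:
        self.open_row = self.cells[row]  # latch the row address, open the row

    def cas_read(self, col: int) -> int:
        return self.open_row[col]        # latch the column, drive the bit out

    def cas_write(self, col: int, bit: int) -> None:
        self.open_row[col] = bit


def controller_access(chip: DramChip, addr: int,
                      write_bit: Optional[int] = None) -> int:
    row, col = split_address(addr)
    chip.ras(row)                        # step 1: row address + RAS
    if write_bit is not None:
        chip.cas_write(col, write_bit)   # step 2: column address + CAS (write)
        return write_bit
    return chip.cas_read(col)            # step 2: column address + CAS (read)


chip = DramChip()
controller_access(chip, 0x1234, write_bit=1)
print(split_address(0x1234))           # (18, 52)
print(controller_access(chip, 0x1234))  # 1
```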

IO can be done in two ways. The system may have a separate bus for IO, which means another whole set of bus wires coming out of the CPU. Each individual IO device lives on this bus. Operations on the IO bus occur in much the same manner as on the bus used to talk to the memory. But instead of memory responding to addresses, different IO devices can be configured to respond at different addresses. Back in the day you used to set switches on a device card to configure what address a device lived at. (It is a bit messier than that in some systems, but the idea is there.) A common paradigm is to have IO devices look like a slab of memory locations. Each location has a specific purpose. What gets fun is that reading or writing to one of these locations causes the IO device to perform some action.

For instance, reading from a specific location in a serial IO controller may read the next available character. So each read operation from the same location gets different values. The IO controller uses the read operation to trigger its operation. A write may cause the controller to output a character. (Really awful IO controllers were built where a read or a write to the same location did different things.)
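A toy Python serial controller showing a register whose reads have side effects. The register offsets, the status-bit meaning, and the method names are all hypothetical; real controllers each define their own layout.

```python
from collections import deque


class SerialController:
    """Toy serial controller exposed as a tiny block of registers."""
    DATA = 0    # hypothetical register offsets
    STATUS = 1

    def __init__(self) -> None:
        self.rx_fifo: deque[int] = deque()
        self.sent: list[int] = []

    def line_receives(self, byte: int) -> None:
        self.rx_fifo.append(byte)  # a character arrives from the outside world

    def read(self, offset: int) -> int:
        if offset == self.DATA:
            # Side effect: reading DATA consumes the next received character.
            return self.rx_fifo.popleft() if self.rx_fifo else 0
        if offset == self.STATUS:
            return 1 if self.rx_fifo else 0  # bit 0: "character available"
        raise ValueError(f"no register at offset {offset}")

    def write(self, offset: int, value: int) -> None:
        if offset == self.DATA:
            self.sent.append(value)  # side effect: transmit a character
        else:
            raise ValueError(f"register {offset} is read-only or absent")


uart = SerialController()
uart.line_receives(ord("H"))
uart.line_receives(ord("i"))
# Two reads of the same location return different values.
print(chr(uart.read(SerialController.DATA)), chr(uart.read(SerialController.DATA)))  # H i
```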

To access a disk you may write a set of numbers (platter, cylinder, sector) into a set of device registers, then write a command code into another device register. (All manner of stuff would be expected to happen after this.)
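A minimal Python sketch of that register-then-command pattern. The register map, the command code, the 512-byte sector, and the dictionary standing in for the platters are all hypothetical; the point is that the parameter writes do nothing on their own, and the write to the command register triggers the operation.

```python
class DiskController:
    """Toy disk controller: load the parameter registers, then write a command."""
    # Hypothetical register map; real controllers all differ.
    PLATTER, CYLINDER, SECTOR, COMMAND = range(4)
    CMD_READ = 0x01
    SECTOR_SIZE = 512

    def __init__(self, media: dict[tuple[int, int, int], bytes]) -> None:
        self.media = media
        self.regs = [0, 0, 0, 0]
        self.buffer = b""

    def write(self, reg: int, value: int) -> None:
        self.regs[reg] = value
        if reg == self.COMMAND and value == self.CMD_READ:
            # Writing the command register is what starts the operation.
            where = (self.regs[self.PLATTER], self.regs[self.CYLINDER],
                     self.regs[self.SECTOR])
            self.buffer = self.media.get(where, bytes(self.SECTOR_SIZE))


disk = DiskController({(0, 12, 3): b"hello".ljust(512, b"\0")})
disk.write(DiskController.PLATTER, 0)
disk.write(DiskController.CYLINDER, 12)
disk.write(DiskController.SECTOR, 3)
disk.write(DiskController.COMMAND, DiskController.CMD_READ)
print(disk.buffer[:5])  # b'hello'
```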

One might note that it should be quite possible to combine the IO bus and the memory bus into a single general-purpose bus. One result can be that IO devices just look like memory addresses. This is known as memory-mapped IO. A useful aspect of this is that it can allow IO devices themselves to access system memory, so more advanced IO devices can be set up to deliver or take data directly to and from memory without the CPU being involved. This is known as direct memory access (DMA). It requires a more sophisticated bus control protocol. But no matter what, these protocols just step through a set of states, one step on each clock tick.
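A Python sketch of a single shared bus with an address decoder, plus a DMA-style copy loop. The memory map is hypothetical, and a second `Ram` object stands in for a device's on-board buffer; in real hardware the DMA engine would be mastering the bus itself rather than running as a function.

```python
class Ram:
    def __init__(self, size: int) -> None:
        self.data = bytearray(size)

    def read(self, offset: int) -> int:
        return self.data[offset]

    def write(self, offset: int, value: int) -> None:
        self.data[offset] = value


class SystemBus:
    """One bus for everything: an address decoder routes each access."""

    def __init__(self) -> None:
        self.regions = []  # list of (base, size, device)

    def attach(self, base: int, size: int, device) -> None:
        self.regions.append((base, size, device))

    def _decode(self, addr: int):
        for base, size, device in self.regions:
            if base <= addr < base + size:
                return device, addr - base
        raise ValueError(f"bus error: nothing at {addr:#06x}")

    def read(self, addr: int) -> int:
        device, offset = self._decode(addr)
        return device.read(offset)

    def write(self, addr: int, value: int) -> None:
        device, offset = self._decode(addr)
        device.write(offset, value)


def dma_copy(bus: SystemBus, src: int, dst: int, count: int) -> None:
    """Device-side transfer loop: data moves without the CPU touching it."""
    for i in range(count):
        bus.write(dst + i, bus.read(src + i))


bus = SystemBus()
bus.attach(0x0000, 0x8000, Ram(0x8000))  # main memory
nic = Ram(16)                            # stand-in for a device's buffer
bus.attach(0xC000, 16, nic)              # the device appears as addresses
bus.write(0x0100, 0x41)
dma_copy(bus, 0x0100, 0xC000, 1)
print(nic.read(0))  # 65
```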

These protocols are a core part of the system. Typically they can be defined as finite state automata, or just a “state machine.” At any given time they are in one of a defined set of states. On each clock cycle they use their current state and an input value to step to another of these states. A lot of the system is built from cooperating state machines: the memory system, the bus controllers, really a huge part of the system.
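A tiny Python state machine in that spirit. The states and signal names describe a made-up bus handshake, not any real protocol; each call to `step` is one clock tick, and when no transition matches, the machine simply holds its state (here, waiting for a slow device to say "ready").

```python
from typing import Optional

ANY = "*"  # wildcard input

# Hypothetical bus-controller protocol: (state, input) -> next state.
TRANSITIONS = {
    ("IDLE", "request"): "ADDRESS",
    ("ADDRESS", ANY): "WAIT",
    ("WAIT", "ready"): "DATA",
    ("DATA", ANY): "IDLE",
}


def step(state: str, signal: Optional[str]) -> str:
    """One clock tick: pick the next state from the current state and input."""
    if (state, signal) in TRANSITIONS:
        return TRANSITIONS[(state, signal)]
    return TRANSITIONS.get((state, ANY), state)  # no match: hold the state


def run(signals: list[Optional[str]], state: str = "IDLE") -> list[str]:
    history = []
    for signal in signals:  # one input sample per clock tick
        state = step(state, signal)
        history.append(state)
    return history


print(run(["request", None, None, "ready", None]))
# ['ADDRESS', 'WAIT', 'WAIT', 'DATA', 'IDLE']
```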

When it comes to IO, things happen in the outside world at a different pace than inside the computer. A simple way to manage this is to allow the IO devices to interrupt the system. This leads to all manner of evil issues. But state machines come to the rescue: the control protocols realised in these state machines just become more complex as they deal with the additional complexity of asynchronous activity. The protocol can see an interrupt asserted (probably on yet another wire on the system or IO bus) and will simply sequence things to interleave the pending and new requests appropriately.

Eventually the CPU will see the interrupt wire asserted, which triggers it to save what it was doing and then jump to code at a predetermined location. That code works out which device asserted the interrupt and then deals with it. For instance, a serial line controller might have received a new character. The interrupt service routine will read the character (from the special device register), put the value somewhere, and then return to what the CPU was doing prior to the interrupt. Thus asynchronous device operation is interleaved with the normal operation of code on the CPU.
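A toy Python model of that sequence, assuming a single serial device (so the handler doesn't need to work out which device interrupted) and a hypothetical handler address. Between instructions the CPU checks the interrupt wire; if it is asserted, it saves the program counter, masks interrupts, runs the handler, and then resumes exactly where it left off.

```python
from collections import deque

HANDLER_ADDRESS = 0x100  # hypothetical "predetermined location"


class Machine:
    def __init__(self) -> None:
        self.pc = 0
        self.irq_line = False
        self.interrupts_enabled = True
        self.uart_rx: deque[str] = deque()    # the serial controller's receive register
        self.received: list[str] = []         # where the ISR puts characters
        self.trace: list[tuple[str, int]] = []

    def uart_receives(self, ch: str) -> None:
        self.uart_rx.append(ch)
        self.irq_line = True                  # device asserts the interrupt wire

    def serial_isr(self) -> None:
        self.received.append(self.uart_rx.popleft())  # read the device register
        self.irq_line = bool(self.uart_rx)            # deasserted once drained

    def step(self) -> None:
        if self.irq_line and self.interrupts_enabled:
            saved_pc = self.pc                # save what we were doing
            self.interrupts_enabled = False   # mask interrupts during the ISR
            self.pc = HANDLER_ADDRESS         # jump to the handler
            self.serial_isr()
            self.interrupts_enabled = True
            self.pc = saved_pc                # return to the interrupted code
            self.trace.append(("irq", saved_pc))
        else:
            self.trace.append(("exec", self.pc))
            self.pc += 1                      # "execute" one instruction


m = Machine()
m.step()
m.step()
m.uart_receives("A")
m.step()
m.step()
print(m.trace)     # [('exec', 0), ('exec', 1), ('irq', 2), ('exec', 2)]
print(m.received)  # ['A']
```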

This was a long but interesting video from Veritasium on cosmic (and terrestrial) rays and how they interact with computers:

Not to put too fine a point on it, but AC is V+<->V- whereas computer clocks are mostly not zero-crossing but stay on the same side, getting close to zero but not changing polarity.

It’s even more complicated than that. The processors I worked on had a ROM in which was stored the minimal voltage required to meet the speed requirements, which was figured out during the test procedure (package test, not wafer test) and then burned in. This was done by starting with a relatively high voltage and decreasing it in steps until the test failed. That was done to save power. The on-board voltage generator read the ROM and adjusted itself.
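The step-down search described above can be sketched in Python. The starting voltage, step size, floor, and the pass/fail function are all hypothetical stand-ins for the real test program; the loop records the last voltage that passed before the first failure.

```python
from typing import Callable, Optional


def find_min_voltage(passes: Callable[[float], bool], start_v: float = 1.30,
                     step_v: float = 0.01, floor_v: float = 0.50) -> Optional[float]:
    """Step the supply down from start_v until the test fails.

    Returns the last voltage that passed, or None if even start_v failed.
    """
    last_good = None
    v = start_v
    while v >= floor_v and passes(v):
        last_good = v
        v = round(v - step_v, 6)  # round to avoid accumulating float error
    return last_good


# Hypothetical part that meets its speed target at 0.87 V or above.
print(find_min_voltage(lambda v: v >= 0.87))  # 0.87
```

The result is the value that would then be burned into the ROM for the on-board regulator to read.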

I was going to mention the PLL, but I’m glad you did, since that is analog stuff. The clock then has to be distributed across the chip, buffered so it remains strong enough to work, and routed so there is minimal clock skew between the flops it drives. There are usually several clock domains as well. For instance, the blocks running the I/O run much slower than the blocks containing the ALU.

When we were moving to a new process node, we’d create a test chip, and then a team of people would go to Los Alamos, where you could rent a beam for a few days. They’d zap a bunch of the test chips (which were simple, so errors were easy to find) and see what happened. The test chips carried examples of the various cells in the cell library, plus memory cells, so that we could discover whether any were susceptible to cosmic rays.

We were making chips for highly reliable servers, I doubt everyone goes to this much trouble.

It’s also done to Make More Money.

Some chips will, by luck, use just a bit less power than others (i.e., they run at a lower voltage for the same clock rate). Maybe the fab was just nicely tuned that day, or maybe the chips in one part of the wafer were just right in the sweet spot.

So you test each chip and find the relationship between voltage and reliable operation at different clock rates. It also depends on temperature and some other things. The worst chips you sell for consumer desktop systems, where power isn’t a big deal and they aren’t typically running 24 hours a day. The more efficient ones you sell for laptops, which have to run on batteries, or for servers, where power and cooling for a big datacenter become significant. Obviously, you charge more for these; they’re better chips.

Without this, you’d have to basically qualify everything for the same worst case. Which means leaving performance and/or power on the table, or even throwing away a bunch of chips that are perfectly fine with a bit more juice, but are worse than some arbitrary threshold.
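A toy Python version of the binning step, assuming each chip has already been characterised by the lowest supply voltage at which it passed at the target clock rate. The thresholds and market names are hypothetical; real binning considers several clock rates, temperature, and more.

```python
from dataclasses import dataclass


@dataclass
class TestedChip:
    serial: str
    min_voltage: float  # lowest supply that passed at the target clock rate


# Hypothetical thresholds, best bin first: (max allowed voltage, market).
BINS = [(0.85, "laptop/server"), (0.95, "desktop")]


def assign_bin(chip: TestedChip) -> str:
    for limit, market in BINS:
        if chip.min_voltage <= limit:
            return market
    return "reject"  # or re-test at a lower clock rate


chips = [TestedChip("A", 0.82), TestedChip("B", 0.91), TestedChip("C", 1.02)]
print({c.serial: assign_bin(c) for c in chips})
# {'A': 'laptop/server', 'B': 'desktop', 'C': 'reject'}
```

With a single threshold instead of a list, chip B would have to be either sold into the demanding market or thrown away, which is the "worst case" problem described above.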

Also known as chip binning. (Which sounds like a name for a professional golfer.)

The Intel 8008 support page - 8008 data.

If I understand this correctly, there are 8 data lines, and the S0, S1, and S2 pins indicate whether the data lines are carrying the low or high byte of the address, or data (in or out).
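This also resolves the 8 KB vs 16 KB puzzle: the address goes out over the same 8 lines in two pieces, and afterward those lines carry a full byte of data, so each of the 2^14 = 16,384 addresses holds 8 bits, giving 16 KB. Here is a Python sketch of the split, with invented function names. As I read the datasheet, the second address byte also carries two cycle-type bits in its top positions; they are simply masked off here.

```python
def address_bytes(addr: int) -> tuple[int, int]:
    """Split a 14-bit address into the two bytes sent over D0-D7."""
    if not 0 <= addr < 1 << 14:
        raise ValueError("8008 addresses are 14 bits")
    low = addr & 0xFF          # first address cycle: bits 0-7
    high = (addr >> 8) & 0x3F  # second address cycle: bits 8-13
    return low, high


def latch_address(low: int, high: int) -> int:
    """What external latches reassemble from the two cycles."""
    return (high << 8) | low


low, high = address_bytes(0x3ABC)
print(hex(low), hex(high))            # 0xbc 0x3a
print(hex(latch_address(low, high)))  # 0x3abc
print(2 ** 14 // 1024, "KB")          # 16 KB: 16,384 addresses x 1 byte each
```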

You can find all sorts of data sheets for assorted processors online. Somewhere in my “stuff” I have the Radio-Electronics Magazine reprint of plans for building your own 8008-based homebuilt computer back around 1975, including the circuit board layout.

I also have my Comp Sci textbook from 1975, with a walk-through on how to build a (theoretical) CPU from first principles (gates) with the gates to add data onto the internal data bus, into the adder, set address bus, instruction counter, microprogramming matrix to activate the gates in sequence, etc.

The key to “minus” in binary is two’s complement math, which eliminates having to do special work to handle positive and negative integers differently. Basically, if you have 16 bits, the highest bit is the sign indicator. A negative integer is stored as the bitwise inverse of its magnitude plus 1 (equivalently, the inverse of its magnitude minus 1).
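A short Python illustration of 16-bit two's complement, using made-up helper names. Negation is "invert every bit, then add 1", the top bit reads as the sign, and ordinary unsigned addition (with the carry out of bit 15 discarded) gives correct signed results with no special cases.

```python
BITS = 16
MASK = (1 << BITS) - 1


def to_pattern(n: int) -> int:
    """16-bit two's-complement bit pattern of n."""
    return n & MASK


def negate(pattern: int) -> int:
    """Negate a pattern: invert every bit, then add 1 (mod 2**16)."""
    return (~pattern + 1) & MASK


def from_pattern(pattern: int) -> int:
    """Read a pattern back; the top bit is the sign."""
    return pattern - (1 << BITS) if pattern >> (BITS - 1) else pattern


print(hex(negate(to_pattern(5))))  # 0xfffb
print(from_pattern(0xFFFB))        # -5
# Plain unsigned addition works for signed values too: 7 + (-5) = 2.
print(from_pattern((to_pattern(7) + to_pattern(-5)) & MASK))  # 2
```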

We called it speed binning. You might bin chips for other reasons.

But figuring out what speed a chip runs at is not as simple as it might sound. There are subtle delay faults that cause chips to produce incorrect output when a transition does not arrive at a flip-flop in time to be captured by the clock. There are two types of faults that we model. The first is a slow-to-rise or slow-to-fall transition at a gate output; those are fairly simple to generate tests for. The second is a delay on a path between two flip-flops. The first problem is that there are lots of paths, far too many to test them all. You can address this by using static timing analysis to build a list of the slowest paths in your chip, but that is still far too many to test. And there are certain paths, called false delay paths, which look bad but aren’t testable. The problem is that the slowest path may be untestable, but the next slowest isn’t.
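The core of static timing analysis is a longest-path computation over the logic between flip-flops. Here is a Python sketch on a hypothetical netlist, where each node's delay is in picoseconds and the launch flops' delays stand in for clock-to-output time. It deliberately ignores wire delay, setup time, and the false-path question entirely: it would happily report an untestable path as the slowest one.

```python
from graphlib import TopologicalSorter


def arrival_times(delays: dict[str, int],
                  fanin: dict[str, list[str]]) -> dict[str, int]:
    """Worst-case arrival time (ps) at each node's output."""
    arrival: dict[str, int] = {}
    for node in TopologicalSorter(fanin).static_order():  # drivers come first
        start = max((arrival[d] for d in fanin.get(node, [])), default=0)
        arrival[node] = start + delays.get(node, 0)
    return arrival


def slowest_path(arrival: dict[str, int], fanin: dict[str, list[str]],
                 end: str) -> list[str]:
    """Walk back from an endpoint, always following the latest-arriving input."""
    path = [end]
    while fanin.get(path[-1]):
        path.append(max(fanin[path[-1]], key=arrival.__getitem__))
    return path[::-1]


# Hypothetical netlist: two launch flops, three gates, one capture flop.
delays = {"ff_a": 50, "ff_b": 60, "g1": 30, "g2": 80, "g3": 40, "ff_out": 0}
fanin = {"g1": ["ff_a"], "g2": ["ff_a", "ff_b"], "g3": ["g1", "g2"], "ff_out": ["g3"]}

t = arrival_times(delays, fanin)
print(slowest_path(t, fanin, "ff_out"), t["ff_out"])  # ['ff_b', 'g2', 'g3', 'ff_out'] 180
print("slack at 200 ps clock:", 200 - t["ff_out"])    # slack at 200 ps clock: 20
```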

Automatically generated delay tests are almost universally used now, since functional tests are far too hard to write and, worse, with them it is almost impossible to diagnose the cause of a failure. We used delay tests even during bring-up, when we were looking for slow paths due to bad design or layout problems, and we could diagnose in a few hours what would typically take weeks.

The major reason for divergence is that the process we used was pushing the state of the art. You can plot speed versus power. Hotter parts (more power, often due to leakage) were faster, but since our chips went into our own systems, faster was not better; lower power usage was. Server farms use too much power already. We never made consumer products, so that wasn’t an issue.