Fifteen years ago, Progressive Insurance invented a fake person named Flo to try to convince me to buy insurance. Nine years ago, Amazon invented a fake person named Alexa to make it easier for folks to buy stuff from them while sitting on their couches. Earlier today, fake people named Edgin, Holga, Simon, Doric, and Yendar, who were invented to entertain me, did so. Is this a problem? Is it only now a problem?

Not sure. Were you aware they were fake people? Do you consider yourself “the most skeptical of interlocutors”? Or are you kind of talking about something completely different?

Of course I knew they were fake people. So? Even knowing that the people are fake doesn’t make advertising or movies any less effective.

You’re probably not having a real conversation with an annoying company mascot, nor is it trying to identify your current mood or vulnerabilities. Sure, the article has some handwringing, but the ideas have some validity.

Hell, I’ve known them. Worked with them.

They probably pretended they were into acid jazz and wakeboarding despite not liking either. Ferschlugginer poseurs, one and all.

If it becomes mandatory to clearly identify fake people, I’m not sure what I’d do with that knowledge. It could go either way. I mean, is the probability that a fake person is evil and malicious higher or lower than it is for a real person?

I’m only half joking. When AI passes the point where we really cannot tell, even in complex and intimate interactions, that opens up the possibility of immersive VR where we can interact with a world whose programming excludes psychopaths and assholes.

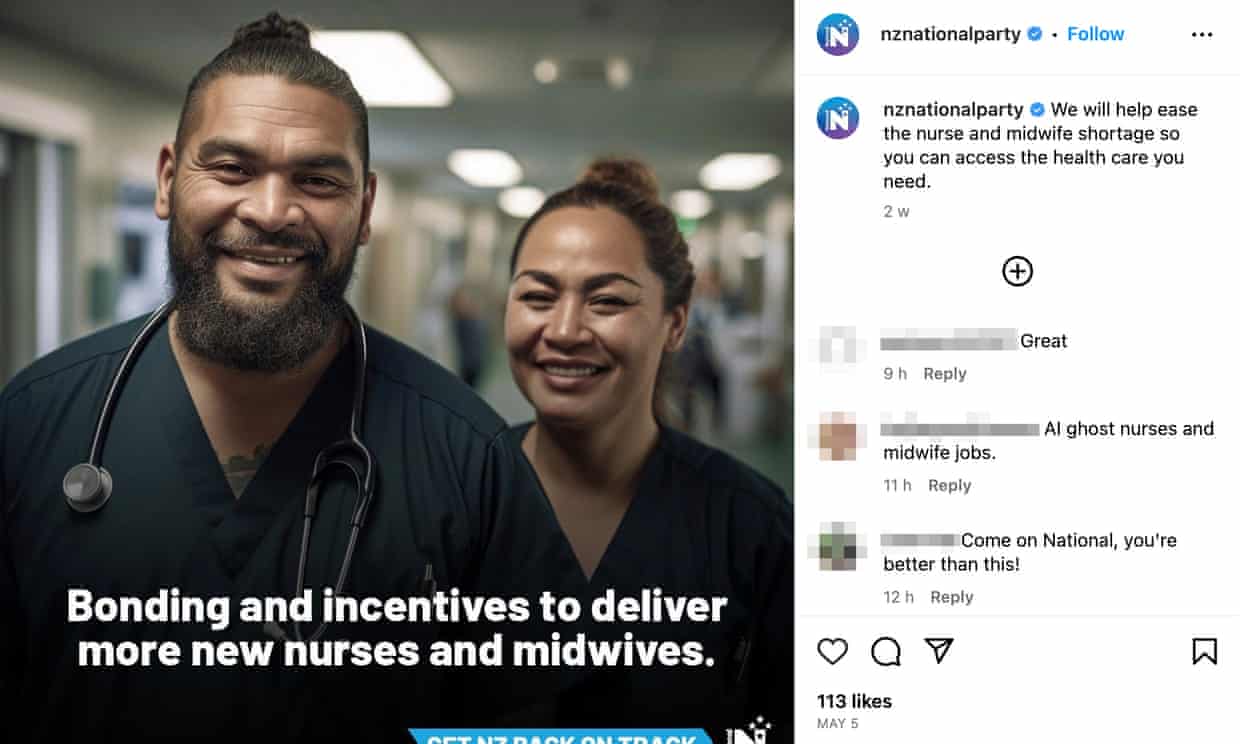

…in NZ we recently had the case of a political party inventing fake brown people for political advertisements. (One of them looks very much like the current co-leader of the Green Party.)

And fake scared white people worried about crime.

And they were planning on a big campaign on this until the uproar it caused and now they’ve stopped. The images were created in Midjourney, so there was a public log of all the images they were either planning on using or didn’t use.

Using stock images of brown people to promote political messaging has long been criticised here. But literally inventing fake tangata whenua to support a political agenda?

There are a ton of ethical and cultural issues to deal with here.

I can see the blowback from creating fake minority people, but the nervous white woman looking out the window is a big shrug for me. Obviously they didn’t find a real nervous woman at the moment she was peering out the window, so who cares whether it’s a unique staged photo, one purchased from Shutterstock, or an AI-generated one?

…another image that was created (but not used) in the campaign:

I find it just as problematic that fake white people who are obviously scared of fake brown people are being used as part of a dog-whistle campaign. It’s the ease of generation that is part of the problem.

You mention stock photography; I just had a look and found only a single similar image on Shutterstock. This isn’t an easy photo to create. As a (former) commercial photographer, I would have charged thousands for this: talent, hair, make-up, lighting, crew, time on set, post-production. Effective propaganda doesn’t come cheap.

Well, I should say it used to not come cheap. Now it can be mass-produced with a few clicks. Ethical issues remain regardless of the colour of the skin. It’s just that creating fake brown people raises more ethical issues.

What’s supposed to be going on with her putting her bag handle around her neck?

So much for AI just cribbing all the stock photos wholesale.

I found a few night-time stock photos of frightened white women clutching purses with a casual Google Image search. Not exact copies, but certainly good enough to say “I’ll stop this sort of thing” on a political mailer. Whether or not the message is shitty is secondary (here) to the point that, again, it really doesn’t matter whether the image was a stock photo, a photo shot for the ad, or AI generated. I mean, the flier or online ad wouldn’t be any better if they used one of the stock photos I saw, would it?

I guess I just don’t see the outrage over the AI factor.

The “make a brown person for the ad” approach is problematic for the same reason it was when U. of Wisconsin got caught photoshopping a black student onto their course catalog. They’re trying to claim an inclusive environment without being able to back it up with even a single unedited photo. The same goes for faking a couple of minority nurses who supposedly support your campaign. On the other hand, the scared-women photos are so obviously staged (the unused ones to the point of being cartoonish) that there’s no legitimate argument to be made that we’re supposed to see those people as supporting the campaign. They’re just obvious props and aren’t presented as anything other than props. Whether those props are AI generated or not seems meaningless.

…well, no. It’s cribbing from professional photographers who have invested thousands of dollars and thousands of hours of time, all without permission or compensation. This was a difficult shot. It takes skill, experience, and the right equipment to take a photo like this. There aren’t similar photos on the stock sites because, for stock photographers, you’d be investing more in the image than the likely return.

The very fact that this photo “cribbed” from the labour and expertise of experienced photographers is an ethical issue in itself. I’m glad you brought it up.

I don’t see a lot of outrage in this thread.

I’m not seeing a material difference here. “Endorsement” isn’t the only issue, and while this particular white-woman image was “staged”, that won’t be the case every single time. As I mentioned, one of the nurses looks very much like the co-leader of the Green Party. This was almost certainly accidental on the part of the prompters. But how many of these “fake people” will bear a close resemblance to real people? (Especially brown people: the “training dataset” probably doesn’t have that many brown people to begin with?)

This is just one of the issues.

“Obviously staged” is a kind of meaningless thing to say about propaganda. Almost all propaganda is staged and designed to provoke an emotional response. Many people won’t look at that image and think “oh, it’s obviously staged.” The intent of the image is to blow the dog whistle. And the target demographic will look at that image and get angry at people like me.

In sum:

- Propaganda is a problem. It makes irrational people do irrational things on command.

- AI artwork makes emotionally persuasive propaganda cheaper to produce, by a factor of hundreds or thousands.

- AI prose production makes emotionally persuasive propaganda cheaper to produce, by a factor of hundreds or thousands.

- Having hundreds or thousands of times as much propaganda as now will essentially drown out all non-AI non-propaganda sources of public discourse.

Seems to me we ought to have a hell of a lot more outrage than is in evidence so far.

Referring to your comment that “And they were planning on a big campaign on this until the uproar it caused and now they’ve stopped”

…the uproar was primarily over the fake tangata whenua.

Well, I guess me and the uproarers are in agreement – one of them feels like a problem, the other feels like a “meh”

Most of those images feel like a good argument that we’re not about to be swamped by oceans of AI-made, professional-grade propaganda images.
