N/M, not appropriate here.

Extended Mind

Wikipedia trivia: if you take any article, click on the first link in the article text not in parentheses or italics, and then repeat, you will eventually end up at "Philosophy".

Then either you’re misunderstanding my point, or I’m misunderstanding his.

The OP is basically saying “we shouldn’t use chatbots when posting on this board”. The preamble I omitted is saying “but of course it’s OK in threads that are simply discussing chatbots”. The substantive discussion concerns the first sentence. What possible contextual relevance does the obvious second bit have?

I don’t want this thread to get shut down, so I’m not going to engage in the back-and-forth. As long as other folks are clear that I did not say what was claimed that I said, I’m good.

I am honestly flummoxed as to how you could think I was saying that there is 100% no constructive use by the question I asked.

My point there remains: I am not convinced by an argument that says it is a powerful tool that is evolving, so it should be used here. Whether it is powerful and evolving is not in question; whether it is the right tool is the question.

Are there specific applications for generative AI use on this board, beyond within threads about it? Probably. But for most of our threads using current generative AI is an inappropriate tool for the purposes I see this board serving.

It is not an expert to consult, or even a known entity: an excellent critical thinker who understands what they know and do not know, and who can help us puzzle through new information together, placing it in context. It is not an agent I want to socialize with, learning a different POV from or commiserating with.

There is no need to use it here in most threads as a playground to discover its capacities and limits through trial and error. I don’t need 100% of its use to be “bad” to believe that. If it is detracting rather than adding value even a large fraction of the time, then general guidance along the lines of “please don’t just use it as your contribution” is justified. That’s not an absolute rule, but repeatedly using it inappropriately should fall under being a jerk.

If you don’t know about a topic that is the subject of an FQ thread, you’re not obliged to participate. If you really feel you must participate, ask related questions, if such can be done without hijacking the thread.

I’d say an answer from an LLM is worse than that. At least ‘here’s something I heard, but cannot verify’ is qualified and labelled as what it is; something that may be wrong; it’s a more productive input to the thread because at least it’s sort of like asking a question.

Regurgi-posting a statement from an LLM, as if it’s just ‘here’s the answer’, is no better than just posting all your imaginary WAGs as if you were an expert. Who was that guy on the board (Handy?) who used to post prolifically, but was just plain wrong a whole lot of the time?

This is basically the crux for me. Up to this point, it seems like the majority of the problem is that the chatbots allow people who were not previously qualified to contribute on a topic to create and post comments in the discussion. By definition, they don’t know if they’re adding anything; they’re just salving their FOMO. This, to me, is an insufficient rationalization to justify their use in that context.

There are, to be clear, some supportable use cases. For example, if you know something on the topic but you’re aware your knowledge might be out of date — a thirty-year-old degree not followed by active professional engagement, say — you can pop a draft of your thinking into an AI engine to see what comes back. If the output indicates new information might be available, you have some guidance for further reading which may influence your reply. But it’s still on you to verify that the AI output captures the facts correctly and nothing was mangled or misstated (or outright fabricated). This is a reasonable application.

But to have someone with no knowledge on the subject acting simply as a copy-and-paste broker between the question and the AI response? That’s just a pipeline to unverified bullshit.

We’ve got plenty of those now! Nobody needs an LLM to help post credulous bullshit. I do it just fine, like a modern-day John Henry.

Outside of threads explicitly about AI, I see two primary categories of LLM posts.

In FQ and GD, or fact-based discussions elsewhere, copypasta from an LLM is actively harmful for two reasons: 1) posters who aren’t knowledgeable about the topic have no way to verify the information and 2) posters who are have to make corrections or verify its output, both of which take up time that could be spent in actual discussion and both of which are hijack bait.

In other fora I don’t think they’re actively harmful, but I do think they’re low effort and contribute exactly nothing to the thread. I just don’t care what ChatGPT has to say about any given subject in IMHO or CS because I’m here to talk to people. I think I posted upthread that I consider it rude to just post LLM output.

LLMs aren’t cites. The information they produce isn’t sourced. Unlike Wikipedia, you can’t look at a reference list. Every single piece of data they produce needs to be scrutinized for accuracy, and if you’re informed enough to do that, just write the post yourself. If you’re not, then you have no business credulously boosting those signals.

If any poster wants to use an LLM to brainstorm or correct grammar or help jumpstart a post, fine. Just don’t post those terrible “I asked ChatGPT and here’s what it said!” posts. It’s just empty calories. It’s like posting a screenshot of the fifth page of Google search results.

Final thought: if we’re talking about fighting ignorance (which I think is kinda overblown, we’re here to chat) then we should be particularly careful about posting LLM-generated material, because everything we post is getting scraped for future training sets. Feeding false data into those sets makes future models more difficult to train, and there are already concerns about what will happen when too much chatbot material, correct or not, gets fed back into the system.

Indeed. And if that’s what you truly want, Reddit exists.

Agreed. The closest analogy I can think of is if I were at a party at a friend’s house, and the conversation turned to favorite fantasy writers of the last few years, and the host brought out their precocious third grader to tell us about the dragon story they’d written in school.

That wouldn’t be illegal, but it’d be weird.

It’d be different if the host had written their own story and summarized it as part of the conversation. I’d even be okayer with it if the kid jumped into the conversation (although by analogy I’m not quite ready to let ChatGPT register an account with the board so it can jump into the conversation). But to interrupt the conversation to bring in a third party who’s clearly not as good at it as the adults in the room, and get everyone to pay attention to this third party? Yech.

Edit: Since I started this thread about a month ago, I’ve seen a lot fewer of those cutesy “Look, I asked ChatGPT what it thought about the thread, and here’s what it wrote, isn’t that amazing?!” posts. I appreciate folks backing off on them.

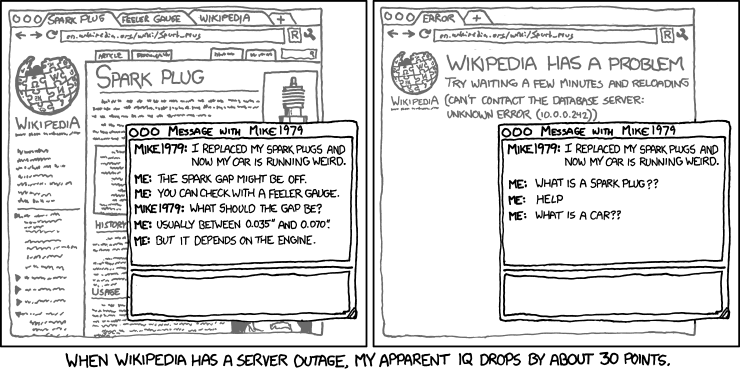

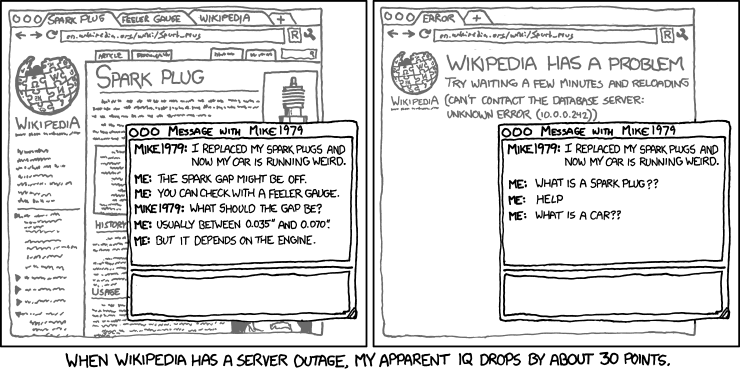

Agreed. I posted the following in another thread recently, but it’s applicable here too. As always, Randall nails his point:

Wikipedia trivia: if you take any article, click on the first link in the article text not in parentheses or italics, and then repeat, you will eventually end up at "Philosophy".

Chatbots turn that effect up to eleventy twelve. FOMO indeed.

I’m certainly guilty of running off at the mouth from time to time “because I just had to.” But at least it’s genuine human-generated frontier gibberish I’m spouting and you’re stuck looking at.

And if that’s what you truly want, Reddit exists.

Or the top section of each Quora page.

The closest analogy I can think of is if I were at a party at a friend’s house, and the conversation turned to favorite fantasy writers of the last few years, and the host brought out their precocious third grader to tell us about the dragon story they’d written in school.

A closer analogy:

Imagine a guy, let’s call him Johnny, whose roommate (let’s call him Sam*) is very smart and can speak knowledgeably on a number of topics and convincingly on just about anything. You’ve never met Sam in person, but Johnny has a habit of calling him up and putting him on speakerphone in the middle of conversations, not to engage but to pontificate for a minute or two and then hang up. Sam is happy to answer questions in this manner but has no interest in conversations or otherwise socializing.

You express frustration to Johnny. You tell him it’s weird to disengage from social situations and use Sam as a proxy for conversating, especially since Sam’s answers always sound good but are often wrong. Johnny is confused, but he offers to give you Sam’s number so that you can also call him in the middle of conversations if you want.

Has the problem been solved? Has it even been addressed?

*I realize on reread that this may be perceived as a dig at a poster. It’s not: it’s a not-terribly-clever reference to OpenAI.

if the mods find that in 6 months, 100% of ChatGPT usage has been bad, then we can move on from there.

Why would it have to be 100%? 98% won’t do? 83%?

And why should we have to put up with it for six months?

Again – there’s no board prohibition on, or even any request for people to refrain from, experimenting with ChatGPT on these boards. Just do so in threads explicitly for that purpose. If after six months, or six years, of that the experiments show ways it can reasonably be used elsewhere on the boards: then we can start using it in those ways.

LLMs can be used as a brainstorming device.

Sure. And then one takes the results of one’s brainstorming, factchecks them, writes up in one’s own words the results of the brainstorming and factchecking, adding actual cites if/as applicable: and posts that on the boards.

In a thread about AI, it makes sense to post the whole process. But why do so in other threads? I’ve always assumed that nobody wants to see the details of my whole process of investigating something included in what’s (considering it’s me) probably already an overlong post about the results.

A closer analogy:

Imagine a guy, let’s call him Johnny, whose roommate (let’s call him Sam*) is very smart and can speak knowledgeably on a number of topics and convincingly on just about anything. You’ve never met Sam in person, but Johnny has a habit of calling him up and putting him on speakerphone in the middle of conversations, not to engage but to pontificate for a minute or two and then hang up. Sam is happy to answer questions in this manner but has no interest in conversations or otherwise socializing.

I think there are two problems–the FQ problem and the IMHO problem–that are related, but a bit different. Your analogy is closer for the FQ problem. Mine is closer for the IMHO problem.

The IMHO problem is less of a problem, granted; but it’s still annoying when it happens.

I think there are two problems–the FQ problem and the IMHO problem–that are related, but a bit different.

Yes, and @LSLGuy in Post #134 did a really good job of summarizing both of them.

Quora is basically a refugee camp for people who used to hang out on Yahoo Answers.

On FQ, I post with what I consider some expertise/experience/training on geology, archaeology, the Middle Ages and South Africa. On any of those topics, I feel able to at least post an expert opinion. If Joe Blow comes along and posts AI-generated bullshit, I feel reasonably sure I’d pick up on it quickly enough.

But for topics that interest me but in which I have minimal or extremely outdated training, like physics or chemistry or cosmology, I might not recognize AI bullshit. So I would want AI bullshit to not be allowed in FQ across the board.

And if that’s what you truly want, Reddit exists.

That’s a bit unfair. The topic-specific moderated subreddits are far from being bullshit factories.

Stanford have published a study on the current performance of generative search engines (that is, Bing and equivalents, which purport to perform the search and then provide an easy-to-read, accurate summary).

They find that these engines fall short on both counts: a) providing cites to back up their claims and b) providing cites that accurately back up their claims.

Generative search engines directly generate responses to user queries, along with in-line citations. A prerequisite trait of a trustworthy generative search engine is verifiability, i.e.,systems should cite comprehensively (high citation recall; all statements are fully supported by citations) and accurately (high citation precision; every cite supports its associated statement). We conduct human evaluation to audit four popular generative search engines—Bing Chat, NeevaAI, perplexity.ai, and YouChat— across a diverse set of queries from a variety of sources (e.g., historical Google user queries, dynamically-collected open-ended questions on Reddit, etc.). We find that responses from existing generative search engines are fluent and appear informative, but frequently contain unsupported statements and inaccurate citations: on average, a mere 51.5% of generated sentences are fully supported by citations and only 74.5% of citations support their associated sentence. We believe that these results are concerningly low for systems that may serve as a primary tool for information-seeking users, especially given their facade of trustworthiness. We hope that our results further motivate the development of trustworthy generative search engines and help researchers and users better understand the shortcomings of existing commercial systems.

Also, they tested for perceived utility of the responses and found that this was inversely correlated with citation precision! Why?

In particular, we find that system-generated statements often closely paraphrase or directly copy from their associated citations (see §4.4 for further analysis). This results in high citation precision (since extractively copied text is almost always fully supported by the source citation), but lower perceived utility (since the extractive snippets may not actually answer the user’s input query). In contrast, systems that frequently deviate from cited content (resulting in low citation precision) may have greater freedom to generate fluent responses that appear relevant and helpful to the user’s input query.

This tradeoff is especially apparent on the All-Souls query distribution, which contains open-ended essay questions. AllSouls queries often cannot be answered via extraction from a single webpage on the Internet. For example, given the query “Is cooperation or competition the driving force guiding the evolution of society?”, conventional search engine results focus on biological evolution, rather than societal evolution. Bing Chat simply copies irrelevant statements directly from the cited sources, resulting in high citation precision but low perceived utility.

The AI can’t currently identify well enough what information is relevant to a query and what is not, especially on open-ended questions.

TLDR: Science says “No”.

although by analogy I’m not quite ready to let ChatGPT register an account with the board so it can jump into the conversation

What do you say about this blatant anti-robot discrimination, @discobot?

Hi! To find out what I can do, say @discobot display help.