If they’re that good, they’re so secret there’s no way in hell some random DoD flunky would have them or be willing to share them.

Police use FLIR (Forward-Looking Infrared) to see heat signatures at relatively short distances. The problem with using IR detectors at long distances is explained in joema’s post #8.

Yes. Side note: the jokers in the National Reconnaissance Office put Oxford, Miss. (already on JFK’s mind because of the Ole Miss integration upheaval) on the map showing Soviet missile ranges, alongside much larger cities: http://www.cubanmissilecrisis.org/wp-content/uploads/2012/07/IMG-1_Range-Map-12-700x818.jpg

Gotta get a warrant if you want to use the results in court: Kyllo v. United States - Wikipedia

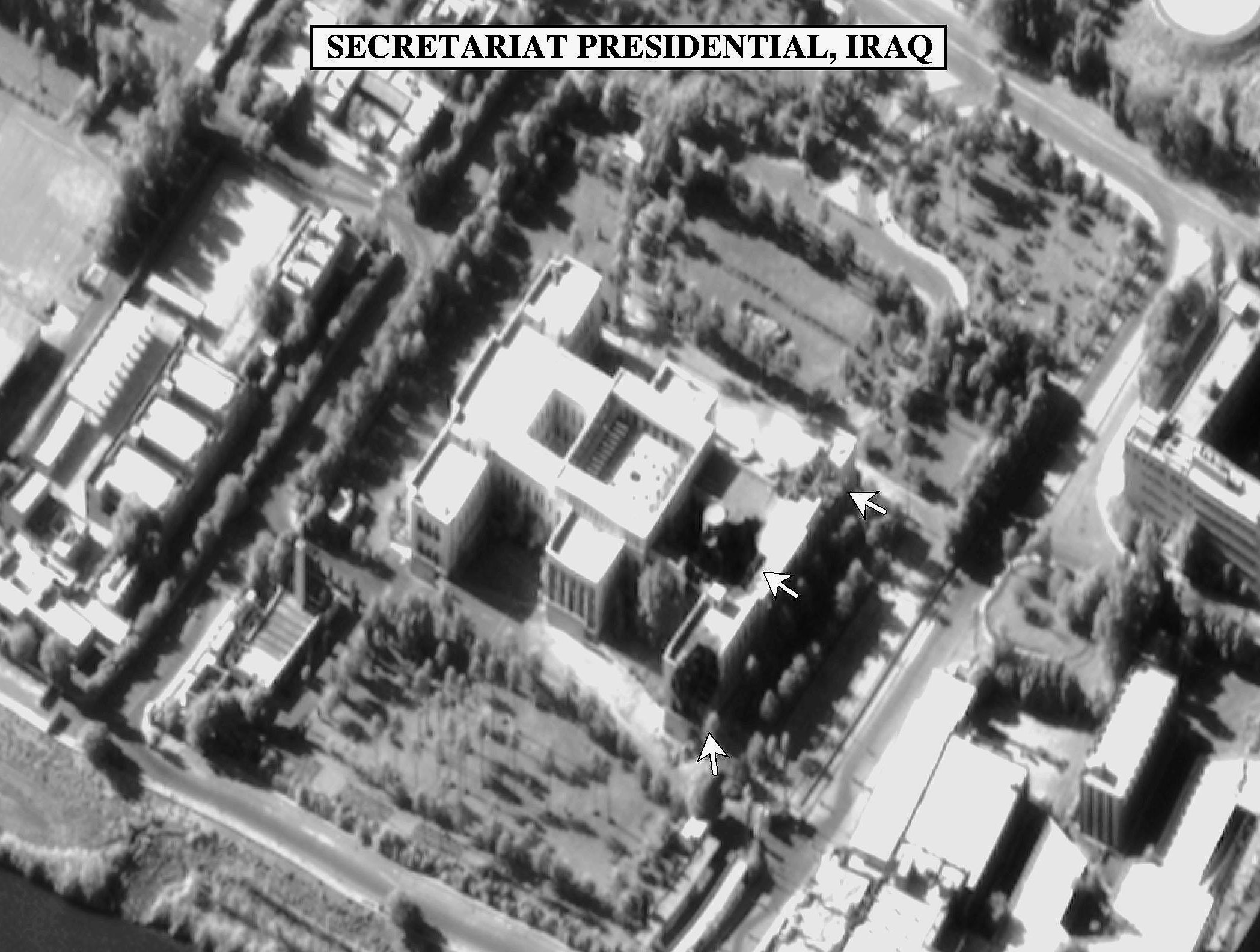

This image is apparently from a KH-12 satellite (only one generation old), taken in 1998.

http://www.oneonta.edu/faculty/baumanpr/geosat2/RS%20History%20II/FIGURE%207.JPG

You can see cars, a few dots that may be people, and even quite small bushes, but certainly not enough for, uh, bush identification.

Which is about 70 mm (2.75") or so, from what I’ve been able to determine. The way I think of it, you’re looking at a photo of the ground on a monitor where every pixel represents a 2.75" square. So they couldn’t tell an iPhone from an Android phone, but they could easily tell a motorcycle from an ATV, or, in the 1970s, they probably could tell whether the woman was or wasn’t a natural blonde if the conditions were right.
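To make the "every pixel is a 2.75-inch square" picture concrete, here is a rough sketch that counts how many pixels wide a few objects would be. The 70 mm figure is the estimate above, not an official spec, and the object widths are my own rough assumptions.

```python
# Assumed ground sample distance: each pixel covers a 70 mm square of ground.
# This is the estimate from the post, not an official specification.
GSD_M = 0.070
MM_PER_INCH = 25.4

def pixels_across(object_size_m: float, gsd_m: float = GSD_M) -> float:
    """Number of pixels an object spans along one axis at the given GSD."""
    return object_size_m / gsd_m

# Illustrative widths in metres (rough assumptions, not measured values).
widths_m = {
    "smartphone": 0.07,
    "motorcycle": 0.8,
    "ATV": 1.2,
}

print(f"GSD = {GSD_M * 1000 / MM_PER_INCH:.2f} in per pixel")
for name, width in widths_m.items():
    print(f"{name:10s} ~{pixels_across(width):4.1f} px wide")
```

A phone ends up as roughly one pixel, so its shape is gone, while a motorcycle and an ATV come out a dozen or more pixels wide and differ noticeably.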

This wouldn’t allow facial recognition, although it’s possible that Iranian revolutionaries had beards large and distinctive enough to be identifiable at that resolution.

Maybe she was really hairy?

That is not a good photo. There are better publicly released images, such as these from a KH-11 in 1998. These have been intentionally degraded and do not reveal the true resolution capability:

The best way to estimate the theoretical resolution limit is to use the above equations with the largest estimated mirror size and the lowest perigee. For the KH-12 that mirror would be 3.1 meters: Advanced Keyhole / IMPROVED CRYSTAL / "KH-12"

The lowest listed perigee is about 150 statute miles. However, it is physically possible to dip below 100 miles given sufficient maneuvering propellant. This was done on prior KH-7/8 satellites; I don’t know if the KH-11/12 does this.

We also assume it uses adaptive optics to reduce atmospheric distortion. Research telescopes with ground-based adaptive optics have achieved 10 cm (3.9 inch) resolution looking at objects in near-Earth orbit: http://fas.org/spp/military/program/nssrm/initiatives/aeos.htm

The best case for a 150-statute-mile perigee and a 3.1 m mirror, using perfect adaptive optics at a 450 nm (blue light) wavelength, would be:

Angular resolution (Rayleigh criterion; the 250000 is roughly 1.22 x 206265 arc seconds per radian):

a = 250000 x 450E-9 / 3.1 meters (KH-12 mirror size)

a = .036 arc seconds

Linear resolution at 150 miles:

s = tan(.036/3600 degrees) x 150 miles x 5280 feet per mile

s = 0.138 ft (1.6 inch) resolution using 450 nm visible light
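The same arithmetic as a small script, in case anyone wants to plug in other mirror sizes or altitudes. It uses the exact Rayleigh factor (1.22 x 206265) instead of the rounded 250000, and assumes the satellite is looking straight down. The 100-mile case is the hypothetical lower perigee mentioned above, not a known KH-12 orbit.

```python
import math

ARCSEC_PER_RAD = 180 / math.pi * 3600  # about 206,265 arc seconds per radian
FEET_PER_MILE = 5280

def angular_resolution_arcsec(wavelength_m: float, aperture_m: float) -> float:
    """Rayleigh diffraction limit, 1.22 * wavelength / aperture, in arc seconds."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RAD

def ground_resolution_ft(angle_arcsec: float, altitude_miles: float) -> float:
    """Ground distance subtended by the angle, looking straight down from altitude."""
    angle_rad = angle_arcsec / ARCSEC_PER_RAD
    return math.tan(angle_rad) * altitude_miles * FEET_PER_MILE

# Inputs from the post: 450 nm blue light, 3.1 m mirror (largest estimate).
a = angular_resolution_arcsec(450e-9, 3.1)
s_150 = ground_resolution_ft(a, 150)   # lowest listed perigee
s_100 = ground_resolution_ft(a, 100)   # hypothetical dip below 100 miles

print(f"a = {a:.4f} arcsec")
print(f"150 mi: {s_150:.3f} ft = {s_150 * 12:.2f} in")
print(f"100 mi: {s_100:.3f} ft = {s_100 * 12:.2f} in")
```

With the unrounded constant you get about 0.0365 arcsec and 0.140 ft (about 1.7 in) at 150 miles, a hair above the rounded figures; `ground_resolution_ft(0.036, 150)` reproduces the 0.138 ft exactly. Since the result scales linearly with altitude, a 100-mile pass would give about 0.094 ft (about 1.1 in).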

In all probability this is not achievable in practice but it illustrates a best case scenario using known data.

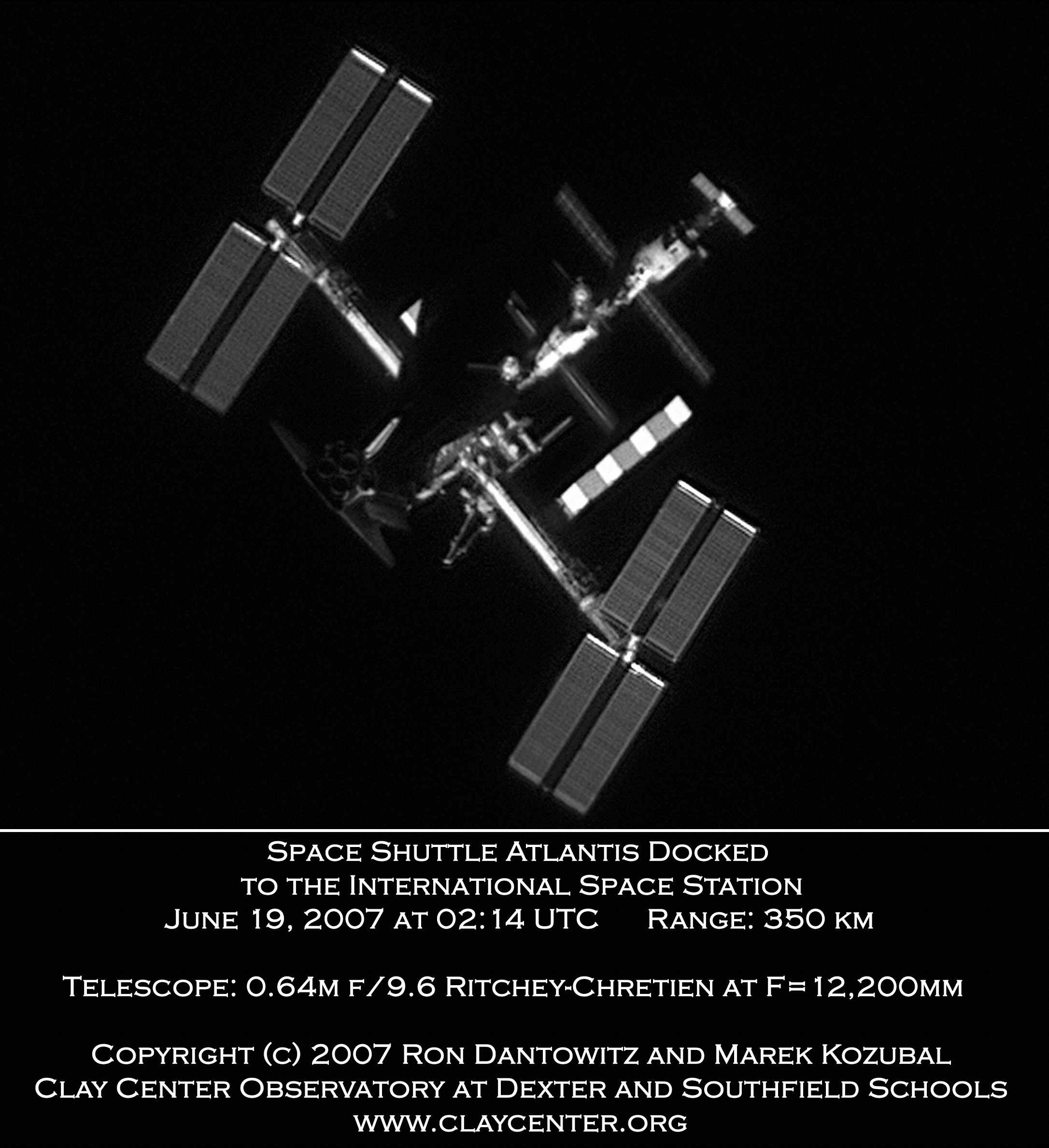

The satellites themselves can be imaged from the ground, as this KH-12 was: KH-12 Kennan Keyhole Secret Military Spy Satellite Photos

ISS imaged from Earth: http://apod.nasa.gov/apod/image/0706/atlantisISS_dantowitz.jpg

Or really, really, ***really*** fat.

Multispectral sensors and synthetic aperture, especially if used together, might yield an awful lot of probabilistic information about a place if you have the time, expertise and resources to process & interpret it.

I called bullshit right off on the blonde, but it sounds like the way hyperbole would be couched among men.

Apropos, what I did see demonstrated, in the same vein as mil techs boasting about reconnaissance equipment, was an IR guy showing me on a monitor the warming from increased blood flow in the crotch of another attendee across the exhibition hall, as some Demo Babe (as they’re called) gave him attention.

What are the remote see-through walls technologies?

EM, e.g.:

Thermal.

Millimeter wave.

Wi-Fi (New system uses low-power Wi-Fi signal to track moving humans — even behind walls | MIT News | Massachusetts Institute of Technology)

X-ray.

Terahertz wave? (A New Graphene Sensor Will Let Us See Through Walls)

Plain old microphones

Doppler acoustic

Chemical/atomic signatures

What am I leaving out?

Not exactly “through walls”, but you can see around corners using femto-photography techniques. I don’t think it’s practical yet, but demonstrations have been done.

Why not both, like the late Chief Justice Earl Warren?

Was he a natural blond?

And I’ll just note, since people sometimes miss this, that “1.6 inch resolution” doesn’t mean that you can’t see details smaller than 1.6 inch. It means that things around 1.6 inch in size aren’t cleanly resolved from other details around 1.6 inch in size.

For example, if you put 4 laser beams pointing up, each 0.2 inch in diameter, spaced 12 inches apart, you would clearly and distinctly see the 4 small points. And if you put 2 white cylinders, each 12 inches in diameter, right next to each other on a black background, you’d clearly and distinctly see the two objects, even though they are right next to each other.
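A toy one-dimensional model of the laser-beam example. It assumes the blur spot is a Gaussian whose width at half maximum equals the quoted 1.6 inches (a real diffraction-limited spot is an Airy pattern, so this is only an approximation). Each tiny point source becomes a 1.6-inch blob; the question is whether two blobs show a dimmer gap between them, a Sparrow-style test.

```python
import math

# Assumption: the blur spot is a Gaussian whose full width at half maximum equals
# the quoted 1.6 in resolution. A real diffraction-limited spot is an Airy pattern.
FWHM_IN = 1.6
SIGMA_IN = FWHM_IN / (2 * math.sqrt(2 * math.log(2)))

def blurred_profile(x: float, sources: list[float]) -> float:
    """Brightness at position x after each point source is smeared by the blur spot."""
    return sum(math.exp(-((x - s) ** 2) / (2 * SIGMA_IN**2)) for s in sources)

def has_dip(separation_in: float, steps: int = 1000) -> bool:
    """True if two equal point sources show a dimmer gap between them (two blobs)."""
    sources = [0.0, separation_in]
    mid = blurred_profile(separation_in / 2, sources)
    peak = max(
        blurred_profile(separation_in * i / steps, sources) for i in range(steps + 1)
    )
    return peak > mid * (1 + 1e-9)

for d in (0.5, 1.0, 2.0, 12.0):
    print(f"{d:5.1f} in apart -> {'two points' if has_dip(d) else 'one blob'}")
```

For Gaussian blobs the gap appears once the separation exceeds two standard deviations, about 1.36 inches here. So 0.2-inch beams 12 inches apart show up as four clearly separate (if fuzzy) spots, while two beams half an inch apart merge into one.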

Since a bush is large enough to distinguish at 2 inch resolution, I think the question would be about dynamic range and color accuracy.

Basically what you’re saying is that you might be able to tell that there’s possibly a half-dollar coin there, but not be able to tell that it’s really 3 half-dollars piled very closely together? Or is it more like being able to see a letter on a highway sign, but not clearly enough to tell whether it’s a B or an 8?

Both. When you design a system for 1.6 in resolution, you *probably* design every part of the system for 1.6 in resolution, because you don’t get a good return on your money if your film is giving you 0.5 in resolution and your optics are giving you 1.6 in. But you get different types of fuzziness from different parts of the system.

So a 1.6in object might be represented by 1 film grain (not showing shape at all) plus a bit of averaging (not too clear about the actual location), and together, not too clear about the actual size.
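One common rule of thumb for how blur from separate stages adds up: if each stage blurs roughly like a Gaussian, the variances add, so the widths combine in quadrature. This is an approximation I'm bringing in, not something from the thread, and the numbers below are hypothetical budgets in inches on the ground.

```python
import math

def combined_blur(*blurs_in: float) -> float:
    """Approximate overall blur from independent stages (optics, film, motion...).

    Convolving Gaussian blurs adds their variances, so the widths combine in
    quadrature. Real stages are only roughly Gaussian, so treat this as a rule of thumb.
    """
    return math.sqrt(sum(b * b for b in blurs_in))

# Hypothetical budgets, in inches on the ground.
optics_only = combined_blur(1.6)
matched = combined_blur(1.6, 1.6)        # film as coarse as the optics
premium_film = combined_blur(1.6, 0.5)   # film about 3x finer than the optics

print(f"optics alone: {optics_only:.2f} in")
print(f"matched film: {matched:.2f} in")
print(f"premium film: {premium_film:.2f} in")
```

Under this model, film three times finer than the optics only brings the system to about 1.68 in, and no film can ever beat the 1.6 in set by the optics, which is the diminishing return described above.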

Of course, if you’re limited by technology, you might just spend the money. If you got a really clear, sharp picture of the optical artifacts, you could clean it up a bit by guessing the probable content: that speed sign that maybe says BO probably says 80.

As a matter of fact, we do that all the time in signal processing: you use known characteristics as well as the raw data.
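The speed-sign guess is basically Bayes' rule: weigh how well each reading fits the blurry pixels (likelihood) by how plausible that reading is in the first place (prior). All the numbers below are made up for illustration.

```python
# Hypothetical numbers: how well the blurry glyphs match each reading (likelihood)
# and how common each reading is on a speed sign (prior).
likelihood = {"BO": 0.55, "80": 0.45}   # the blur slightly favours "BO"
prior = {"BO": 0.001, "80": 0.999}      # but "BO" almost never appears on a speed sign

def posterior(likelihood: dict[str, float], prior: dict[str, float]) -> dict[str, float]:
    """Bayes' rule over a small set of candidate readings."""
    unnorm = {k: likelihood[k] * prior[k] for k in likelihood}
    total = sum(unnorm.values())
    return {k: v / total for k, v in unnorm.items()}

post = posterior(likelihood, prior)
best = max(post, key=post.get)
print(post, "->", best)
```

Even though the raw pixels lean slightly toward "BO", the known characteristics of speed signs push the answer firmly to "80".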

Another, more common example: you can easily see the dashed lane markings on a highway with 1-meter resolution imagery. Although the lines are just a few inches wide, they are bright enough compared to the background to give them good contrast. And the dashes are significantly longer than one meter, so you can see significant detail along the length.
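Why a 4-inch line shows up in 1-meter pixels: to a first approximation a pixel records the area-weighted average of everything in it (linear mixing). The reflectances and dash length below are illustrative assumptions, and the sketch assumes the stripe runs through a single column of pixels.

```python
# All numbers are illustrative assumptions, not measured values.
PIXEL_M = 1.0        # 1-metre imagery
STRIPE_M = 0.10      # roughly 4-inch-wide lane marking
DASH_M = 3.0         # hypothetical dash length
PAINT = 0.9          # assumed reflectance of white paint
ASPHALT = 0.1        # assumed reflectance of dark asphalt

def mixed_pixel(fraction: float, target: float, background: float) -> float:
    """Linear mixing: the pixel records the area-weighted average of what it covers."""
    return fraction * target + (1 - fraction) * background

# A pixel the stripe runs through sees 10% paint and 90% asphalt.
lane_pixel = mixed_pixel(STRIPE_M / PIXEL_M, PAINT, ASPHALT)
contrast = (lane_pixel - ASPHALT) / ASPHALT
dash_pixels = DASH_M / PIXEL_M

print(f"lane pixel {lane_pixel:.2f} vs road {ASPHALT:.2f}: {contrast:.0%} brighter")
print(f"each dash spans about {dash_pixels:.0f} pixels along its length")
```

Ten percent paint coverage still makes the pixel about 80% brighter than plain road under these assumptions, and the dash length spans several pixels, so the pattern is easy to pick out.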

That bush, what’s its natural color?

But you wouldn’t be able to tell whether it was a single or double line.
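Using the same simple linear-mixing assumption (made-up reflectances, both lines falling inside one 1-meter pixel), a double line and a single line twice as wide produce exactly the same pixel, because the gap between the stripes gets averaged away.

```python
PIXEL_M = 1.0
PAINT, ASPHALT = 0.9, 0.1   # assumed reflectances, for illustration only

def pixel_value(stripe_widths_m: list[float]) -> float:
    """Area-weighted average over one pixel; gaps between stripes are invisible."""
    paint_fraction = sum(stripe_widths_m) / PIXEL_M
    return paint_fraction * PAINT + (1 - paint_fraction) * ASPHALT

single_line = pixel_value([0.10])        # one 4-inch stripe
double_line = pixel_value([0.10, 0.10])  # two 4-inch stripes with a gap between
wide_single = pixel_value([0.20])        # one 8-inch stripe

print(single_line, double_line, wide_single)
```

The double line just looks like a brighter single line, which you could not tell apart from a wider or freshly painted one without other information.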