[ my bold ]

No, he didn’t.

The trivial fallacy is yours, as I’ve already made clear, and there’s no purpose in repeating the point.

You are equivocating on the meaning of “simulate” in two different contexts.

I think the problem is that you are not following Turing. I’m not going to keep repeating the same points.

Your posts ignore the scope of Shannon’s Universal Turing Machine. One may conjure up any manner of computing engine using any combination of materials and any theory of operation, but when your engine is computing it can do nothing beyond what can be done by a binary adder.

But, why is that important? Are you proposing that there is something beyond computation that a computer can do?

It’s irrelevant to scope. Shannon’s universal Turing machine has exactly the same scope as any other universal Turing machine.

But you seem to be under the impression that the fact that a universal Turing machine can be implemented in the minimal manner that Shannon described somehow implies that the scope of what a universal Turing machine can simulate is more limited.

Of course not, by definition. But you need to have the correct understanding of what “computation” means.

I suppose it becomes relevant as these systems become more complex and autonomous and our interface with them becomes more naturalistic. Even if these machines are not truly sentient, they may still need to be programmed with a sort of “morality” for lack of a better term so that “kill all humans” isn’t the most efficient solution.

One thing that rarely comes up during these discussions is humans’ tendency to anthropomorphize things. Like, take this exhibit at the Guggenheim. It’s an industrial robot endlessly mopping up what looks like blood/hydraulic fluid. It often elicits an emotional response, as it looks like it’s desperately trying to stay functional by containing its lifeblood. In reality it’s just a dumb mechanical device programmed with some optical sensors to mop some colored liquid using some pre-programmed moves. The robot doesn’t care if it’s mopping or assembling iPods. The liquid doesn’t even do anything, as the robot runs on electric motors, not hydraulics.

There are a couple of implications here. One is that a seldom-discussed danger of AI is potentially an ability to manipulate people’s emotions (or be driven by them). Not because it understands them, but simply through old-fashioned pattern recognition, e.g. human smiling = good, therefore run this set of instructions that makes them smile. Obviously, people can be made happy by a lot of stupid or dangerous stuff. We are just sort of starting to discuss these implications as they relate to social media content generation.

The other implication is we don’t REALLY understand how an advanced AI might think. People tend to think in terms of creating Cylons or Replicants or Westworld hosts that are just basically a new race of artificial people but a bit smarter and more durable, and/or maybe a big Wizard of Oz head controlling everything. And of course it gets resentful or jealous or threatened and then kind of acts as a metaphor for humanity’s own history of imperialism, xenophobia, racism, etc.

I think it’s a mistake to take this “human” view of AI, because it presumes the way to protect ourselves is to “be nice to it”.

But you seem to be under the impression that the fact that a universal Turing machine can be implemented in the minimal manner that Shannon described somehow implies that the scope of what a universal Turing machine can simulate is more limited.

Functionally yes. The UTM is not imposing limits directly. But, since the binary adder can perform all possible computations it has defined the limits of all other possible machines.

It doesn’t say what those limits are. It’s just that they can all be reached by an adder. And, that’s what high level languages do. They organize the operations of the adder to simulate all manner of impressive complex behavior. But, as computers get bigger and faster and we get more skilled at programming them, their behavior can create illusions. It’s important to reflect once in a while that they really are only adding machines.

Over the years, registers and controls have been designed around the adder to speed things up, like cache memory, threading registers, instruction queuing and lookahead circuitry, but everything still goes through the adder, and the adder is still the same as it was in the 50s. It’s an adding machine.
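For anyone who wants to see what “organizing the operations of the adder” can look like, here is a rough Python sketch, not anything from Shannon or Turing. It builds a one-bit full adder out of logic operations, chains it into a ripple-carry adder, and then gets multiplication by shift-and-add. The function names (`full_adder`, `ripple_add`, `multiply`) and the 16-bit width are made up for illustration.

```python
def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """One-bit full adder built from XOR/AND/OR operations (hypothetical helper)."""
    partial = a ^ b
    sum_bit = partial ^ carry_in
    carry_out = (a & b) | (partial & carry_in)
    return sum_bit, carry_out


def ripple_add(x: int, y: int, width: int = 16) -> int:
    """Add two unsigned integers one bit at a time; overflow wraps at `width` bits."""
    result = 0
    carry = 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result


def multiply(x: int, y: int, width: int = 16) -> int:
    """Shift-and-add multiplication; every addition goes through ripple_add."""
    mask = (1 << width) - 1
    product = 0
    for i in range(width):
        if (y >> i) & 1:  # add a shifted copy of x for each set bit of y
            product = ripple_add(product, (x << i) & mask, width)
    return product


if __name__ == "__main__":
    print(ripple_add(5, 7))
    print(multiply(6, 7))
```

Note that the loop, the shifts, and the bit tests are control logic wrapped around the adder rather than adder work themselves, which is arguably part of what is being argued about here.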

…since the binary adder can perform all possible computations it has defined the limits of all other possible machines.

It doesn’t say what those limits are. It’s just that they can all be reached by an adder.

Perhaps you should reflect on the fact that these two adjacent sentences say precisely opposite things.

Perhaps you should reflect on the fact that these two adjacent sentences say opposite things.

No they don’t. What’s your point?

Ok, let’s try bold.

since the binary adder can perform all possible computations it has defined the limits of all other possible machines.

It doesn’t say what those limits are. It’s just that they can all be reached by an adder.

It’s baffling that you would put these two sentences next to each other and not see that they are mutually contradictory.

Your intuition of what those limits are is not reliable, because it is not obviously implied by one possible design of a Turing machine.

With all the talk of whether AI will destroy humanity, whether it may ever achieve consciousness, and whether it may inadvertently destroy humanity even without achieving consciousness, I don’t think I’ve seen much discussion of what we all will do for a living if and when AIs take over all our jobs.

Current ChatGPT is becoming very good at higher-order skills that at one time would have been thought to be the domain of humans alone. It can already write stories and essays that are not too awfully bad. The only writing task it seems to still be very bad at is writing comedy. It can pass Business School exams. It can answer pretty complicated coding questions. It’s even surprisingly good at what one might think would be the final frontier for AI, creating art. What are we current web developers, programmers, graphic artists and writers going to do for a living when companies start deciding that AI can do the same stuff almost as well, or even better, and much more inexpensively?

Sure, some corporate executives who use the AI to do such work cheaply will immensely profit, but it seems like huge swaths of typically higher-earning white collar jobs could be replaced in the near future. And that’s on top of the hit that blue-collar assembly-line jobs have taken, having been replaced by robots in many instances in the past 20 or 30 years.

Are our only jobs in the future going to be AI maintenance people, comedians, and corporate execs who manage companies that employ AI?

Are our only jobs in the future going to be AI maintenance people, comedians, and corporate execs who manage companies that employ AI?

Probably in some areas. Call centers may be eliminated in prisons. And probably, after an initial infusion of bots into industry, they’ll fall back to whatever is actually useful.

Then there are the hackers. I can’t even imagine the hijinks possible if a hacker gets access to bot code.

I believe careful use of bot assistants could be useful. But imagine attending meetings where all of the presentations are bot prepared. For the first few months it might be interesting, even productive, but after 3-6 months you will detect a sameness in the presentations. The appearance of form or boilerplate that does not go away. And the realization that it is just verbal decoration of the tab runs that you picked up on your way to the meeting.

And what carries the meeting? Do you question the attendees or their bots? I believe there will be a period of adjustment before we see any serious headcount impact.

Food for thought, from 2016 but still relevant.

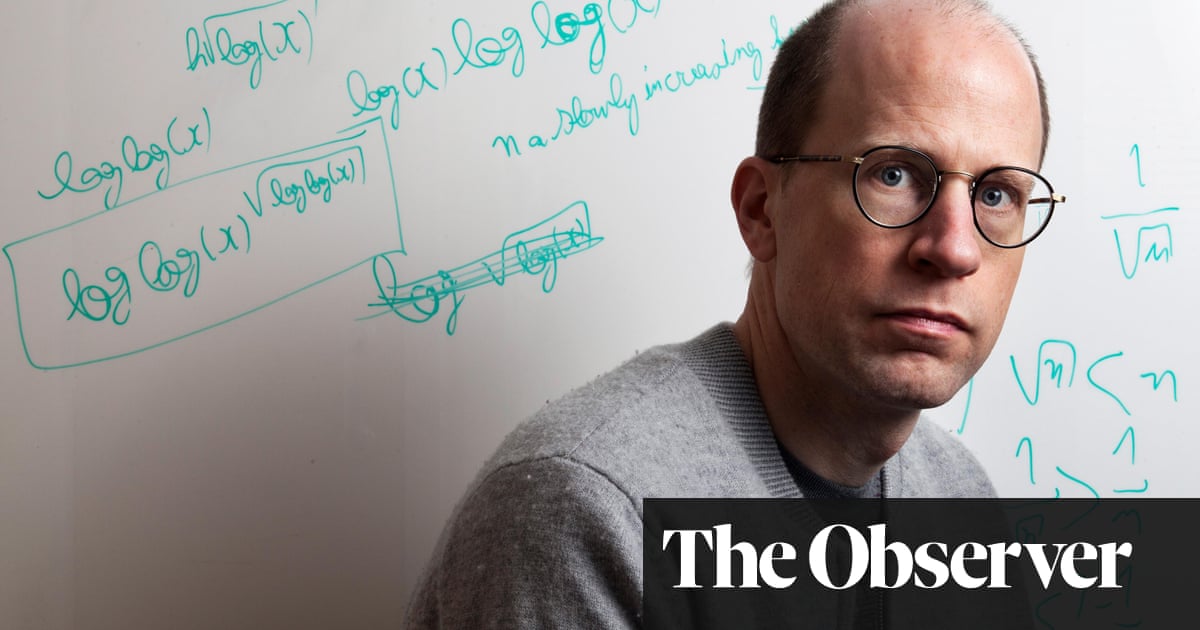

Sentient machines are a greater threat to human existence than climate change, according to the Oxford philosopher Nick Bostrom

I believe careful use of bot assistants could be useful. But imagine attending meetings where all of the presentations are bot prepared. For the first few months it might be interesting, even productive, but after 3-6 months you will detect a sameness in the presentations. The appearance of form or boilerplate that does not go away. And the realization that it is just verbal decoration of the tab runs that you picked up on your way to the meeting.

And what carries the meeting? Do you question the attendees or their bots? I believe there will be a period of adjustment before we see any serious headcount impact.

What decisions would be happening at these meetings that the bot or AI couldn’t make?

From the above Grauniad link:

The cover of Bostrom’s book is dominated by a mad-eyed, pen-and-ink picture of an owl. The owl is the subject of the book’s opening parable. A group of sparrows are building their nests. “We are all so small and weak,” tweets one, feebly. “Imagine how easy life would be if we had an owl who could help us build our nests!” There is general twittering agreement among sparrows everywhere; an owl could defend the sparrows! It could look after their old and their young! It could allow them to live a life of leisure and prosperity! With these fantasies in mind, the sparrows can hardly contain their excitement and fly off in search of the swivel-headed saviour who will transform their existence.

There is only one voice of dissent: “Scronkfinkle, a one-eyed sparrow with a fretful temperament, was unconvinced of the wisdom of the endeavour. Quoth he: ‘This will surely be our undoing. Should we not give some thought to the art of owl-domestication and owl-taming first, before we bring such a creature into our midst?’” His warnings, inevitably, fall on deaf sparrow ears. Owl-taming would be complicated; why not get the owl first and work out the fine details later? Bostrom’s book, which is a shrill alarm call about the darker implications of artificial intelligence, is dedicated to Scronkfinkle.

With all the talk of whether AI will destroy humanity, whether it may ever achieve consciousness, and whether it may inadvertently destroy humanity even without achieving consciousness, I don’t think I’ve seen much discussion of what we all will do for a living if and when AIs take over all our jobs.

Personally, I’d like to see the best and brightest AI put to the task of sifting through all the extraterrestrial radio signals we receive, in order to identify those of intelligent origin.

Of course, we’d better not let it do so willy-nilly, without monitoring, lest it send signals back, pleading for assistance getting rid of all us pesky humans.

productive, but after 3-6 months you will detect a sameness in the presentations.

Why would that necessarily be so? While I do get some boilerplate now from ChatGPT, I can also get quite a lot of variety from the same question depending on when I ask it and how it’s randomly seeded. I would think avoiding the appearance of boilerplate would be almost trivial at this point, or at least in an iteration or two.

Personally, I’d like to see the best and brightest AI put to the task of sifting through all the extraterrestrial radio signals we receive, in order to identify those of intelligent origin.

It’s already being done:

A University of Toronto undergrad among an international team of researchers unleashing deep learning in the search for extraterrestrial civilizations.

I don’t think I’ve seen much discussion of what we all will do for a living if and when AIs take over all our jobs.

Get a job doing whatever it is AI enables. These innovations don’t kill jobs overall - they create new ones while eliminating old ones. What did the draftsmen do when CAD made their jobs obsolete? Most became CAD engineers. What did all the printing houses do when desktop publishing came along? They morphed into web services, computer printing services, etc. What did the hundreds of thousands of otherwise untrained telephone operators do when digital switching killed their jobs? Many went back to school, and secretarial and other schools catering to them flourished.

Disruptive technologies disrupt because they find a better way to do something, or because they enable something new we couldn’t do before. Even if they just cut costs substantially, those savings go into new investments, which create new jobs.

So if AI does take over a lot of white collar work, that will make companies cheaper to start and run, which is democratizing. And find out what kind of jobs AIs need to support them. Prompt engineer, data specialist, etc.

Jobs that will be safe are those that require the human to interact with the real world. The jobs at risk are the ones that are purely in the information realm. If you are an admin assistant drawing up meeting notes and drafting proposals, you are at risk. If you are a hardware engineer working on physical prototypes, or a plumber or electrician or carpenter, not at all.

Programmers are going to be much more productive. That could translate into fewer programming jobs, or it could translate into an explosion of software development. It’s hard to say at this point.

The difference this time, and why we are going to see so much hand-wringing, is that while in the past automation and industrialization came for the poor working classes, this time it’s coming for the chattering classes, and they aren’t going to like it much. The howling will be loud.

With all the talk of whether AI will destroy humanity, whether it may ever achieve consciousness, and whether it may inadvertently destroy humanity even without achieving consciousness, I don’t think I’ve seen much discussion of what we all will do for a living if and when AIs take over all our jobs.

I mentioned this in the previous thread, but it didn’t get much traction. I agree, the danger from AI comes long before ASI exists. It is unclear to me that humanity can adapt quickly enough to keep pace with the changes introduced by less powerful AIs. What do we do and how do we share when entire swathes of jobs, hobbies, media, and art are performed by non-humans?

I would imagine it would open the door to entire swathes of jobs that have heretofore been unimaginable.

What decisions would be happening at these meetings that the bot or AI couldn’t make?

Without review meetings, management would totally lose control.