How are you defining high definition? Because at one point, 1080p was high definition. And virtually everyone has had that for a long time.

I think 4k was being shown at Best Buy. I was very surprised at the sharpness and clarity. Best Buy had several TVs turned on, and the one playing high def stood out.

I’ve never seen 8k.

Keep in mind that those sample 4k videos are specifically chosen to show how good it can look. I doubt that in reality, watching a recording of a movie or TV show, you would notice the difference.

Yep. Kinda like the early CDs, which were remastered analog recordings made for LPs.

ISTM not much material today is being created using end-to-end 4K-capable hardware, much less 8K-capable hardware. And anything that dates from before a couple of years ago could not possibly be native 4K from end to end.

I’ll wait a while and see if more movies get released in 4K.

I would need a fiber internet connection to stream even 4k. My neighborhood will get it eventually.

For what it’s worth… 4k has been around for quite a while now… it’s no longer some newfangled tech, and it’d be a very rare modern movie that gets shot without 4k. As for older movies shot on physical film, their resolution should still easily handle a 4k digital transfer as long as someone bothers to rescan/remaster it. (Maybe @commasense has more info there?) In my experience, though, old movies rescanned for 4k typically just get you more film grain and optical artifacts, not necessarily more detail… maybe the film media itself was capable of higher resolution, but lenses back then weren’t great. You end up seeing more detail of the process rather than of the film.

You can see this by comparing 1080p vs. 4k versions of an older movie like Blade Runner (the original) with 1080p vs. 4k versions of the new one, for example.

Whether movies get released in 4k is more a matter of DVDs and Blu-Rays dying than of any inherent limitation of cameras or TVs. I would expect there to be fewer and fewer, not more, 4k Blu-Rays released over time. That market was always niche and is probably getting even smaller now that many retailers have phased out physical media. Most people have just moved to streaming. And you absolutely don’t need fiber to stream 4k: https://help.netflix.com/en/node/306

That’s 15 megabits per second. Most fiber is 1000 Mbps or higher. Even cheap home DSL plans are like 25 Mbps or so these days. Starlink is faster than that. Many cell phone plans surpass that. It’d take a very crappy connection to NOT be able to stream 4k.
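A quick back-of-the-envelope check of that claim, assuming roughly 15 Mbps per 4K stream (the Netflix figure) and nominal link speeds with no protocol overhead, Wi-Fi losses, or congestion. The function name is just illustrative:

```python
# Back-of-the-envelope: how many simultaneous 4K streams fit in a link.
# Assumes ~15 Mbps per stream and ignores overhead, Wi-Fi losses, and congestion.
STREAM_4K_MBPS = 15

def streams_supported(link_mbps: float, per_stream_mbps: float = STREAM_4K_MBPS) -> int:
    """Whole number of 4K streams that fit in the link's nominal bandwidth."""
    return int(link_mbps // per_stream_mbps)

# Connection speeds mentioned above, in megabits per second.
for name, speed in {"cheap DSL": 25, "fiber": 1000}.items():
    print(f"{name} ({speed} Mbps): {streams_supported(speed)} stream(s)")
```

Even the cheap DSL tier clears one stream, and a gigabit line has room for dozens.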

Anyway… is it a huge difference? No, not often. I wouldn’t argue that you need it, especially if your DVD/BR player or TV already does some good upscaling. But there are no real hurdles to getting it if you really want to see every last wrinkle or grain of sand in your movies.

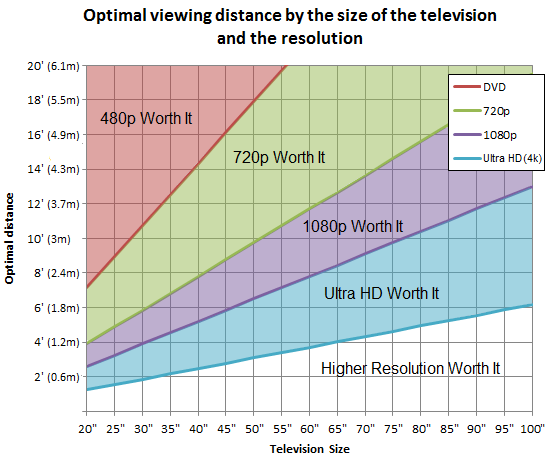

It only really matters if you have a pretty big TV AND you sit really close to it. Otherwise, many eyes (especially in old age) can’t really see the difference anyway: TV Size to Distance Calculator and Science - RTINGS.com

It’d only look pixellated if they used the absolute simplest brain-dead algorithm to do the upscaling. I don’t think nearest-neighbor interpolation was commonly used in anything, by the time Blu-Ray was invented.
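For illustration, here’s a minimal sketch of nearest-neighbor upscaling on a tiny grayscale image stored as a list of rows (a toy representation assumed for this example, not how any real player stores frames). Every source pixel just becomes a solid block, which is exactly why the result looks pixellated:

```python
def upscale_nearest(image: list[list[int]], factor: int) -> list[list[int]]:
    """Upscale a 2D grayscale image by an integer factor using nearest-neighbor.

    Each output pixel copies the source pixel it falls inside, so every
    source pixel turns into a factor x factor block of identical values.
    """
    out = []
    for row in image:
        # Repeat each pixel horizontally...
        wide_row = [pixel for pixel in row for _ in range(factor)]
        # ...then repeat the widened row vertically.
        for _ in range(factor):
            out.append(list(wide_row))
    return out

tiny = [[0, 255],
        [255, 0]]
for row in upscale_nearest(tiny, 2):
    print(row)
```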

If they used a quick-and-dirty algorithm of the sort that was common at the time, the image would look fuzzy, instead of pixellated. Which still wouldn’t be great, but it’d be not-great in a different way.
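The “fuzzy” look comes from interpolation. As a hedged sketch, here’s plain linear interpolation along a single row of pixels (real scalers work in 2D, e.g. bilinear or bicubic, but the softening effect is the same idea). A hard black-to-white edge turns into a ramp of in-between grays instead of a crisp step:

```python
def upscale_linear_1d(row: list[float], factor: int) -> list[float]:
    """Upscale one row of pixels by an integer factor with linear interpolation.

    New samples are placed between the original ones and take a weighted
    average of their two neighbours, so sharp edges become gradual ramps.
    Returns (len(row) - 1) * factor + 1 samples.
    """
    out = []
    for i in range(len(row) - 1):
        left, right = row[i], row[i + 1]
        for step in range(factor):
            t = step / factor                  # fractional position between samples
            out.append(left * (1 - t) + right * t)
    out.append(float(row[-1]))                 # keep the final original sample
    return out

edge = [0, 0, 255, 255]                        # a hard black-to-white edge
print(upscale_linear_1d(edge, 4))
```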

If they were really fancy-schmancy, they’d do that, but then also use some algorithm for sharpening the image. How well that works depends on how similar the actual image is to the sample images they used to design their sharpening algorithm. It might be good enough to look close enough to right, or it might be completely weird and wrong.
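The post describes sharpeners tuned against sample images; as a much simpler stand-in, here’s a classic unsharp mask, which is a fixed, hand-designed filter and not a trained one. It adds back a scaled difference between a row and a blurred copy of it. On a soft edge that pushes the dark side darker and the bright side brighter, which reads as sharper but also creates the over/undershoot “halo” that can make sharpened images look wrong. The 3-tap blur and the `amount` parameter are arbitrary choices for this sketch:

```python
def box_blur_1d(row: list[float]) -> list[float]:
    """3-tap moving average; the end samples are repeated at the borders."""
    padded = [row[0]] + list(row) + [row[-1]]
    return [(padded[i - 1] + padded[i] + padded[i + 1]) / 3
            for i in range(1, len(padded) - 1)]

def unsharp_mask_1d(row: list[float], amount: float = 1.0) -> list[float]:
    """Sharpen by adding back the detail that blurring removes.

    sharpened = original + amount * (original - blurred)
    Results are clamped to the 0-255 range of an 8-bit pixel.
    """
    blurred = box_blur_1d(row)
    return [min(255.0, max(0.0, x + amount * (x - b)))
            for x, b in zip(row, blurred)]

soft_edge = [60, 60, 100, 140, 180, 180]       # a gentle gray ramp
print([round(v, 1) for v in unsharp_mask_1d(soft_edge)])
```

The values just before and after the ramp dip below 60 and overshoot 180; that exaggeration is what the eye reads as “crisper,” and, when overdone, as haloing.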

(Sorry not to get back to this sooner, especially after @Reply’s nice call out to me, but I lost track of it and then got busy with other stuff.)

Sorry to have to contradict you, @LSLGuy, but @Reply is right on both counts. Digital cameras have been advancing very quickly, and I would guess that no major features have been shot at less than 4K resolution for more than ten years. Red, the company that introduced the first popular, low-cost 4K camera, had a 6K model in 2015. Shooting at 6K and 8K, and even beyond, has been common for years.

(Point of reference: Star Wars Episode 1 was shot at 1920x1080, less than 2K, in 2002.)

Of course, most cinema projectors and home TVs are only 4K, but it has been known since the film days that shooting at higher resolution than your display system (known as headroom) yields noticeable benefits.

Also, even though the initial standard for digital cinema was 2K (2048×1080), which the experts at the time felt was equivalent to standard 35mm exhibition, it also allowed for 4K, which is more desirable for wider aspect ratios.
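To see why the extra width matters for wide formats, assume a scope picture (about 2.39:1) has to fill the full width of the container; the usable picture height is then just width divided by aspect ratio. A rough sketch, assuming a 4096×2160 4K container alongside the 2048×1080 2K one (actual DCI framing rounds these figures slightly differently):

```python
# Rough arithmetic: how many picture rows a wide (scope) image gets when it
# fills the full width of a 2K or 4K digital-cinema container.
# Assumptions: 2K = 2048x1080, 4K = 4096x2160, scope ~= 2.39:1.
CONTAINERS = {"2K": (2048, 1080), "4K": (4096, 2160)}
SCOPE_RATIO = 2.39

def scope_height(width: int, ratio: float = SCOPE_RATIO) -> int:
    """Approximate active picture height for a full-width image of this ratio."""
    return round(width / ratio)

for name, (width, height) in CONTAINERS.items():
    active = scope_height(width)
    print(f"{name}: {width}x{active} picture, {height - active} unused rows")
```

At 2K a scope picture gets well under 1080 rows of real vertical detail, which is presumably part of why the 4K option was more attractive for wider aspect ratios.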

This is correct in the sense that end-to-end 4K digital production probably does not pre-date the Red One 4K camera, announced in 2006 and shipped in 2007. Even then, I suspect that many early films weren’t end-to-end 4K, because 4K editing hardware probably wasn’t widely available in the first few years. But films shot at 4K and initially released at 2K can be remastered for 4K.

But as @Reply says, the image quality of standard 35mm film (to say nothing of standard 70mm or IMAX) is generally considered to match or exceed 4K, depending on the stock, lighting, and processing. Some grainy stocks won’t look great, but there have been great advances in de-graining and up-resing technology in the past 20 years. So there shouldn’t be any excuses for bad remastered 4K versions.

[Emphasis mine.] Here I think you’re right. Although I’m sure there is plenty of full-8K content out there, it’s a tiny fraction of the amount of 4K material. In terms of visible differences, 8K is useful for IMAX-type* theaters, digital planetariums, and special venues like the MSG Sphere in Las Vegas. That level of resolution is not needed for most ordinary cinemas, much less most home TVs, or even high-end home theaters.

Take a look at the chart in @Reply’s post above. For anything over 4K (UHD) you have to be closer than 5 feet from an 80-inch screen to see the difference between that and 4K. Nobody does that! We have a 77-inch Sony, and sit at just about 10 feet, which is right on the cusp of being able to see the improvement over HD.
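The numbers above are consistent with a common rule of thumb: 20/20 vision resolves detail down to about one arcminute, so once a single pixel subtends less than that, the eye can’t tell it apart from its neighbours and extra resolution stops being visible. A rough sketch, assuming a 16:9 screen and that one-arcminute threshold (published charts, RTINGS’ included, may use slightly different assumptions):

```python
import math

ONE_ARCMINUTE = math.radians(1 / 60)   # assumed ~20/20 visual acuity threshold

def max_useful_distance_ft(diagonal_in: float, horizontal_px: int,
                           aspect: tuple[int, int] = (16, 9)) -> float:
    """Distance (feet) beyond which single pixels are smaller than 1 arcminute.

    Sit farther away than this and the eye can't resolve these pixels,
    so a higher resolution stops looking visibly sharper.
    """
    w, h = aspect
    screen_width_in = diagonal_in * w / math.hypot(w, h)
    pixel_pitch_in = screen_width_in / horizontal_px
    return pixel_pitch_in / math.tan(ONE_ARCMINUTE) / 12

# 80-inch screen: beyond this distance 4K pixels vanish, so 8K can't look better
print(f"80-inch, 4K pixels: {max_useful_distance_ft(80, 3840):.1f} ft")
# 77-inch screen: beyond this distance 1080p pixels vanish, so 4K can't look better
print(f"77-inch, 1080p pixels: {max_useful_distance_ft(77, 1920):.1f} ft")
```

This gives roughly 5.2 feet for 4K on an 80-inch screen and roughly 10 feet for 1080p on a 77-inch one, in line with the figures in the post.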

*IMAX is 4K, and AFAIK will not be going beyond that. (Their earliest digital systems in multiplexes were 2K, and some may still be out there.)

Despite the rest of your post being incredibly informative, I am most delighted by now knowing how Max Headroom got his name.

Actually, that was probably a different sense of “headroom,” from composing portraits, i.e., leaving enough space at the top of the picture for the head. Not too close to the top of the frame, and not too far.

At a certain point, the law of diminishing returns sets in. CD audio is pretty much “good enough” for most people. Then there’s content. How important is it to view Meet the Fokkers in 4K instead of 1080p?

Wiki also says…

The character’s name came from the last thing Carter saw during a motorcycle accident that put him into a coma: a traffic warning sign marked “MAX. HEADROOM: 2.3 M” (an overhead clearance of 2.3 metres) suspended across a car park entrance

Must have been a really tall motorcycle if that sign was what did it…

I had the same thought.

2.3m is ~7.5 feet.

Maybe the truck just in front of him was brought to an abrupt halt when it hit the sign or the low ceiling. Then he smacked into the back of the truck.

Or perhaps he rides his motorcycle standing on the seat. I’ve seen it done on the street around here. Idjits.

Yeah, it was dark humour on my part. He doesn’t say the sign was what hit him, just that it’s the last thing he remembers…

The corollary to the backwards compatibility of Blu-ray players relying on independent hardware to play DVDs is that sometimes, the DVD part fails while the Blu-ray part works fine (or vice versa, I suppose).

When my Blu-ray player wouldn’t play one of my discs, I figured it was a problem with the particular disc and I watched something else. A few weeks later, I had another disc that seemed to fail so I again picked something else. A few more weeks later, a third disc failed. That’s when I figured out the seemingly obvious connection that Blu-ray discs work and DVDs don’t in my player. I’m glad new players are still widely available because I like my physical media collection.

While it can of course go either way, I’d expect that it’d be more common for the Blu-ray part to fail while the DVD part still works, just because Blu-ray requires more precision. Of course, there are also some parts in common, like the servos that move the read head, so it’s also possible for it to all fail at once.

<tangent>

That makes me wonder… since they use different lasers and wavelengths, is it possible to burn both Blu-ray and DVD data onto the same physical disc?

Why… yes, apparently it is!

The Hybrid Blu-ray/DVD disc is a combination of a traditional DVD with the new high-definition Blu-ray format. Originally developed by JVC more than two years ago, the disc has a blue laser top layer (25GB); underneath there are two more layers just like an ordinary DVD (8.5GB). The Blu-ray and DVD layers are separated by a semi-reflective film that reflects blue light while it allows the red light to pass through the DVD layer underneath. The disc conforms to the “Blu-ray Disc, Hybrid Format” specifications released by the Blu-ray Disc Association.

Now I doubly wonder… can you go one step further and use a variable-wavelength laser to burn an arbitrary number of layers into the plastic, essentially making a 3D disc? I guess 4-layer Blu-rays are already kinda like that, or maybe holographic storage?

Just a slight correction. Episode 2 was the first one shot in 1920x1080. Episode 1 was filmed in 35mm.