To me? Sure. I have written a few.

This gets us to some of the more interesting magic of how a modern processor architecture works, although when I say modern there is a lot of scope for different ideas depending upon the use case of the architecture. Embedded device controllers are a very different world from a PC.

The standard theology of what an OS provides tends to focus on providing an abstract machine atop the hardware. The OS runs on the hardware to oversee and control stuff, user/client code runs under it, and devices operate asynchronously to the processor. Interaction among all three is handled by a couple of common mechanisms: interrupts and exceptions. Either of these causes the processor to drop what it is doing, save the state of what it was doing, and then start to run a designated piece of code. In architectures that provide protection mechanisms, the operating mode is usually switched to the most privileged mode (usually called kernel mode). In kernel mode the processor allows access to all of memory and devices, and enables certain reserved instructions that ordinary user mode processes cannot execute. The code that runs in kernel mode is often just called “the kernel”.
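To make the save, switch, dispatch sequence concrete, here is a minimal C sketch that simulates it in ordinary user-space code. On real hardware the processor does the saving and the mode switch itself; the vector numbers, `struct cpu_state`, `take_trap()` and the handler names are all hypothetical, and the 4-byte instruction size used to step past a system call trap is an assumption.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical vector numbers, for illustration only. */
enum { VEC_DIV_ZERO, VEC_SYSCALL, VEC_TIMER, NUM_VECTORS };

enum cpu_mode { MODE_USER, MODE_KERNEL };

/* The state the processor saves before running a handler. */
struct cpu_state {
    uint32_t pc;        /* where the interrupted code was */
    uint32_t regs[4];   /* a tiny register file */
    enum cpu_mode mode; /* privilege level at the time of the trap */
};

/* A handler sees the live CPU (now in kernel mode) and the saved frame. */
typedef void (*trap_handler)(const struct cpu_state *cpu, struct cpu_state *frame);

static trap_handler vector_table[NUM_VECTORS];
static unsigned handled[NUM_VECTORS];
static enum cpu_mode mode_during_trap;

static void on_div_zero(const struct cpu_state *cpu, struct cpu_state *frame) {
    (void)cpu; (void)frame;
    handled[VEC_DIV_ZERO]++;          /* a real kernel might signal or kill the process */
}

static void on_syscall(const struct cpu_state *cpu, struct cpu_state *frame) {
    (void)cpu;
    frame->regs[0] = 0;               /* return value to the caller, in r0 */
    frame->pc += 4;                   /* resume after the trap (assumes 4-byte instructions) */
    handled[VEC_SYSCALL]++;
}

static void on_timer(const struct cpu_state *cpu, struct cpu_state *frame) {
    (void)frame;
    mode_during_trap = cpu->mode;     /* record that the handler ran privileged */
    handled[VEC_TIMER]++;
}

void install_vectors(void) {
    vector_table[VEC_DIV_ZERO] = on_div_zero;
    vector_table[VEC_SYSCALL]  = on_syscall;
    vector_table[VEC_TIMER]    = on_timer;
}

/* Simulates what the hardware does when an interrupt or exception arrives. */
void take_trap(struct cpu_state *cpu, int vec) {
    assert(vec >= 0 && vec < NUM_VECTORS && vector_table[vec]);
    struct cpu_state frame = *cpu;    /* 1. save what we were doing */
    cpu->mode = MODE_KERNEL;          /* 2. switch to the privileged mode */
    vector_table[vec](cpu, &frame);   /* 3. run the designated code */
    *cpu = frame;                     /* 4. restore (mode included) and resume */
}
```

In this model a system call returns to user mode with its result in `regs[0]` and the program counter advanced past the trap, while a timer interrupt returns exactly where it left off.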

The difference between an exception and an interrupt is that exceptions are synchronous with program execution and are caused by it: a divide by zero, an access to an unmapped page, or a deliberate trap instruction used to ask the OS for a service. Interrupts occur asynchronously to program execution and are caused by hardware conditions. Perhaps the simplest interrupt is the one generated by the hardware clock, which causes the operating system to run at regular intervals, interleaved with user code.
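The clock interrupt is what lets the OS take the processor back from user code at regular intervals. Here is a minimal C sketch of that interleaving, assuming a fixed time slice and a plain round-robin policy; `struct proc`, `QUANTUM`, `schedule()` and `timer_interrupt()` are hypothetical names, and saving or restoring registers is reduced to a state change.

```c
#include <assert.h>

enum proc_state { UNUSED, READY, RUNNING, BLOCKED };

struct proc {
    enum proc_state state;
    /* a real kernel keeps the saved registers, stack pointer, etc. here */
};

#define NPROC   4
#define QUANTUM 3              /* clock ticks per time slice (hypothetical) */

static struct proc procs[NPROC];
static int current = -1;       /* index of the running process, -1 if idle */
static unsigned ticks_left;

/* Round robin: look for the next READY process after the current one. */
static int pick_next(void) {
    for (int i = 1; i <= NPROC; i++) {
        int cand = (current + i + NPROC) % NPROC;
        if (procs[cand].state == READY)
            return cand;
    }
    return -1;                 /* nothing runnable: a real kernel would idle */
}

/* Runs on the way out of the kernel: choose who gets the CPU next. */
void schedule(void) {
    if (current >= 0 && procs[current].state == RUNNING)
        procs[current].state = READY;     /* preempted but still runnable */
    int next = pick_next();
    assert(next < 0 || procs[next].state == READY);
    if (next >= 0) {
        procs[next].state = RUNNING;      /* stands in for restoring its registers */
        ticks_left = QUANTUM;
    }
    current = next;
}

/* Clock interrupt handler: count down the slice and preempt when it runs out. */
void timer_interrupt(void) {
    if (current < 0 || --ticks_left == 0)
        schedule();
}
```

Blocked processes are simply skipped. A real scheduler adds priorities, an idle loop and the actual register save and restore, but the shape (tick, count down, pick someone else) is the same.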

Devices can range across huge amounts of complexity. Consider a trivial serial line input: we get 8-bit ASCII characters, one at a time. Every time you press a key, the device generates an interrupt. A mix of hardware and software, specific to the exact hardware, allows the operating system to work out which device caused it. The interrupt fires, the processor stores away what it was doing, and runs the interrupt handler code. The first thing the handler does is work out where the interrupt came from. There may be special registers it can examine (possibly mapped into memory, possibly in special hardware that requires special instructions to read). Once it knows what caused the interrupt, it calls the appropriate device-specific handler code. That code might simply read the 8-bit ASCII value from the device. Again, the device might be made available as a memory location, or there might be a significant amount of messing about with reserved instructions to read the value from the hardware. The handler then passes the value up to wherever it is needed (which is a whole separate miracle of abstraction).

Much the same happens to write a character to a device. This time, however, the action is initiated in user code. The user code calls a special routine that eventually causes an exception, which causes the operating system interface to be executed. Same deal: the exception handler works out what action is needed, and in the case of device output calls the device code to perform it. Since that code is running within the operating system, in kernel mode, it also has access to the appropriate hardware. It may be as trivial as writing the ASCII character into a specific location to cause the hardware to output it. Commonly the operating system wants to know when the device has finished, and so may also expect the device to raise another interrupt when it is done.

Once all this is done the operating system will find the next available user process, restore its state and resume execution of it, dropping out of kernel mode as it does so. To reiterate: it is the hardware that provides the abstraction of these modes and controls transitions between them. They are the magic that allows the OS to do its work.
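The serial line walk-through can be sketched in C roughly as follows. This is an assumption-laden model, not real driver code: the "memory-mapped" registers are ordinary variables rather than fixed hardware addresses, and the register layout, bit positions, interrupt line number, and every function name (`irq_dispatch()`, `uart_rx_handler()`, `uart_getc()`, `uart_putc()`, `simulate_keypress()`) are hypothetical. Acknowledging by clearing a status bit is also an assumption; many real UARTs clear it when the data register is read.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical register layouts. On real hardware these structs would sit at
   fixed physical addresses taken from the device manual; here they are plain memory. */
struct uart_regs {
    volatile uint32_t status;   /* bit 0: RX byte ready, bit 1: TX idle */
    volatile uint32_t data;     /* read: received byte; write: byte to send */
};
#define UART_RX_READY 0x1u
#define UART_TX_IDLE  0x2u

struct intc_regs {
    volatile uint32_t pending;  /* interrupt controller: one bit per source */
};
#define IRQ_UART 3u             /* hypothetical interrupt line */

static struct uart_regs fake_uart;
static struct intc_regs fake_intc;
static struct uart_regs *const uart = &fake_uart;
static struct intc_regs *const intc = &fake_intc;

/* Ring buffer where received characters wait to be passed up. */
#define RX_BUF_SIZE 16u
static uint8_t rx_buf[RX_BUF_SIZE];
static unsigned rx_head, rx_tail;

typedef void (*irq_handler)(void);
static irq_handler irq_table[32];

/* Device-specific handler: pull one byte out of the UART. */
static void uart_rx_handler(void) {
    if (!(uart->status & UART_RX_READY))
        return;                                 /* spurious interrupt */
    uint8_t ch = (uint8_t)(uart->data & 0xFFu);
    uart->status &= ~UART_RX_READY;             /* acknowledge the device */
    if (rx_head - rx_tail < RX_BUF_SIZE)
        rx_buf[rx_head++ % RX_BUF_SIZE] = ch;   /* otherwise the byte is dropped */
}

/* Top-level handler: find out which device(s) interrupted, then dispatch. */
void irq_dispatch(void) {
    uint32_t pending = intc->pending;
    for (unsigned line = 0; line < 32; line++) {
        if (pending & (1u << line)) {
            if (irq_table[line])
                irq_table[line]();
            intc->pending &= ~(1u << line);     /* acknowledge at the controller */
        }
    }
}

/* Called on behalf of a reader; returns -1 if nothing has arrived. */
int uart_getc(void) {
    if (rx_head == rx_tail)
        return -1;
    return rx_buf[rx_tail++ % RX_BUF_SIZE];
}

/* Output path, reached via a system call: poke the byte into the data register. */
int uart_putc(uint8_t ch) {
    if (!(uart->status & UART_TX_IDLE))
        return -1;                              /* busy; wait for the TX-done interrupt */
    uart->data = ch;
    uart->status &= ~UART_TX_IDLE;              /* simulates the device going busy */
    return 0;
}

void uart_init(void) {
    uart->status = UART_TX_IDLE;
    irq_table[IRQ_UART] = uart_rx_handler;
}

/* Stand-in for the hardware: a key arrives and the interrupt line is raised. */
void simulate_keypress(uint8_t ch) {
    fake_uart.data = ch;
    fake_uart.status |= UART_RX_READY;
    fake_intc.pending |= 1u << IRQ_UART;
}
```

The two-level structure (a generic dispatcher that asks the interrupt controller who fired, then a small device-specific handler) mirrors the description above; only the register poking would change for a different device.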

This brings up the question of asynchronous IO. The above example has the IO occurring synchronously with the requests, with the requesting code blocked awaiting the device. The OS commonly provides the file system, and uses device drivers that control disks or SSDs or talk on the network. It doesn’t want to be blocked waiting for operations that take a long time, so it makes requests of the device and expects the device to interrupt it when each request is done. Internally the OS may maintain queues of requests with many outstanding, and modern devices may themselves support multiple outstanding requests. The OS is expected to manage the circus.
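A minimal C sketch of that bookkeeping, assuming a device that accepts a fixed number of outstanding commands (`DEVICE_SLOTS`). `submit_io()` queues a request and returns at once; `io_complete_irq()` plays the part of the completion interrupt, which may arrive in any order. All names here, including `device_issue()` standing in for writing device registers and the example callback `record_done()`, are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

struct io_request {
    unsigned block;                          /* which disk block */
    void (*on_done)(struct io_request *);    /* run from the completion interrupt */
    struct io_request *next;                 /* link in the OS's pending queue */
    int status;                              /* -1 until the device finishes */
};

#define DEVICE_SLOTS 2   /* hypothetical: device accepts 2 outstanding commands */

static struct io_request *pending_head, *pending_tail;  /* OS-side FIFO */
static struct io_request *in_flight[DEVICE_SLOTS];      /* handed to the device */

/* Stand-in for writing a command into the device's registers. */
static void device_issue(int slot, struct io_request *r) { in_flight[slot] = r; }

/* Move queued requests into any free device slots. Never blocks. */
static void start_more(void) {
    for (int s = 0; s < DEVICE_SLOTS && pending_head; s++) {
        if (in_flight[s] == NULL) {
            struct io_request *r = pending_head;
            pending_head = r->next;
            if (pending_head == NULL)
                pending_tail = NULL;
            r->next = NULL;
            device_issue(s, r);
        }
    }
}

/* Called by, say, the file system; returns immediately. */
void submit_io(struct io_request *r) {
    r->next = NULL;
    r->status = -1;
    if (pending_tail) pending_tail->next = r; else pending_head = r;
    pending_tail = r;
    start_more();
}

/* Completion interrupt for one device slot. */
void io_complete_irq(int slot, int status) {
    struct io_request *r = in_flight[slot];
    assert(r != NULL);
    in_flight[slot] = NULL;
    r->status = status;
    start_more();            /* refill the slot to keep the device busy */
    r->on_done(r);
}

/* Example callback: remember the order in which blocks completed. */
static unsigned completed[8];
static int ncompleted;
static void record_done(struct io_request *r) {
    if (ncompleted < 8)
        completed[ncompleted++] = r->block;
}
```

The completion handler refills the free slot before running the callback, so the device keeps working while the rest of the OS reacts to the finished request.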

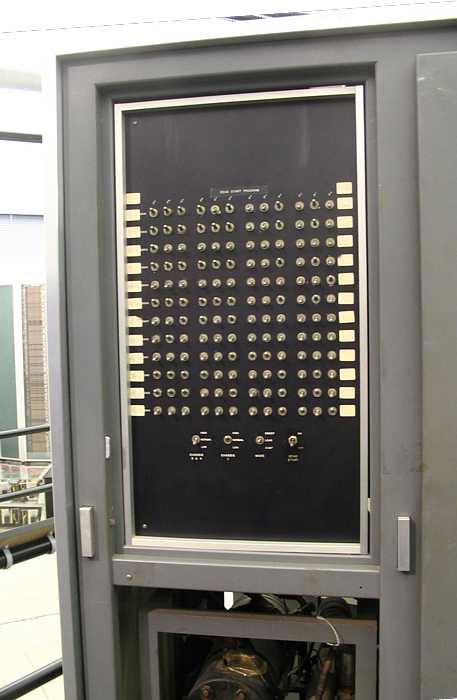

A lot of the above is operating systems 101 level: the level that worked on something like the PDP-11, where I first did such stuff. Things get more complex with much smarter devices. I haven’t mentioned buses, the way half the devices in a modern PC are on the same die as the processor or on the chip next door, nor the fabulously weird and specialised mechanisms seen in high-end embedded control processors. We are in a world where devices themselves run embedded processors with their own operating systems.

Writing a real device driver is mostly about reading the device manuals, with their page upon page upon page of device register definitions, and working out what to poke where to make what you want to happen actually happen. Then dealing with the asynchronous nature of everything. Typically you end up with an event-driven model of code that reacts to input, output and requests, and otherwise shovels control requests about. I haven’t mentioned Direct Memory Access (DMA) or DMA controllers. Most devices use DMA, and another part of device driver writing is setting up data transfers by programming DMA requests. DMA systems are like little devices in their own right, and they exist to shovel data about in nice big chunks for you. I have also been out of the game for quite a while.
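To give a flavour of setting up DMA, here is a minimal C sketch of a descriptor chain that gathers several buffers into one contiguous destination. Real controllers each define their own descriptor format in the manual and are started by writing the chain's bus address into a register; `struct dma_desc`, `dma_build_gather()`, `dma_start()` and `fake_dma_engine_run()` (which stands in for the hardware) are hypothetical. A real driver would also typically need bus addresses rather than CPU pointers and would have to deal with cache coherency, both of which this sketch ignores.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical descriptor format; real controllers define their own. */
struct dma_desc {
    const void *src;
    void *dst;
    uint32_t len;
    uint32_t flags;            /* DMA_IRQ_ON_DONE: interrupt after this one */
    struct dma_desc *next;     /* NULL ends the chain */
};
#define DMA_IRQ_ON_DONE 0x1u

struct dma_channel {
    struct dma_desc *head;
    volatile int busy;
    volatile int done_irq;     /* set when the "completion interrupt" fires */
};

/* Driver side: chain n descriptors that gather n source buffers into dst. */
struct dma_desc *dma_build_gather(struct dma_desc *d, const void *const *srcs,
                                  const uint32_t *lens, int n, uint8_t *dst) {
    assert(n > 0);
    for (int i = 0; i < n; i++) {
        d[i].src = srcs[i];
        d[i].dst = dst;
        d[i].len = lens[i];
        d[i].flags = (i == n - 1) ? DMA_IRQ_ON_DONE : 0;  /* interrupt only at the end */
        d[i].next = (i == n - 1) ? NULL : &d[i + 1];
        dst += lens[i];
    }
    return &d[0];
}

/* Driver side: hand the chain to the controller and return immediately. */
void dma_start(struct dma_channel *ch, struct dma_desc *head) {
    ch->head = head;
    ch->done_irq = 0;
    ch->busy = 1;              /* a real driver would write head's address to a register */
}

/* Stand-in for the DMA hardware walking the chain on its own. */
static void fake_dma_engine_run(struct dma_channel *ch) {
    for (struct dma_desc *d = ch->head; d != NULL; d = d->next) {
        memcpy(d->dst, d->src, d->len);
        if (d->flags & DMA_IRQ_ON_DONE)
            ch->done_irq = 1;
    }
    ch->busy = 0;
}
```

Asking for a single interrupt at the end of the chain, rather than one per buffer, is the "nice big chunks" benefit: the processor sets things up once and hears about it once.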