Once in a while someone will chide me or another staffer for not counting back change, apparently thinking that method is immune to error. I like to retort that humans have made more mathematical errors in the last five seconds than computers have in the last five decades.

I mean, humans have made these errors in their heads and on their hands. Input errors into a computer/calculator/cash register don’t count because the machine gave the correct answer, given the input. Which brings me to my question: Can a computer make a mathematical error, at any level of its operation? What would happen if it did? Is my snarky remark (above) even more true than I suspect?

Not every possible permutation of a program’s inputs and states gets tested, so corner cases can slip through. In the case of a cash register, I assume the testing would be good enough to eliminate all corner cases effectively, but humans, who develop the tests, aren’t perfect and may miss something.

The good news is that errors, even small ones, are often noticed soon after the software is released and the bug is fixed. The bad news is that until the bug is fixed, that error will continue to happen whenever that corner case situation pops up again.

What is the nature of these errors? Is it that the machine can’t return an answer (e.g. it’s asked to divide by zero), that it isn’t powerful enough or the universe won’t last long enough for it to return an answer (e.g. it is asked “what is the decimal expansion of TREE(3) factorial raised to the power of Graham’s number?”), or that it returns an incorrect answer (e.g. 2+2=7.448)?
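A minimal Python sketch of the first and third kinds of outcome, with overflow standing in, at a much smaller scale, for an answer too big to produce. The helper `try_compute` is a hypothetical name made up for illustration; `ZeroDivisionError` and `OverflowError` are the standard Python exceptions for these cases.

```python
import math


def try_compute(label, fn):
    """Call fn() and describe the outcome, including how it failed, if it did."""
    try:
        return f"{label}: {fn()!r}"
    except ZeroDivisionError:
        return f"{label}: no answer (division by zero)"
    except OverflowError:
        return f"{label}: no answer (result too large for a 64-bit float)"


# The machine refuses: there is no answer to give.
print(try_compute("1 / 0", lambda: 1 / 0))
# The answer exists but cannot be represented (a very small-scale stand-in for TREE(3)).
print(try_compute("exp(1000)", lambda: math.exp(1000)))
# An answer comes back, but it is a close approximation, not the exact value.
print(try_compute("0.1 + 0.2", lambda: 0.1 + 0.2))
```

Note that the third case is not a 2+2=7.448 style failure: the machine is doing exactly what floating-point arithmetic specifies.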

When the original array for the Pentium was compiled, five values were not correctly downloaded into the equipment that etches the arrays into the chips – thus five of the array cells contained zero when they should have contained +2.
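For reference, the check that circulated at the time divided 4195835 by 3145727. A sketch of that check in Python, assuming it runs on a modern, unflawed floating-point unit; the “flawed” figure in the comment is the one commonly reported for affected chips, not something this code can reproduce.

```python
x = 4195835.0
y = 3145727.0

# Correct IEEE 754 hardware gives roughly 1.33382044913624; flawed Pentiums
# were reported to give roughly 1.33373906890204 because of the missing
# table entries.
quotient = x / y
print(quotient)

# Multiplying back should recover x, up to a tiny rounding error, on a correct chip.
residual = x - quotient * y
print(residual)
```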

It seems to me this was a human error; I’m guessing people did the compiling, downloading, and/or etching? Weren’t these processors returning the correct values for computations, given how they were made?

Rounding errors (which can easily accumulate) are the bane of programming.

What is the nature of rounding errors?

That’s a useless distinction.

Say you had a device (like an adding machine) that generated incorrect results as it wore out. That is still a human error, since humans designed the machine.

I used to be a programmer, but I’m no expert on this subject; there are others here who can explain it better. If your program does a lot of floating-point calculations (floating-point variables approximate real numbers), there are always rounding errors, whose size depends on the precision, or bit length, of the variables. If the programmer isn’t careful, these can grow into massive errors at run time.
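A minimal sketch of that accumulation, assuming Python: it adds 0.1 a million times at 64-bit precision and, using the standard `struct` module, at emulated 32-bit precision, then compares both with `math.fsum`, which returns a correctly rounded sum. The helpers `to_float32` and `naive_sum` are hypothetical names for this example.

```python
import math
import struct


def to_float32(x: float) -> float:
    """Round a Python float (64-bit) to the nearest 32-bit float."""
    return struct.unpack("f", struct.pack("f", x))[0]


def naive_sum(step: float, n: int, round_to=lambda v: v) -> float:
    """Add `step` to a running total n times, rounding after every addition.

    Adding in 64 bits and then rounding to 32 bits gives the same result as a
    true 32-bit addition, because 64-bit floats carry enough extra precision.
    """
    total = round_to(0.0)
    step = round_to(step)
    for _ in range(n):
        total = round_to(total + step)
    return total


n = 1_000_000
exact = n / 10  # 100000.0, exactly representable
sum64 = naive_sum(0.1, n)
sum32 = naive_sum(0.1, n, round_to=to_float32)

print("64-bit error:", abs(sum64 - exact))
print("32-bit error:", abs(sum32 - exact))
print("fsum error:  ", abs(math.fsum([0.1] * n) - exact))
```

The 64-bit total is off by only about a millionth, while the 32-bit total drifts by hundreds: same algorithm, different bit length.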

Fair enough, maybe. I’m not trying to No-True-Scotsman the meaning of “human error” or “error” here, really I’m not.

There seems to be room for me to say that these three calculators are making different kinds of errors: one intentionally designed to return “7.448” when asked “2+2=?”; one mistakenly designed to return “7.448” when asked “2+2=?”; and one that, when asked “2+2=?”, returns “7.448” for reasons unrelated to its design (but rather to its functioning, if that makes sense). The first two are related, and are human. The last isn’t, if such errors occur, or indeed if they can occur. That’s what I’m wondering.

Rounding functions are well defined. If rounding errors accumulate it’s because of human error.
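To illustrate that rounding is well defined but that the choice of definition matters, a small Python sketch: the built-in `round()` uses round-half-to-even, the standard `decimal` module lets you pick round-half-up explicitly, and a value like 2.675 rounds “down” because it was never stored exactly in the first place.

```python
from decimal import Decimal, ROUND_HALF_UP

# Python's built-in round() uses "round half to even" (banker's rounding).
print(round(0.5), round(1.5), round(2.5))  # 0 2 2

# The decimal module lets you choose the rounding rule explicitly.
half_up = [Decimal(s).quantize(Decimal("1"), rounding=ROUND_HALF_UP)
           for s in ("0.5", "1.5", "2.5")]
print(half_up)  # [Decimal('1'), Decimal('2'), Decimal('3')]

# The double nearest 2.675 is slightly below 2.675, so rounding to two
# places correctly gives 2.67.
print(Decimal(2.675) < Decimal("2.675"))  # True
print(round(2.675, 2))  # 2.67
```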

I know, but human errors occur. Even among programmers.


Computers failing to operate as intended are equivalent to a human having a stroke. Is that an error of mathematics, or general dysfunction?

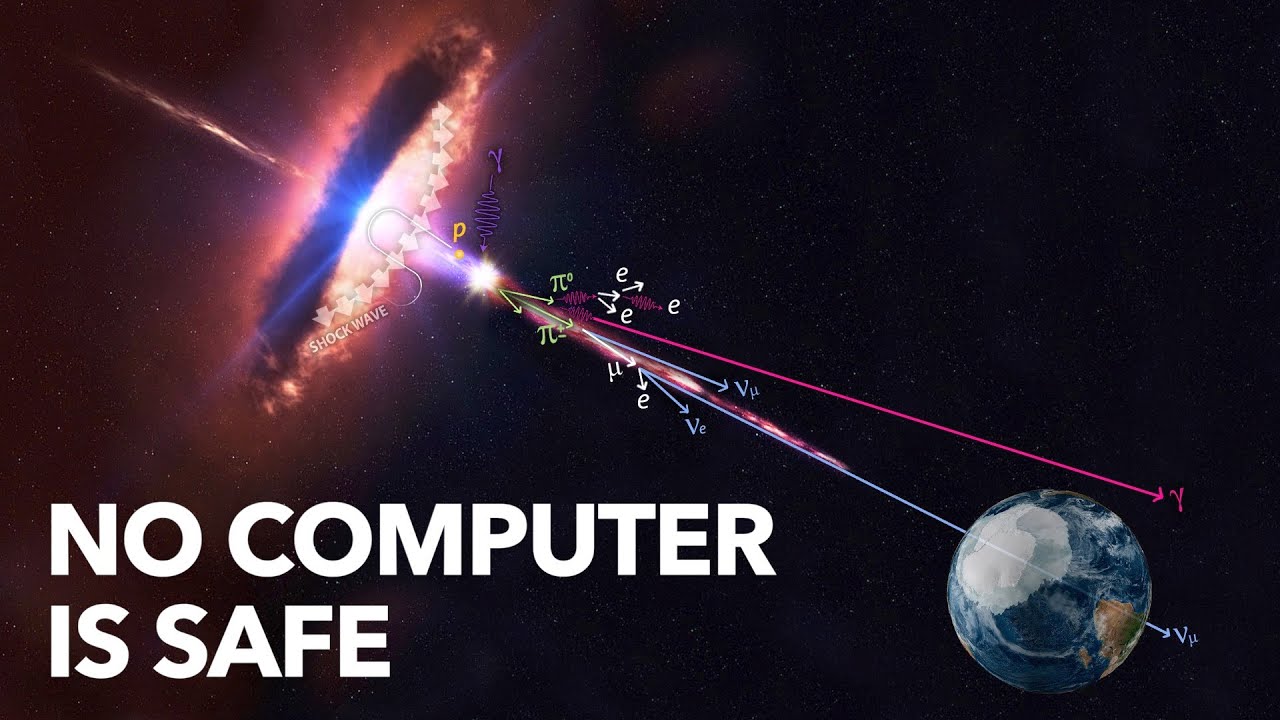

A computer that is correctly designed, running software that is comprehensively tested, can still make a calculation error if a bit in memory is randomly flipped by a cosmic ray. Veritasium has a video that explains the phenomenon.
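A minimal Python sketch of what a single flipped bit does to a stored number, assuming 64-bit IEEE 754 doubles. `flip_bit` is a hypothetical helper that uses the standard `struct` module to reinterpret the bytes; it only simulates the effect and says nothing about how often real hardware sees such flips.

```python
import struct


def flip_bit(x: float, bit: int) -> float:
    """Return x with one bit of its 64-bit IEEE 754 encoding inverted.

    Bits 0-51 hold the mantissa, bits 52-62 the exponent, bit 63 the sign.
    """
    (bits,) = struct.unpack("<Q", struct.pack("<d", x))
    bits ^= 1 << bit
    (flipped,) = struct.unpack("<d", struct.pack("<Q", bits))
    return flipped


value = 2.0 + 2.0
print(flip_bit(value, 0))   # lowest mantissa bit: 4.000000000000001
print(flip_bit(value, 51))  # highest mantissa bit: 6.0
print(flip_bit(value, 62))  # highest exponent bit: about 2.2e-308
```

Which bit gets hit decides whether the damage is practically invisible or catastrophic.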

I don’t know if it counts as a rounding error, but there are numerous cases where a computer will say the answer is 0.999999999 when the answer is 1. It happens often with logarithms, but not only there. It would never happen with pencil and paper if you understand what you are doing, but the computer doesn’t see that, because it just goes through the programmed steps. It seems unavoidable.
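A concrete Python instance of the logarithm case, assuming standard 64-bit floats: computing the base-10 logarithm of 1000 by change of base lands just under 3, while the dedicated `math.log10` function returns exactly 3.0 for this input.

```python
import math

# log(1000) / log(10), done in floating point, is not exactly 3.
via_change_of_base = math.log(1000, 10)
print(via_change_of_base)  # 2.9999999999999996
print(math.log10(1000))    # 3.0

# Truncating the inexact result gives the "wrong" integer.
print(math.floor(via_change_of_base))  # 2

# Comparing with a tolerance is the usual remedy.
print(math.isclose(via_change_of_base, 3.0))  # True
```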

There are a bunch of different types of errors we could be talking about.

There are of course bugs, where the human has commanded the computer to do the wrong thing. There are also hardware errors, where perhaps there is some latent defect, or the computer is being operated outside of its design range.

The most subtle kind of error is where the machine is operating as designed, but the user isn’t aware of some subtleties. For instance, on most computers, adding 0.1 to itself ten times does not give exactly 1.0. That’s not exactly an error of mathematics; it comes from the way numbers are represented. The difference is usually too small to notice, but a user who expects the result to be exactly 1 may think it’s an error, because in ordinary arithmetic it is exact.
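A short Python sketch of the representation issue, assuming standard 64-bit floats: the stored value of 0.1 is not exactly one tenth, so adding it repeatedly, with rounding after each step, drifts. The standard `decimal` and `fractions` modules expose the exact stored value. (The loop is written out on purpose: recent Python versions make the built-in `sum()` compensate for this drift.)

```python
from decimal import Decimal
from fractions import Fraction

# The double closest to 0.1 is slightly more than one tenth.
print(Decimal(0.1))
# 0.1000000000000000055511151231257827021181583404541015625
print(Fraction(0.1) == Fraction(1, 10))  # False

# Adding it ten times, rounding after each step, ends just below 1.0.
total = 0.0
for _ in range(10):
    total += 0.1
print(total)         # 0.9999999999999999
print(total == 1.0)  # False
```

Multiplying 0.1 by 10 in a single step happens to round back to exactly 1.0, which is why repeated addition is the clearer demonstration.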

It is possible to design correct code with as much confidence as we have in any mathematical proof. It’s also possible to design exceptionally reliable hardware. For some loose definition of “never”, we could say that such a computer would never make an error.

Even normal computers are exceptionally reliable, though. All of their basic instructions are essentially math operations: add, multiply, compare, etc. And they perform billions of these per second, for years, often with no errors. Even if your program crashes, it’s likely that the computer itself was still behaving correctly.

We made most of those ‘mistakes’ intentionally to keep our jobs secure.

Fascinating stuff. I love the Dope!

You have to be very careful doing math in a computer.

One example is integer math. Take the number 10, divide it by 6, then multiply the result by 6. Obviously you should get 10 back. The computer gives you 6: in integer math, 10/6 = 1 (the remainder is thrown away), and 1 × 6 = 6.
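The same sequence in Python, where `//` is integer (floor) division; plain `/` would give a float instead. The sketch also shows two common ways around the loss: reordering the operations, and exact rational arithmetic with the standard `fractions` module.

```python
from fractions import Fraction

a, b = 10, 6
print(a // b * b)          # 6: 10 // 6 is 1, the remainder is discarded
print(divmod(a, b))        # (1, 4): quotient and the lost remainder
print(a * b // b)          # 10: multiplying first avoids the loss here
print(Fraction(a, b) * b)  # 10: exact rational arithmetic
```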

You end up with similar weirdness with floating-point numbers. Computers use base-2 (binary) arithmetic, and 1/10 in base 2 is an infinitely repeating fraction (0.00011001100110011…). A floating-point number (or any fixed-width binary number) holds only a fixed number of bits, so you can’t possibly store 1/10 in it exactly. The Patriot missile system in the first Gulf War had a famous bug in which its tracking became more and more inaccurate the longer it stayed powered on. The root cause was this inexact storage of 1/10 combined with the way the Patriot did its timing calculations: it counted time in tenths of a second, so the tiny error grew with every tick.
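A back-of-the-envelope sketch of the timing drift, under the assumptions used in the commonly cited analysis of the bug: the clock counts tenths of a second, and 1/10 is chopped (truncated, not rounded) to a fixed-point value with 23 fractional bits. `FRACTION_BITS` and the 100-hour uptime are illustrative parameters, not values taken from the Patriot’s actual code.

```python
from fractions import Fraction

# Assumption: 1/10 stored in fixed point with 23 fractional bits, truncated.
FRACTION_BITS = 23
stored_tenth = Fraction((2**FRACTION_BITS) // 10, 2**FRACTION_BITS)
error_per_tick = Fraction(1, 10) - stored_tenth  # seconds lost per tick

hours_up = 100
ticks = hours_up * 3600 * 10          # one tick every 0.1 s
clock_drift = ticks * error_per_tick  # total seconds lost

print(float(error_per_tick))  # about 9.5e-08 s per tick
print(float(clock_drift))     # 0.34332275390625 s after 100 hours
```

About a third of a second is the figure usually quoted for a battery that had been running for roughly 100 hours; for a target moving as fast as a ballistic missile, that is enough to look in the wrong place.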

Since computers are designed and built by humans, all computer errors are ultimately human errors.