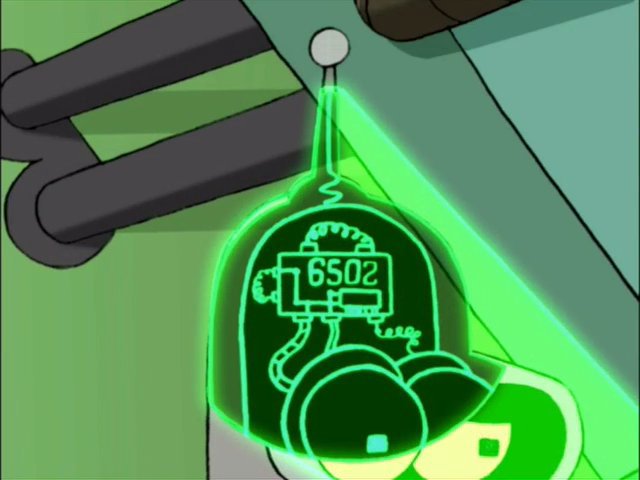

Well, the 6502 has about 16 times as many transistors as the all-discrete Nixie clock, so it isn’t totally insane.

Also, multi-layer PCBs are cheating. Maybe PCBs in general are cheating (although wire wrap might be easier than a single-sided PCB, because bridging over other connections is an inherent feature of wire wrap).

But that ASCII art porn was hot

The arc taking us from Univac to Control Data reminds me to mention the classic book A Few Good Men From Univac.

The control panel and documentation were in octal, so everything was divided into 3-bit chunks.
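To make the 3-bit grouping concrete, here is a small Python sketch that splits a word into octal digits. It assumes a 36-bit word (the IBM 704’s word size); the sample value is arbitrary, and the function names are just for illustration.

```python
# Sketch: splitting a binary word into 3-bit chunks, one per octal digit.
# Assumption: a 36-bit word (the IBM 704's word size); the sample value is arbitrary.

WORD_BITS = 36


def octal_groups(word: int, bits: int = WORD_BITS) -> list[str]:
    """Return the word's 3-bit chunks as binary strings, most significant first."""
    if bits % 3 != 0:
        raise ValueError("word width must be a multiple of 3")
    return [format((word >> shift) & 0b111, "03b")
            for shift in range(bits - 3, -1, -3)]


def to_octal(word: int, bits: int = WORD_BITS) -> str:
    """Each 3-bit chunk maps to exactly one octal digit 0-7."""
    return "".join(str(int(group, 2)) for group in octal_groups(word, bits))


word = 0o123456701234
print(" ".join(octal_groups(word)))
# 001 010 011 100 101 110 111 000 001 010 011 100
print(to_octal(word))                          # 123456701234
print(to_octal(word) == format(word, "012o"))  # True
```

A 36-bit word divides evenly into 12 octal digits, which is part of why octal was so natural on machines of that word size.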

Another contemporary, the IBM 705, was a variable-word-length BCD processor. It used the hex codes above 9 as delimiters: end of word, beginning of word, decimal point, etc. It also used hex notation in place of octal. But the terms byte and nibble hadn’t been invented yet; as far as I remember, we simply used “character” for both hex and octal.
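A minimal Python sketch of the general idea of spending the unused BCD codes on delimiters, so that numbers can have any length. The specific marker values (0xA for decimal point, 0xB for beginning of word, 0xC for end of word) are hypothetical, chosen only for illustration; they are not the 705’s actual encoding.

```python
# Sketch: decimal digits as 4-bit BCD codes, with spare codes (0xA-0xF) used as markers.
# The marker assignments below are HYPOTHETICAL, not the IBM 705's real codes.

DECIMAL_PT = 0xA  # hypothetical "decimal point" marker
BEGIN_WORD = 0xB  # hypothetical "beginning of word" marker
END_WORD = 0xC    # hypothetical "end of word" marker


def encode(numbers: list[str]) -> list[int]:
    """Encode decimal strings like '12.5' as a stream of 4-bit codes."""
    stream = []
    for text in numbers:
        stream.append(BEGIN_WORD)
        for ch in text:
            stream.append(DECIMAL_PT if ch == "." else int(ch))
        stream.append(END_WORD)
    return stream


def decode(stream: list[int]) -> list[str]:
    """Recover variable-length numbers by scanning for the delimiter codes."""
    words = []
    current = None  # digits of the word being read, or None between words
    for code in stream:
        if code == BEGIN_WORD:
            current = []
        elif current is None:
            raise ValueError(f"code {code:#x} found outside a word")
        elif code == END_WORD:
            words.append("".join(current))
            current = None
        elif code == DECIMAL_PT:
            current.append(".")
        elif 0 <= code <= 9:
            current.append(str(code))
        else:
            raise ValueError(f"unexpected code {code:#x}")
    return words


stream = encode(["12.5", "7"])
print("".join(f"{c:X}" for c in stream))  # B12A5CB7C
print(decode(stream))                     # ['12.5', '7']
```

Because the boundaries are marked in the data itself, nothing in the decoder depends on a fixed word width.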

Vacuum-tube registers took up a lot of real estate, and the 701 had only 2K of memory, so there was no need for a large memory address register. Data was on tape, and memory was just temporary storage. Memory on a 704 was 4K, expandable. The RAND Corporation in LA had an 8K machine, which was considered awesome at the time.
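The arithmetic behind the address-register point: each doubling of memory adds just one address bit. This Python sketch assumes one address per word, which simplifies how the real machines actually addressed memory.

```python
# Sketch: how many address bits a memory of a given size needs.
# Assumption: one address per word (real machines' addressing details differ).


def address_bits(words: int) -> int:
    """Smallest n such that 2**n >= words."""
    return (words - 1).bit_length()


for size in (2048, 4096, 8192):
    print(f"{size // 1024}K words -> {address_bits(size)}-bit address")
# 2K words -> 11-bit address
# 4K words -> 12-bit address
# 8K words -> 13-bit address
```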

The 704 word length was also expandable by attaching a second mainframe module. This was a bit imposing, because the mainframe with its operator desk and core module was about 20 feet long, so a double-precision system was around 36 feet. But that gave you 72-bit floating-point arithmetic.

These systems came with a resident maintenance crew. The IBM job title was Customer Engineer, and the IBM tool kit was a very cool executive-style leather briefcase. That was my first job fresh out of the Air Force. I was assigned to the systems at Lockheed Burbank (704, 705, 709) and JPL Pasadena (extended-precision 704).

The famous hackers’ Jargon File defines a byte as

So we have that historical note.

Revised (~1999–2011): Knuth, describing a 64-bit RISC machine (MMIX), defines byte = 8 bits, wyde = 16 bits, tetra = 32 bits, octa = 64 bits.

Interesting that Knuth’s jargon went nowhere.

As for building logic out of weird things, I was guest-teaching some second-grade science classes for a friend of mine, and I had the kids assemble themselves into an adder, each kid acting as a gate.
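For anyone wanting to try the classroom exercise, here is a Python sketch of a ripple-carry adder built only from AND, OR and XOR gates, one function call per “kid”. The five-gate full adder is one standard design, not necessarily the one used in class; with it, a 4-bit adder needs 20 gates.

```python
# Sketch: a ripple-carry adder built only from AND, OR and XOR "gates".
# Each gate call stands in for one person in the classroom version.


def AND(a: int, b: int) -> int:
    return a & b


def OR(a: int, b: int) -> int:
    return a | b


def XOR(a: int, b: int) -> int:
    return a ^ b


def full_adder(a: int, b: int, carry_in: int) -> tuple[int, int]:
    """Five gates: two XORs, two ANDs and one OR. Returns (sum, carry_out)."""
    partial = XOR(a, b)
    total = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return total, carry_out


def ripple_add(x: int, y: int, width: int = 4) -> tuple[int, int]:
    """Add two width-bit numbers bit by bit; the carry 'ripples' upward."""
    carry, result = 0, 0
    for i in range(width):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


print(ripple_add(0b0101, 0b0011))  # (8, 0)
print(ripple_add(0b1111, 0b0001))  # (0, 1)
```

The second call shows the carry rippling all the way out of the top bit, which is the slow part you can watch happen when people are the gates.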

I didn’t consciously get the idea from “The Three-Body Problem”, but I had read it.

They even used zero-ohm resistors as jumpers. What dirty cheats!

Interestingly, a 6502 could not be made with 7400-series logic chips. It uses some tricks specific to discrete transistors. And it’s not purely static logic: it depends on weird capacitive effects and such, so the chips don’t even run below a certain clock rate (unless you scale up everything else, as was done here).

On my very first day of EE201, “Digital Circuit Design”, we did exactly that using college kids. It was really silly, and it was not well introduced, so much of the value of the demonstration was lost on the students.

I tried getting a class to implement a basic pipeline once. I didn’t prepare it well enough, and whilst it sort of worked, it didn’t do much for the pedagogy.

I really wanted to do something much more detailed but it was never going to work.

We also did a version of the paging game for the OS tutorials. That worked a lot better, but it was also a lot better prepared.

Yeah, I heard that on something I was watching just this week: that a 6502 is at least partly an analogue device that just happens (by design) to function in a digital manner.

That’s why the Z80 is superior, of course…

[ducks and runs]

Not too unusual… With modern chips, when a run of chips is new, they quality-test all of them, and the ones that test at high quality get set to high clock speeds and sold for a premium, while the lower-quality ones are set to lower clock speeds and sold cheaper. When a chip has been in production for a while, they work out most of the quality issues, such that most of the chips coming off of the line are high-quality, but they still downclock some of them so as to have multiple price points.

And so, of course, some consumers do buy the cheaper chips and overclock them. Which usually works out OK, except when it doesn’t.

The quoted example isn’t binning; it is explicitly adding extra hardware to more expensive equipment to make it slower. Which someone must have attempted to remove.

Don’t forget that a computer center needed to power not only the processor (and peripherals) but also a big air conditioner to dissipate all that heat.

My dad had a FORTRAN program to diagonalize 50x50 matrices. He got a version of FORTRAN to run it on his newfangled 8088 PC; it would run for hours, sometimes more than a day. When he got his 386DX, he set it up and started a run. When he came back from getting a cup of coffee, he saw it was back at the prompt. He spent half an hour trying to debug it before he realized it had finished in less than 5 minutes. On the 8088, floating point was done in software, byte by byte; on the 386, it was done by the 80387 math coprocessor.

(I saw an ad, “speed up your video games”, for early PCs. What it did was replace BASIC’s floating-point library. For video games, it was only necessary to get about 3 digits of accuracy on a 640x480 VGA screen, not 8 or more digits. So it simply abbreviated the floating-point calculations, and your video games would run much faster. Of course, in the usual misleading ad hyperbole, it was “software to turn your 386SX into a DX”.)

My dad also mentioned using an electromechanical computer in the late 1950s. Electronic memory was at a premium, so this one used a giant drum and a line of read heads. Each memory cell was at a certain point around the drum, and each cell could hold either data (the contents read into or written from a register) or an instruction. Each instruction included the address of the cell holding the next instruction. Essentially, it was a giant programmable calculator. Part of the challenge of writing (machine code) programs was looking up the number of clock cycles each instruction took, and positioning the following instruction in a cell X degrees around the drum to minimize the rotational latency before the computer could read that next cell. Programs were written on a large sheet of paper with a square representing each cell, then hand-transferred into the computer’s drum. Those were the days. He used that skill to amass a large library of HP calculator cards that did calculations for him. I also have a Curta hand-cranked multi-digit calculator of his from the late 1950s.
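The drum placement trick can be sketched in a few lines of Python. The drum model here is hypothetical and not any particular machine: one track of 64 cells, one cell passing the head per word-time, one word-time to read an instruction, and execution times given in word-times. For each instruction, the sketch picks the first free cell that arrives under the head just as the current instruction finishes.

```python
# Sketch: "optimum placement" of instructions on a drum-memory machine.
# Hypothetical model (not any specific machine): one track of TRACK_CELLS cells,
# one cell passes the read head per word-time, reading an instruction takes
# one word-time, and execution times are given in word-times.

TRACK_CELLS = 64


def head_after(current: int, exec_word_times: int, cells: int = TRACK_CELLS) -> int:
    """Cell under the head when the instruction in `current` finishes executing."""
    return (current + 1 + exec_word_times) % cells


def best_next_cell(current: int, exec_word_times: int, used: set[int],
                   cells: int = TRACK_CELLS) -> int:
    """First free cell at or after the head position, so the wait is minimal."""
    target = head_after(current, exec_word_times, cells)
    for step in range(cells):
        cell = (target + step) % cells
        if cell not in used:
            return cell
    raise RuntimeError("drum track is full")


def lay_out(exec_times: list[int], start: int = 0, cells: int = TRACK_CELLS) -> list[int]:
    """Place a straight-line program; returns the cell chosen for each instruction."""
    layout, used = [start], {start}
    for t in exec_times[:-1]:  # the last instruction's time doesn't affect placement
        nxt = best_next_cell(layout[-1], t, used, cells)
        used.add(nxt)
        layout.append(nxt)
    return layout


def total_wait(layout: list[int], exec_times: list[int], cells: int = TRACK_CELLS) -> int:
    """Word-times spent waiting for each next instruction to come around."""
    return sum((nxt - head_after(cur, t, cells)) % cells
               for cur, t, nxt in zip(layout, exec_times, layout[1:]))


times = [3, 5, 2, 4]
optimized = lay_out(times)
print(optimized)                        # [0, 4, 10, 13]
print(total_wait(optimized, times))     # 0
print(total_wait([0, 1, 2, 3], times))  # 182
```

With these made-up execution times, packing the instructions into consecutive cells costs 182 word-times of waiting, almost three full revolutions, while the spread-out layout costs none.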

Required reading for anyone calling themselves a hacker: The Story of Mel

Still not unusual. For example, a while back, Intel was selling CPUs with half the cache disabled. If you sent them $30, they’d give you a code to unlock the other half. There are many examples of the same thing where I work. People did try to hack around the limitations, but it’s almost impossible these days as the features are locked behind fuses on the die. Once the fuse is burnt, you can’t reverse it (without a FIB machine, at least).

There was once a pencil trick.