Do they have server farms all over the world? How do they do it?

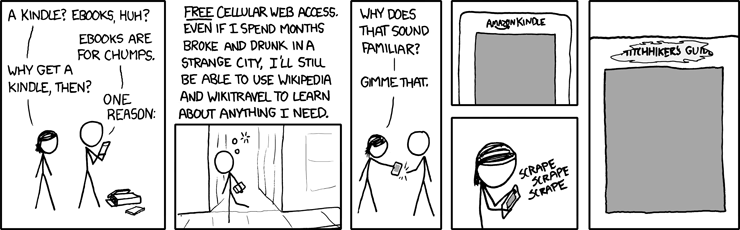

Kindle

I'm happy with my Kindle 2 so far, but if they cut off the free Wikipedia browsing, I plan to show up drunk on Jeff Bezos's lawn and refuse to leave.

Do they have server farms all over the world? How do they do it?

Yes, they do have a bunch of servers all over the world.

Cluster locations:

https://wikitech.wikimedia.org/wiki/Clusters
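One reason to spread clusters across continents is that each reader can be sent to a nearby site. Here is a minimal Python sketch of that idea. It assumes plain great-circle distance decides the routing, which is a simplification: real setups such as GeoDNS or latency-based routing are more involved. The site list and coordinates are hypothetical stand-ins (approximate city locations), not copied from the Clusters page.

```python
import math

# Hypothetical subset of sites with approximate city coordinates (lat, lon).
# Illustrative only; see the Clusters page for the real list.
SITES = {
    "Ashburn": (39.04, -77.49),
    "Dallas": (32.78, -96.80),
    "San Francisco": (37.77, -122.42),
    "Amsterdam": (52.37, 4.90),
    "Singapore": (1.35, 103.82),
}

EARTH_RADIUS_KM = 6371.0


def haversine_km(a, b):
    """Great-circle distance in km between two (lat, lon) points given in degrees."""
    lat1, lon1 = map(math.radians, a)
    lat2, lon2 = map(math.radians, b)
    dlat = lat2 - lat1
    dlon = lon2 - lon1
    h = math.sin(dlat / 2) ** 2 + math.cos(lat1) * math.cos(lat2) * math.sin(dlon / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def nearest_site(reader):
    """Return the name of the site closest to the reader's (lat, lon)."""
    return min(SITES, key=lambda name: haversine_km(reader, SITES[name]))


if __name__ == "__main__":
    print(nearest_site((48.86, 2.35)))    # reader in Paris -> Amsterdam
    print(nearest_site((35.68, 139.69)))  # reader in Tokyo -> Singapore
```

Distance is only a stand-in for "close in network terms"; a production router would also consider which sites are up and how loaded they are.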

As of 20 April 2021, the size of the current version of all articles compressed is about 19.52 GB.

(From here.)

That isn’t including all the photos, video, and audio clips. I don’t know how much they add, but it is probably safe to say that even with all of that it is a relatively trivial amount of data compared to the truly large sites (Google, Facebook, Netflix, etc.). I’d be surprised if it didn’t all fit on a single modern hard drive.

Apparently if you want the entire history of every edit, it does add up to “terabytes” uncompressed, but it doesn’t specify how many:

(The offline, text-and-tables-only version of Wikipedia that I downloaded several years ago was only 10 GB compressed, 20 GB expanded and indexed. I read it with this.)

It is definitely not “big data”. And an off-line version, text only, is really nothing; you could save it to your smartphone. Even if you want all the languages, all the articles, plus user/talk pages, plus the entire history of all of it, including all the images, videos, and other media, you could keep a copy at home, though you might consider budgeting 100 TB to be on the safe side.

I wanted one of these when they were around:

I actually have a Kindle 2, the device Randall Munroe went on about as a permanent Wikipedia device with free data. Unfortunately, the push to encrypt every single site, combined with the fact that encryption protocols change over time, ruined that part of it. But, last I checked, it still gets free data over cellular.

I'm happy with my Kindle 2 so far, but if they cut off the free Wikipedia browsing, I plan to show up drunk on Jeff Bezos's lawn and refuse to leave.

It is definitely not “big data”.

Oh, it definitely is “big data”. Hundreds, maybe even thousands, of scientific articles have been written that explicitly use this term to describe data from Wikipedia.

You can surely draw big data from Wikipedia, as, for instance, this article did (making predictions of the success of an upcoming film based on user activity on Wikipedia). But that doesn’t mean Wikipedia’s own content is big data itself.

I assume there’s a similar answer for Archive.org.

It’s based in San Francisco but simply says it has many servers. The old set were replaced in 2010, which causes me to wonder just how much maintenance a massive server farm requires.

Same thing for Google, Facebook, Amazon data servers, etc.

Exactly.

Slightly large data? There is a lot of it, in human terms. You may need to employ non-trivial algorithms to extract information from it. But the data set fits into your pocket.
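To make the "fits in your pocket" point concrete: a compressed dump can be processed on an ordinary laptop by streaming it rather than loading it all into memory. Below is a minimal Python sketch, assuming a bz2-compressed MediaWiki XML export; `count_pages` and `local_name` are hypothetical helpers written for illustration, not part of any Wikimedia tool, and they only count `<page>` elements.

```python
import bz2
import xml.etree.ElementTree as ET


def local_name(tag):
    """Strip an XML namespace, e.g. '{http://...}page' -> 'page'."""
    return tag.rsplit("}", 1)[-1]


def count_pages(path):
    """Stream a bz2-compressed MediaWiki XML dump and count its <page> elements.

    Hypothetical helper for illustration. The file is decompressed and parsed
    incrementally, so memory use stays small even for a multi-gigabyte dump.
    """
    pages = 0
    with bz2.open(path, "rb") as f:
        context = ET.iterparse(f, events=("start", "end"))
        _event, root = next(context)  # first event: start of the root element
        for event, elem in context:
            if event == "end" and local_name(elem.tag) == "page":
                pages += 1
                # Drop finished pages so the in-memory tree never grows.
                root.clear()
    return pages
```

Usage would be something like `count_pages("enwiki-latest-pages-articles.xml.bz2")`, with the file name depending on which dump you download. Clearing the root after each page is what keeps memory flat; chewing through many gigabytes will still take a while.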

I assume there’s a similar answer for Archive.org.

It’s based in San Francisco but simply says it has many servers. The old set were replaced in 2010, which causes me to wonder just how much maintenance a massive server farm requires.

Same thing for Google, Facebook, Amazon data servers, etc.

Wikipedia, though, is not big enough to require maintaining a server farm. They pay to rent facilities, or receive such services as a donation. The ones maintaining the massive server farms are Equinix, EvoSwitch, CyrusOne, and UnitedLayer.

The Internet Archive has much bigger data (~100 PB), and I believe they hosted it themselves, at least at one point. They somehow manage as a non-profit with modest revenue.

Slightly large data? There is a lot of it, in human terms. You may need to employ non-trivial algorithms to extract information from it. But the data set fits into your pocket.

The concept of “big data” must necessarily change over time, just like the concept of a “supercomputer”. Back when Wikipedia started, a dataset of 2 or 3 or 5 TB definitely counted as big data. Now it is a couple hundred bucks’ worth of storage that you can (probably) buy at your local Wal-Mart.

Reminds me of when I started working on electronic road maps for car navigation systems, back in the mid-90s. We had to split California across two CD-ROMs (remember them?) because it was too big to fit on one. Still, I was amazed that all of Northern Cal, every single street, could fit on one disc.

Nowadays, of course, Google and Apple hold pretty much every street in the world, and far more besides, in their map databases. And it’s not considered truly big data compared to what else they have.

I used to have Street Atlas USA on one CD. Whole country. Roads only, no gas stations, etc.

Here’s some xkcd wisdom about the size of Wikipedia: “Updating a Printed Wikipedia”.

As I see it, the real point of the thought experiment in my cited article is to help us bridge the monumental gap between what constitutes “a lot of data” in a physical, human-readable, human-touchable format and “a lot of data” in modern standard computer-accessible formats.

Storing a complete printed Wikipedia would require a large warehouse. Storing a complete computerized Wikipedia requires a large pocket. That’s hard to wrap our minds around.

Fascinating. Thank you all. I had no idea how compact the storage system has become.

I will go back to running my programs on my punch cards now.

Hundreds, maybe even thousands, of scientific articles have been written that explicitly use this term to describe data from Wikipedia.

And hundreds, maybe even thousands, of scientific articles (and marketers, more importantly) can’t even agree on what is meant by “big data”.

Back to the OP.

As @DPRK and @Darren_Garrison noted, the volume of data isn’t all that big. The primary reason they need hundreds of servers is the number of people looking at that data. A few years ago they were already serving over 600 million page views per day. I think the primary backend DB is still MariaDB, which is just a fork of MySQL, and a single database server is going to have trouble keeping up with tens of thousands of simultaneous queries.
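Some back-of-the-envelope arithmetic on that 600 million page views per day figure, as a rough Python sketch. The 3x peak factor and the 1,000 queries per second one database server can handle are hypothetical round numbers chosen for illustration, not Wikimedia figures.

```python
import math

PAGE_VIEWS_PER_DAY = 600_000_000  # figure quoted in the post
SECONDS_PER_DAY = 24 * 60 * 60

# Hypothetical assumptions, for illustration only.
PEAK_FACTOR = 3          # busiest moments vs. the daily average
PER_SERVER_RPS = 1_000   # queries/second one database server can comfortably answer


def average_rate(views_per_day):
    """Average requests per second if traffic were spread evenly over a day."""
    return views_per_day / SECONDS_PER_DAY


def servers_needed(peak_rps, per_server_rps):
    """Smallest whole number of servers whose combined capacity covers peak_rps."""
    return math.ceil(peak_rps / per_server_rps)


avg = average_rate(PAGE_VIEWS_PER_DAY)
peak = avg * PEAK_FACTOR
print(f"average: {avg:,.0f} req/s, peak: {peak:,.0f} req/s")
print("servers if every view hit the database:", servers_needed(peak, PER_SERVER_RPS))
```

That works out to roughly 6,944 requests per second on average, about 20,833 at the assumed peak, and 21 servers. Big sites usually put caches in front of the database so most page views never reach it, so treat this as a worst case rather than a real server count.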