I realized an hour or so ago that I have a very poor understanding of how sound waves work: how they spread out, how they react to surfaces, etc. If sound waves ran into a light pole, for instance, would they deflect off it? Is there a simple way for me to think about this?

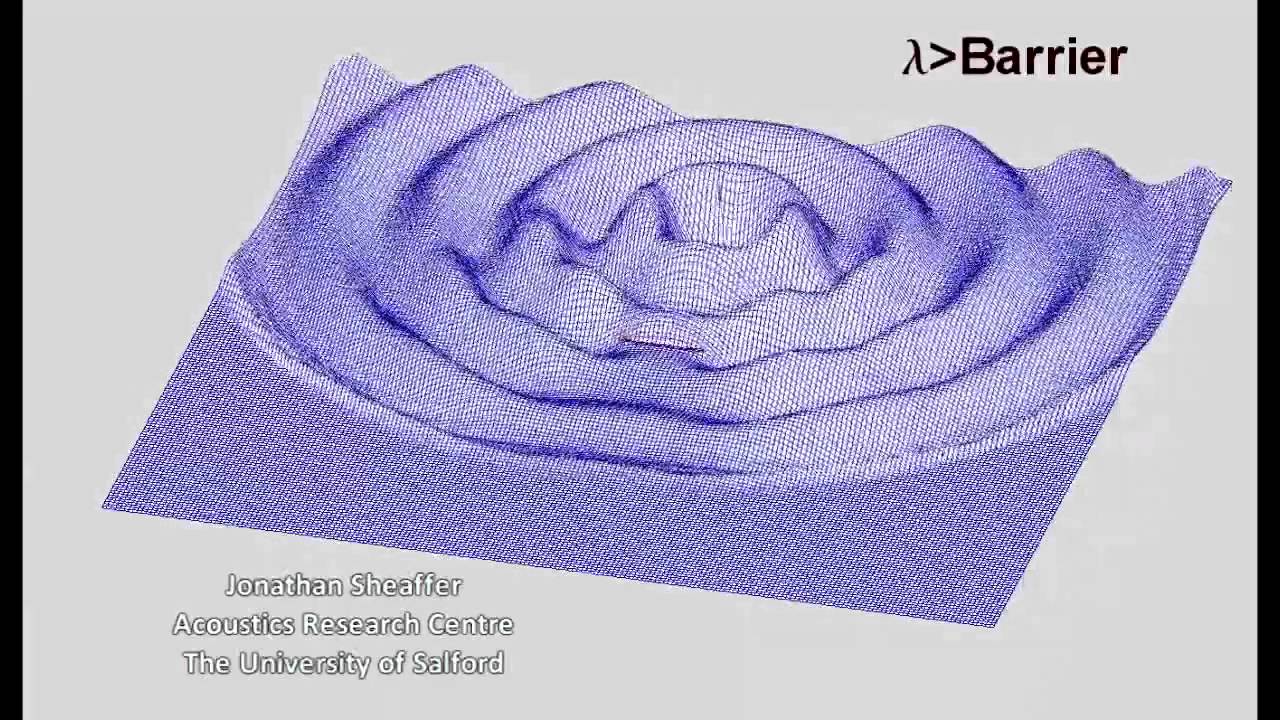

Sound waves propagate via pressure changes in a medium. In your light pole scenario, the waves could get around the pole by diffraction. If the wavelength of the sound is much larger than the barrier, the wave will hardly be affected.

Here is one simple way to think about it:

You can imagine every point on a wavefront as the source of a new spherical wave (Huygens' principle).

In the simulation video above you can see the wave reflect from the barrier and interfere with the incoming wave. A round pole will also reflect the wave, but each point on the pole reflects it in a different direction, so the reflected wave spreads out much more widely.
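The Huygens picture can be turned into a small numerical experiment. This sketch is not from the thread; the function names, the 200 sample points, and the 1000-wavelength viewing distance are assumptions chosen for illustration. It adds up wavelets from points spread across an opening and compares the intensity 30° off-axis with the intensity straight ahead. An opening much narrower than the wavelength radiates almost equally in all directions, while one ten wavelengths wide produces a narrow beam: the same size-versus-wavelength comparison that decides how much a light pole disturbs a sound wave.

```python
import cmath
import math


def huygens_intensity(aperture_width: float, angle_deg: float, wavelength: float = 1.0,
                      distance: float = 1000.0, points: int = 200) -> float:
    """Intensity at a distant point, summing wavelets from points across an opening.

    Each point of the wavefront filling the opening is treated as a source of a
    spherical wavelet exp(i k r) / r (Huygens' principle), and the wavelets are
    added as complex phasors. Lengths are in units of the wavelength by default.
    """
    k = 2.0 * math.pi / wavelength
    theta = math.radians(angle_deg)
    # Observation point at `distance` from the centre of the opening, at angle theta.
    ox, oy = distance * math.sin(theta), distance * math.cos(theta)
    total = 0j
    for i in range(points):
        x = -aperture_width / 2.0 + aperture_width * i / (points - 1)
        r = math.hypot(ox - x, oy)
        total += cmath.exp(1j * k * r) / r
    return abs(total) ** 2


def relative_spread(aperture_width: float, angle_deg: float) -> float:
    """Intensity at angle_deg divided by the straight-ahead intensity."""
    return huygens_intensity(aperture_width, angle_deg) / huygens_intensity(aperture_width, 0.0)


for width in (0.1, 1.0, 10.0):  # opening width in wavelengths
    print(f"width {width:>4} wavelengths: intensity at 30 deg = "
          f"{relative_spread(width, 30.0):.3f} of on-axis")
```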

[Moderating]

Moving from IMHO to GQ, unless @What_Exit beats me to it; I think we were both looking at it at the same time.

This is kind of how I imagine it.

We did this in junior school. Throw a stone into a pond and watch the way the ripples behave. They are a reasonable simulation of the way sound waves behave.

One thing that makes it difficult to reconcile theory with experience for sound is the huge range of wavelengths involved.

Humans hear roughly 20 hertz to 20 kilohertz. That is a range of wavelengths from about 60 feet down to less than an inch. The result is a huge variation in the effects different audible sounds can be subject to. High frequencies will reflect off a lamppost and diffract around its edges. Lower frequencies will pass by the lamppost essentially without noticing it.
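As a quick check of those figures, here is a minimal Python sketch of λ = c/f, assuming a speed of sound of 343 m/s (dry air at about 20 °C); the constant and function names are illustrative, not from the thread. It gives about 17 m (56 ft) at 20 Hz and about 17 mm (0.68 in) at 20 kHz, in line with the range above.

```python
# Speed of sound in dry air at about 20 °C (assumed value, m/s).
SPEED_OF_SOUND_AIR = 343.0
METRES_PER_FOOT = 0.3048
METRES_PER_INCH = 0.0254


def wavelength_m(frequency_hz: float, speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Wavelength in metres: lambda = c / f."""
    return speed / frequency_hz


for f in (20.0, 1_000.0, 20_000.0):
    lam = wavelength_m(f)
    print(f"{f:>8.0f} Hz: {lam:8.4f} m = {lam / METRES_PER_FOOT:6.2f} ft"
          f" = {lam / METRES_PER_INCH:7.2f} in")
```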

Loudspeakers have to cope with this, which is why we see multiple drivers, each suited to a different regime of sound generation. Moreover, the wavelengths of mid frequencies are similar to the size of loudspeakers, which results in all manner of evil issues.

Rooms are subject to different effects in different audible frequency ranges. Short wavelengths are easier to absorb; long wavelengths yield all manner of nodes and antinodes in a room, making bass reproduction a mess.
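A minimal sketch of where those bass nodes and antinodes come from: between two parallel walls a distance L apart, standing waves (axial modes) form at f_n = n c / (2L). The room dimensions below are hypothetical, c = 343 m/s is assumed, and tangential and oblique modes are ignored.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed room temperature of about 20 °C


def axial_modes(length_m: float, count: int = 4,
                speed: float = SPEED_OF_SOUND_AIR) -> list[float]:
    """First `count` axial-mode frequencies f_n = n * c / (2 * L) between two parallel walls."""
    return [n * speed / (2.0 * length_m) for n in range(1, count + 1)]


# Hypothetical 5 m x 4 m x 2.5 m room.
room = {"length": 5.0, "width": 4.0, "height": 2.5}
for name, dim in room.items():
    modes = ", ".join(f"{f:.1f}" for f in axial_modes(dim))
    print(f"{name:>6} ({dim} m): {modes} Hz")
```

For this made-up room the lowest modes fall at a few tens of hertz and sit tens of hertz apart, so individual resonances stand out in the bass; at higher frequencies the modes (counting tangential and oblique ones too) crowd together and the response evens out.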

This all makes acoustic design a miserable pursuit.

There’s a lot of understanding developed about how light waves interact with objects. Light can bounce off objects that are much larger than its wavelength (or penetrate them, or be turned to heat, depending on the object). Objects much smaller than the wavelength do very little. Objects around the wavelength in size, from maybe 1/10 to 10 times the wavelength, do interesting stuff. Also, if you observe from far enough away, diffraction can be very important even for big objects. For a distant viewer, an asteroid, for example, blocks twice as much light as its profile does, because additional light is diffracted around it. But from near the asteroid, it’s just a plain and simple shadow.

The technology of detecting airborne particles with laser beams has built upon all this tremendously.

There’s Rayleigh scattering and Mie scattering, if you’re interested.
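The rough 1/10-to-10 rule of thumb from the light discussion can be applied to sound as well. This hypothetical Python helper classifies an object by the ratio of its size to the wavelength. The thresholds are the thread's rough ones rather than sharp physical boundaries, the "Rayleigh-like" and "Mie-like" labels are only analogies, and the 0.2 m lamppost diameter and c = 343 m/s are assumed values.

```python
SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed


def scattering_regime(object_size_m: float, frequency_hz: float,
                      speed: float = SPEED_OF_SOUND_AIR) -> str:
    """Rough regime from the size/wavelength ratio, using the 1/10-to-10 rule of thumb."""
    ratio = object_size_m / (speed / frequency_hz)
    if ratio < 0.1:
        return "small: wave barely notices it (Rayleigh-like)"
    if ratio <= 10.0:
        return "comparable: strong diffraction and resonance effects (Mie-like)"
    return "large: mostly reflection and shadowing (geometric)"


POLE_DIAMETER_M = 0.2  # hypothetical lamppost diameter
for f in (100.0, 1_000.0, 20_000.0):
    print(f"{f:>7.0f} Hz -> {scattering_regime(POLE_DIAMETER_M, f)}")
```

With those assumptions the same pole is nearly invisible at 100 Hz, diffracts strongly at 1 kHz, and behaves mostly geometrically at 20 kHz, which matches the lamppost description earlier in the thread.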

I think sound and light share many wave behaviors, though there are differences too. No doubt we have built more technology around light interacting with objects than for sound interacting.

We had an odd contraption in grade school: a shallow clear pan (think baking sheet) filled with maybe 1/4" of water and raised about 7" above the table it sat on. A vibrating mechanism, to which you could add attachments, had its tip set in the water to create waves/ripples. With a light source above the pan you could see the wave patterns on the table below, then set various objects in the water to see how the waves moved around them.

The most notable fundamental differences are that light doesn’t (necessarily) need a medium, so motion of a medium is irrelevant; and that light is a transverse wave (and hence has polarization), while sound in a fluid such as air is longitudinal (and hence does not).

There are also practical differences from the fact that most electromagnetic waves have wavelengths much shorter than most sound waves. It’s easy to end up with diffraction effects with sound, just from everyday objects, but you usually have to make a special effort to see diffraction effects with light.

Agreed. This is a tidy examination of the limitations of light as a proxy for sound in understanding how waves travel.

I remember reading about sound lenses made by inflating variously shaped balloons with different gases, such as helium and (I think) tungsten hexafluoride.
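A balloon works as a lens because sound travels at a different speed in the gas inside. For an ideal gas, c = √(γRT/M), which is the same as c = √(γP/ρ) rewritten with the ideal gas law. The sketch below uses approximate room-temperature values for γ and molar mass; carbon dioxide stands in for tungsten hexafluoride only because its properties are easy to state, and n = c_air / c_gas is the acoustic refractive index relative to the surrounding air.

```python
import math

R = 8.314  # J/(mol K), molar gas constant
T = 293.15  # K, assumed 20 °C

# (heat capacity ratio gamma, molar mass in kg/mol); approximate room-temperature values.
GASES = {
    "air": (1.40, 0.02897),
    "helium": (5.0 / 3.0, 0.004003),
    "carbon dioxide": (1.29, 0.04401),
}


def speed_of_sound(gamma: float, molar_mass: float, temperature: float = T) -> float:
    """Ideal-gas speed of sound c = sqrt(gamma * R * T / M)."""
    return math.sqrt(gamma * R * temperature / molar_mass)


c_air = speed_of_sound(*GASES["air"])
for name, (gamma, molar_mass) in GASES.items():
    c = speed_of_sound(gamma, molar_mass)
    n = c_air / c  # acoustic refractive index relative to air
    print(f"{name:>15}: c = {c:6.1f} m/s, n = {n:.3f}")
```

A gas with n > 1 (slower sound, like carbon dioxide) makes a convex balloon converge sound, much like a glass lens; helium, with n < 1, makes it diverge.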

This makes me want to go make a zone plate for sound…
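For anyone tempted: a Fresnel zone plate focuses by blocking every other ring, with ring boundaries spaced so that each is half a wavelength further from the focus than the last. This Python sketch computes those radii for a hypothetical 2 kHz design with a 1 m focal length, assuming c = 343 m/s and a plane incoming wave; the function name and numbers are illustrative.

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed 20 °C


def zone_radii(frequency_hz: float, focal_length_m: float, zones: int,
               speed: float = SPEED_OF_SOUND_AIR) -> list[float]:
    """Radii r_n of the Fresnel zone boundaries for a plate that focuses a plane wave.

    Each boundary is half a wavelength further from the focus than the previous one:
    sqrt(r_n**2 + f**2) = f + n * lambda / 2, hence r_n**2 = n*lambda*f + (n*lambda/2)**2.
    """
    lam = speed / frequency_hz
    return [math.sqrt(n * lam * focal_length_m + (n * lam / 2.0) ** 2)
            for n in range(1, zones + 1)]


# Hypothetical design: 2 kHz tone focused 1 m behind the plate.
radii = zone_radii(2_000.0, 1.0, 6)
for n, r in enumerate(radii, start=1):
    # Alternate zones (e.g. every even-numbered one) would be solid, sound-blocking rings.
    print(f"zone {n}: outer radius {r:.3f} m")
```

At 2 kHz the wavelength is about 17 cm, so the rings come out on the order of 10 to 20 cm wide: a big but very buildable object.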

It is easy for me to imagine sound emanating from a source and rapidly dissipating, but then I have to square that with how I actually experience sound. Sound seems to carry further than I can mentally reconcile, which makes me feel as if it approaches us in a funnel-shaped wave from all directions.
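One reason sound seems to carry further than intuition suggests is that its level falls off logarithmically. For an idealized point source in free field, intensity follows the inverse-square law, so the sound pressure level drops by 20 log₁₀(r/r₀) dB, about 6 dB per doubling of distance. A minimal sketch, assuming a hypothetical source measured at 100 dB SPL at 1 m and ignoring air absorption, ground reflections, and wind:

```python
import math


def spl_at_distance(spl_ref_db: float, ref_distance_m: float, distance_m: float) -> float:
    """Free-field point source: level falls by 20*log10(r/r_ref) dB (about 6 dB per doubling)."""
    return spl_ref_db - 20.0 * math.log10(distance_m / ref_distance_m)


# Hypothetical source measured at 100 dB SPL at 1 m.
for r in (1, 2, 4, 10, 100, 1000):
    print(f"{r:>5} m: {spl_at_distance(100.0, 1.0, r):5.1f} dB")
```

Even at 100 m this made-up source is still around 60 dB, roughly the level of normal conversation.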

I have heard of directional sound beams or fields using arrays, parabolic reflectors, ultrasonics, etc. If you spend enough money on speakers and signal processors, you can certainly sculpt the sound to a great extent.

Just look at any horn-loaded speaker. It is all about the evolving sound wave. It is something of a black art, as boundary conditions dominate, so much so that the standard modelling approach used now (the boundary element method) calculates the system only at its boundaries. As long as you don’t exceed the limits where the air ceases to behave nicely (air isn’t linear, so the pressure levels in a horn can cause problems), this works well.

Horns can be highly directional. They also act to match the impedance between free air and the driver, raising the efficiency of the system dramatically. This allows for seriously loud systems.

In the middle ground are what are termed waveguides. These provide directional control of the sound, usually to give a wide, even sound field. They don’t provide the impedance-matching function of a proper horn.

A huge part of public address system design is about controlling the sound field. Horns and line arrays are the basic elements in design.

If you look at any modern large venue or stadium-sized sound system, you will see flown line arrays. They have a characteristic J shape that very carefully sculpts the radiation pattern from what is a long line emitter. Each box in the flown array is typically a set of rectangular horns stacked in a vertical line, with mid and mid-bass drivers in the same boxes. The entire thing acts as a line sound emitter for the frequency range it is fed. Often you will see a separate upper-bass bin at the top of the flown array. Deep bass reproduction requires separate sub-bass systems, since their enclosures are far too big to fly. What you need depends on the music.

Can you say more about this tantalizing bit?

Are you saying there’s a deviation from the ideal gas law that’s big enough to spoil the sound?

Air pressure can’t go negative. Do horns ever get to that regime? And then clip, or what?

The ideal gas law alone can modify the sound.

Most acoustics are adiabatic, so that makes life easier. (There are isothermal systems; the ones I know of are in damping, where very loose, high-surface-area stuffing can create a locally isothermal enclosure that, among other things, makes the enclosure look bigger.)

The adiabatic curve is $PV^\gamma = \text{const}$, where $\gamma$ is the adiabatic index (heat capacity ratio) of the gas, $\gamma = \frac{C_p}{C_v}$, with $C_p$ the molar specific heat at constant pressure and $C_v$ the molar specific heat at constant volume.

As a relation between pressure and density ($P \propto \rho^\gamma$), that isn’t linear unless $\gamma$ is unity, which it tends not to be. For small changes of $V$ it is close enough. But in a horn driver, the volume excursions in the limited space of the driver enclosure are large enough to produce a noticeable nonlinearity.
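To put numbers on the nonlinearity, this sketch compares the adiabatic relation P V^γ = const with its small-signal linearization for equal compressions and expansions of a trapped volume, assuming γ = 1.4 for air; the function names are illustrative.

```python
GAMMA_AIR = 1.4  # heat capacity ratio of air (approximate)


def adiabatic_pressure_ratio(volume_ratio: float, gamma: float = GAMMA_AIR) -> float:
    """P/P0 after an adiabatic change to V/V0 = volume_ratio, from P * V**gamma = const."""
    return volume_ratio ** (-gamma)


def linearized_pressure_ratio(volume_ratio: float, gamma: float = GAMMA_AIR) -> float:
    """Small-signal approximation: P/P0 ~ 1 - gamma * (V/V0 - 1)."""
    return 1.0 - gamma * (volume_ratio - 1.0)


for excursion in (0.001, 0.01, 0.1):
    rise = adiabatic_pressure_ratio(1.0 - excursion) - 1.0  # compression
    drop = adiabatic_pressure_ratio(1.0 + excursion) - 1.0  # equal expansion
    linear = linearized_pressure_ratio(1.0 - excursion) - 1.0
    print(f"dV/V0 = ±{excursion}: {rise:+.5f} / {drop:+.5f} P0 (linearized: ±{linear:.5f} P0)")
```

For a 0.1 % volume change the two agree closely, but at 10 % the compression raises the pressure noticeably more than the matching expansion lowers it. Applied to a sinusoidal excursion, that asymmetry is what generates harmonic distortion.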

Next, the speed of sound depends on pressure (strictly, on $P/\rho$, which rises during an adiabatic compression):

$$c = \sqrt{\gamma \frac{P}{\rho}}$$

So for an increase in pressure the speed of sound is slightly higher, and on a rarefaction slightly lower. The effect is small, but for loud enough noises, or long enough distances, it mounts up. The peaks run slightly faster than the troughs, and a sinusoidal waveform becomes more sawtooth-like. This means added harmonics.
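A rough numerical sketch of the steepening, assuming air with γ = 1.4, ρ₀ = 1.204 kg/m³ and P₀ = 101 325 Pa. `local_speed` applies c = √(γP/ρ) with adiabatic density, which works out to c/c₀ = (P/P₀)^((γ−1)/(2γ)). `shock_distance` uses the textbook lossless plane-wave estimate ρ₀c₀³/(βωp) with β = (γ+1)/2, which also counts the particle velocity carrying the peaks forward; it ignores spreading and absorption, so real distances are longer. Names and test levels are illustrative.

```python
import math

GAMMA_AIR = 1.4
P0 = 101_325.0  # Pa, standard atmosphere
RHO0 = 1.204  # kg/m^3, air at about 20 °C (assumed)
P_REF = 20e-6  # Pa, reference pressure for dB SPL


def speed_of_sound(pressure: float = P0, density: float = RHO0,
                   gamma: float = GAMMA_AIR) -> float:
    """c = sqrt(gamma * P / rho)."""
    return math.sqrt(gamma * pressure / density)


C0 = speed_of_sound()


def local_speed(acoustic_pressure: float, gamma: float = GAMMA_AIR) -> float:
    """Sound speed where the total pressure is P0 + p, for adiabatic compression.

    rho/rho0 = (P/P0)**(1/gamma), so c/c0 = (P/P0)**((gamma - 1) / (2 * gamma)).
    Only the equation-of-state effect is included, not the particle velocity.
    """
    return C0 * ((P0 + acoustic_pressure) / P0) ** ((gamma - 1.0) / (2.0 * gamma))


def shock_distance(spl_db: float, frequency_hz: float, gamma: float = GAMMA_AIR) -> float:
    """Lossless plane-wave shock formation distance rho0 * c0**3 / (beta * omega * p_peak).

    beta = (gamma + 1) / 2 combines the local-speed effect above with convection by
    the particle velocity; spreading and air absorption are ignored.
    """
    p_peak = math.sqrt(2.0) * P_REF * 10.0 ** (spl_db / 20.0)
    beta = (gamma + 1.0) / 2.0
    omega = 2.0 * math.pi * frequency_hz
    return RHO0 * C0**3 / (beta * omega * p_peak)


for spl in (120.0, 140.0, 160.0):
    p_peak = math.sqrt(2.0) * P_REF * 10.0 ** (spl / 20.0)
    spread = local_speed(p_peak) - local_speed(-p_peak)
    print(f"{spl:.0f} dB: peak-trough speed difference {spread:.4f} m/s, "
          f"1 kHz shock distance ~{shock_distance(spl, 1_000.0):,.1f} m")
```

Under these idealizations a 140 dB, 1 kHz plane wave would steepen into a shock within roughly 20 to 25 m, while at 120 dB it would need a couple of hundred metres, matching the "loud enough or far enough" remark.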

I don’t think any device for music acoustics ever gets to the point of clipping the air. But an overpressure of one atmosphere would demand a vacuum for the matching trough of a symmetric waveform. That won’t happen. Offensive weapons are another matter. The infrasonic systems that some countries have played with get closer to clipping, I think.
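For a sense of scale, this sketch finds the sound pressure level of an undistorted sinusoid whose peak swing equals one atmosphere, so that its trough would just touch vacuum. It assumes the usual 20 µPa reference and quotes the RMS level; quoting the peak pressure instead gives about 194 dB, the figure often cited for this limit.

```python
import math

P_ATM = 101_325.0  # Pa, standard atmosphere
P_REF = 20e-6  # Pa, dB SPL reference


def spl_db(rms_pressure_pa: float) -> float:
    """Sound pressure level in dB re 20 uPa."""
    return 20.0 * math.log10(rms_pressure_pa / P_REF)


# Undistorted sinusoid whose trough just reaches vacuum: peak amplitude = 1 atm.
limit = spl_db(P_ATM / math.sqrt(2.0))
print(f"Sinusoid with a 1 atm peak: {limit:.1f} dB SPL")
```

The result, a little over 191 dB SPL, is far beyond any music system.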

That makes sense and is most fascinating!

And I’ve heard of the stuffing bit. They built a little adsorbent into cellphones, the mics I think, for this purpose.

Thank you!

The Leslie speaker is a good example of the effect of motion on sound. Its rotating horns and drum take advantage of the Doppler effect to produce a tremolo/vibrato sound. It’s responsible for giving the Hammond B3 organ its cool, deep, distinctive sound. These days the effect is often emulated electronically, but sims don’t have the complexity, depth, and acoustic reflections of a real mechanical Leslie.
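The size of the pitch wobble can be estimated with the moving-source Doppler formula f′ = f c/(c − v), where v is the speed of the horn mouth toward the listener. The rotor radius and rotation rate below are hypothetical round numbers, not Leslie specifications; c = 343 m/s is assumed, and amplitude changes and room reflections are ignored.

```python
import math

SPEED_OF_SOUND_AIR = 343.0  # m/s, assumed


def doppler_moving_source(frequency_hz: float, source_speed: float,
                          speed: float = SPEED_OF_SOUND_AIR) -> float:
    """Frequency heard by a stationary listener; source_speed > 0 means moving toward them."""
    return frequency_hz * speed / (speed - source_speed)


# Hypothetical rotor: mouth 0.15 m from the axis, spinning 6.7 times per second.
radius_m = 0.15
rotations_per_s = 6.7
tip_speed = 2.0 * math.pi * radius_m * rotations_per_s

note = 440.0
high = doppler_moving_source(note, tip_speed)   # horn approaching
low = doppler_moving_source(note, -tip_speed)   # horn receding
cents = 1200.0 * math.log2(high / low)
print(f"tip speed {tip_speed:.2f} m/s: {low:.1f} Hz to {high:.1f} Hz "
      f"({cents:.0f} cents peak to peak)")
```

With these made-up numbers, a 440 Hz note swings by roughly 2 % either side as the horn approaches and recedes.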

It is possible to get an illustration of the differing speeds of sound in different media. Years ago I watched railway workers working on the tracks some distance from the station. When they struck the track with a hammer, the sound travelled to my position much faster through the steel than through the air.
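A back-of-the-envelope version of that observation, treating the rail as a long thin steel bar in which longitudinal waves travel at √(E/ρ). The steel properties are typical textbook values rather than figures for any particular rail, c = 343 m/s is assumed for air, and the distances are hypothetical.

```python
import math

SPEED_IN_AIR = 343.0  # m/s, assumed 20 °C
STEEL_YOUNGS_MODULUS = 200e9  # Pa, typical textbook value (assumed)
STEEL_DENSITY = 7850.0  # kg/m^3, typical textbook value (assumed)

# Longitudinal waves in a long thin bar travel at sqrt(E / rho).
speed_in_steel = math.sqrt(STEEL_YOUNGS_MODULUS / STEEL_DENSITY)


def arrival_gap(distance_m: float) -> float:
    """Seconds between the sound arriving through the rail and through the air."""
    return distance_m / SPEED_IN_AIR - distance_m / speed_in_steel


for d in (100.0, 500.0, 1_000.0):  # hypothetical distances to the work gang
    print(f"{d:>6.0f} m: rail {d / speed_in_steel:.3f} s, air {d / SPEED_IN_AIR:.3f} s, "
          f"gap {arrival_gap(d):.3f} s")
```

With these assumptions sound in the steel moves at roughly 5 km/s, about fifteen times faster than in air, so at 500 m the clang through the rail arrives well over a second before the airborne one.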

And in general, in physics, when you have an absolute hard limit like this, it’s not going to be an effect that shows up abruptly right at that limit. Rather, as you get close to that limit, there’s going to be some new phenomenon that becomes relevant that makes it harder and harder to approach that limit. So even though, say, an overpressure of 0.9 atmospheres could theoretically allow for a symmetric underpressure, it probably won’t.

(but I’d never thought about the effects of pressure on sound speed, and what that would do to waveforms: Thanks, that’s very interesting!)