If you wanted selfies of the mirror side, good luck. It’s in complete blackness, illuminated only by starlight. You’d need a big lens and long exposures. A tiny camera would show nothing but blackness and noise.

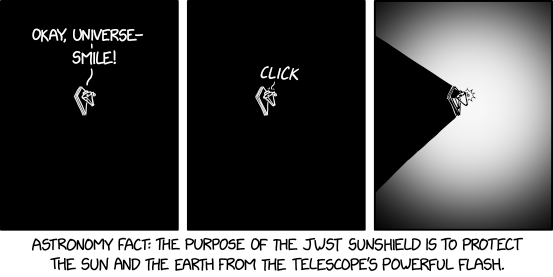

What, has NASA never heard of a flash bulb?

Since it would be pointing back at Earth, the fear is that it would blind Hubble and other Earth-based telescopes. /j

Wouldn’t conservation of angular momentum force it to speed up at peri(L2)ion?

Does it have angular momentum with regard to L2? It’s in a solar orbit that takes it above/below and ahead of/behind L2, but I’m not sure the “orbit” around L2 conforms to Kepler’s laws.

And they might or might not be spectacular. Compare the Pillars of Creation images taken by HST in visible light and infrared.

The latter might be more important scientifically – note how stars hidden in the dusty nebula are visible – but it just doesn’t pop.

Well yeah, certain molecular clouds become less spectacular in infrared, but other objects become more so.

JWST has about the same angular resolution as Hubble, btw. It needs the bigger mirror to get the same resolution at longer wavelengths. But it will have significantly better resolution than those pictures Spitzer took. Spitzer’s mirror was only 0.85 m.
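For anyone who wants to put rough numbers on the mirror-size point above, here is a minimal sketch using the Rayleigh criterion (θ ≈ 1.22 λ/D). The mirror diameters are the published ones; the observing wavelengths are illustrative picks of mine, not anything stated in the thread, and real instruments have other limits besides diffraction.

```python
import math

# Conversion factor from radians to arcseconds.
ARCSEC_PER_RADIAN = 180 / math.pi * 3600


def diffraction_limit_arcsec(wavelength_m, aperture_m):
    """Rayleigh criterion, theta = 1.22 * lambda / D, returned in arcseconds."""
    return 1.22 * wavelength_m / aperture_m * ARCSEC_PER_RADIAN


# Primary mirror diameters in metres.
HUBBLE_D = 2.4
JWST_D = 6.5
SPITZER_D = 0.85

# Assumed (illustrative) wavelengths in metres.
VISIBLE = 0.55e-6   # green light, for Hubble
NEAR_IR = 2.0e-6    # near-infrared, for JWST
MID_IR = 3.6e-6     # a wavelength both JWST and Spitzer could observe

hubble_visible = diffraction_limit_arcsec(VISIBLE, HUBBLE_D)
jwst_near_ir = diffraction_limit_arcsec(NEAR_IR, JWST_D)
jwst_mid_ir = diffraction_limit_arcsec(MID_IR, JWST_D)
spitzer_mid_ir = diffraction_limit_arcsec(MID_IR, SPITZER_D)

print(f"Hubble  @ 0.55 um: {hubble_visible:.3f} arcsec")
print(f"JWST    @ 2.0 um:  {jwst_near_ir:.3f} arcsec")
print(f"JWST    @ 3.6 um:  {jwst_mid_ir:.3f} arcsec")
print(f"Spitzer @ 3.6 um:  {spitzer_mid_ir:.3f} arcsec")
```

Under those assumptions Hubble in visible light and JWST in the near infrared land in the same ballpark (roughly 0.06 and 0.08 arcseconds), while at a shared wavelength JWST beats Spitzer by the ratio of the mirror diameters, a bit under 8x.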

Aren’t infrared images always falsely coloured?

Almost every astronomical image you see has colors chosen in post processing.

Then the question is, how much of the difference between the examples in @DesertDog’s post is due to the telescope and how much is due to the decisions made by a human being in whatever the NASA equivalent of Photoshop is?

More context:

Basically Hubble shoots multiple black and white images through filters that isolate different wavelengths. Those multiple images are “developed” using colors chosen for different reasons, either for a science purpose or aesthetic reasons or both.

The colors are entirely artificial–even for visible light–but the spatial data is not. The data for those cool, dim stars in the infrared image simply isn’t there in visible light. No amount of postprocessing could extract the detail in the second image from the data contained in the first.
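A toy sketch of the process described above, in case it helps: three black-and-white frames, each assigned a display color, blended into one RGB image. The tiny 2x2 frames and both palettes are made up for illustration, and this is not the actual pipeline used for Hubble or JWST images.

```python
def normalize(frame):
    """Scale a 2-D grayscale frame (a list of rows) into the 0..1 range."""
    flat = [value for row in frame for value in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid dividing by zero on a flat frame
    return [[(value - lo) / span for value in row] for row in frame]


def composite(frames, colors):
    """Blend grayscale frames into one RGB image, one display color per filter."""
    scaled = [normalize(frame) for frame in frames]
    height, width = len(scaled[0]), len(scaled[0][0])
    image = []
    for y in range(height):
        row = []
        for x in range(width):
            # Each channel is the sum of every frame's brightness times that
            # frame's assigned color, clipped at full intensity.
            pixel = tuple(
                min(1.0, sum(s[y][x] * c[ch] for s, c in zip(scaled, colors)))
                for ch in range(3)
            )
            row.append(pixel)
        image.append(row)
    return image


# Hypothetical 2x2 exposures through three different filters.
long_wave = [[0.0, 10.0], [5.0, 0.0]]
mid_wave = [[0.0, 0.0], [4.0, 8.0]]
short_wave = [[2.0, 0.0], [1.0, 0.0]]
frames = [long_wave, mid_wave, short_wave]

# Two arbitrary color assignments for the same three frames.
palette_a = [(1, 0, 0), (0, 1, 0), (0, 0, 1)]
palette_b = [(1.0, 0.6, 0.0), (0.0, 0.8, 0.8), (0.5, 0.0, 1.0)]

image_a = composite(frames, palette_a)
image_b = composite(frames, palette_b)
```

The two outputs have different colors but exactly the same spatial structure, since both come from the same three frames. Swapping the palette changes how it looks, not what was recorded.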

Thanks for that link.

It looks like the visible spectrum telescope has all the data it needs to create a true colour image, so the result is not entirely artificial: it is a composite made from different filters. So it’s not what a human eye might see if looking through an incredibly powerful optical telescope, but it’s not an invention either. If the JWST cannot produce false colour using a combination of b&w via filters then I wonder how it will be coloured? You could certainly colour that IR image of the Pillars of Creation in a way that made them look nearly as dramatic and distinctive as the visible light image without obscuring the stars behind it.

Anyway, not sure if this bit of the thread is a hijack so apologies. The JWST is in orbit now so I hope we can get on with discussing how it works and then eventually analysing the images it gifts us!

When you’re looking at a visible-light image taken through visible-light filters, the colors chosen to represent those filters might vaguely resemble the actual spectrum passed by the filters, so you might get an approximation of true color, but there’s no reason they have to be chosen that way. And even if you represented each filter by the color white light would appear to the eyes as seen through that filter, you still won’t get a true color image, because you’re losing a lot of spectral information: If, for instance, none of the filters used were transparent at the neon lines, then a neon sign seen through those filters would look black through all of them. The only way you could get a true color image would be if you had three filters that corresponded to the color response of the human eye. The camera in your phone has filters like that, because its primary purpose is to take pictures that look like the scene did to your naked eye, but there’s no reason to equip a scientific instrument with such filters.

When they’re choosing what false colors to use for infrared pictures, they won’t have the option of approximating true color. Which just means they’ll pick some other way of choosing false colors, likely based just on what looks prettiest. The color pictures have no scientific value, anyway: All science will be done on the raw data, recorded in however many channels you used filters for (which for many objects, might well just be a single filter).

Right, for instance, we have lots of “pictures” in the x-ray spectrum, nothing that corresponds to visible color, but they still color them up pretty.

The “picture” of the black hole a while back was done in radio waves; once again, the color was completely added.

Yes and no. They are not the data that scientists are looking for, for whatever it is they are trying to prove/disprove, but the pretty pictures do make the public more willing to foot the bill for these instruments.

I had a friend who was a dedicated amateur astronomer with an impressively large telescope. As he once said, you never see through the eyepiece the kinds of impressively colourful images of nebulas, galaxies, etc. that are published in magazines. He had sufficiently good equipment and astrophotography capabilities that he was able to produce some pretty cool images himself, but at a minimum they were the result of long exposures that captured information far beyond the capabilities of the naked eye, and sometimes involved post-processing as well.

That’s true… but you can still catch some quite spectacular views through an eyepiece.

Remembers fondly a night in the Lewis and Clark National Forest, when I had custody of the club’s 20 inch reflector…

Post / Username match-up!