Ha! Well played.

I needed a chuckle.

Honestly, I don’t know how Mr pumpkin head is even a viable candidate in any election anywhere let alone poised to win one of the most powerful positions in the world, but be that as it may, I’ll take leading in popular vote by better than a 3-1 margin any day.

A surprisingly large percentage of the electorate are dual citizens, so at least a few of your 100.

I’ve said it elsewhere, but maybe it’s more appropriate here:

Yes, polls are “broken”, because this is junk science.

We’re collectively gnashing our teeth over a small change in percentages, when if we took a step back and looked at the whole we’d see that this is hardly reliable.

Let’s see…

Here’s a national poll showing that the candidates are deadlocked at 48% each. Oh no!!

And it’s based on a survey of…checks notes…2,516 voters. We should expect north of 150 million votes; you want to draw meaning from that sample?

I’m imagining a room of 10,000 voters. We want to know who they support. So a modern pollster runs up to us and says they’ve spoken to 4 people and have determined that it’s a dead heat.

Hey, they asked a man and a woman; a young person and an old person. One was black and one was white. And each of them was standing in a different corner of the room.

How could that poll possibly be wrong?

There is only one scientifically valid way to determine how those 10,000 people feel: have them vote, and then count those votes.

Any sample short of that is just guesswork. (Or more sinister; I don’t think polling accurately captures the opinions of everybody in the group, but it can certainly influence them).

That is not junk science, that is just science. You are never going to survey the whole population. You take a sample and extrapolate to the whole population. It isn’t going to be perfect, which is why you calculate a margin of error. Do you think that when researching a vaccine, they test it on everyone before deciding it is valid?
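For what it’s worth, the textbook margin of error can be sketched in a few lines. This assumes a simple random sample and a 95% confidence level, which real polls only approximate; the function name and its parameters are mine for illustration, not any pollster’s actual method. The sample size of 2,516 is the one from the national poll mentioned upthread.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a simple random sample of size n.

    p is the assumed share for one candidate (0.5 is the worst case),
    z is the normal critical value for the chosen confidence level.
    """
    return z * math.sqrt(p * (1 - p) / n)

moe = margin_of_error(2516)
print(f"n=2516: +/- {moe * 100:.1f} points")
```

Under those assumptions a 48/48 result is consistent with either candidate being a couple of points ahead, which is what the margin of error is meant to convey. It says nothing about whether the sample was representative in the first place.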

Polls do have the additional challenge that getting a representative sample is difficult, which can skew the results, but that is an execution problem, not a scientific one. If you understand what polls can and can’t show, they are a great source of information, certainly better than talking heads or counting lawn signs.

There are biological reasons why you can extrapolate one person’s vaccine response to other people; it’s not just a function of statistics, but a reflection of the homogeneity within a species (and even then we have to sample many people to account for the inherent diversity within a seemingly homogeneous group).

Here, there is no inherent reason why there should be consistency. Just because one person has a voting preference doesn’t mean that any other person has to share that belief. So it’s not the same thing: asking one person who they vote for isn’t an insight into some universal human vote decision system, and to argue that it can be used that way is pseudoscience (as evidenced by the fact that actual results are never what the pollsters say; they are either really close to reality or they have to come back with some explanation of under or over reporting; at that point, it’s guessing, hoping for luck, and then using spin to claim precision).

Polling itself is not junk science. It is a perfectly valid method that is simply getting harder and harder to do. Trump voters are angry and distrustful of anyone not named Trump, so getting accurate numbers for them is a lot harder than normal polling, which is also getting harder. In previous elections, when Trump was shown being significantly down, he called the polls fake and biased against him. They are neither, but his claims make his supporters even harder to reach.

What do you base that on? Because it uses statistics? Phrenologists, I’m sure, were really careful about obtaining accurate measurements of human skulls; it still didn’t mean that they could predict which people were criminals based on those observations.

So, please explain to me how a pollster speaking to somebody in my town is able to use that evidence to determine how I would vote?

Oh, they match my demographic? That might be correlated to my vote, but that’s about it. My vote remains my choice, regardless of those characteristics.

If the other voter with my demographic also matches my vote, that doesn’t mean my demographic determined that choice. And, so, it’s not something we can determine based on “sampling”.

And all of that is before we get into the ridiculously small sample sizes of these polls.

Let’s see, here’s a recent poll for Michigan, which in 2020 had about 3 and a half million votes cast.

(My bold)

I’m not a math guy, but I think that’s about .02%

Of course it doesn’t explain how YOU would vote. But if done well, it has a good chance of being fairly reliable in predicting how the population as a whole will vote.

I DETESTED stats when I had to take it in college, but as I understand it, ensuring a reliable and sufficient sample is critical. I’m curious as to what your experience and expertise informs you OUGHT TO BE a sufficient percentage sampled.

Pretty sure sampling is relied upon in countless scientific and commercial endeavors. Not sure why you believe elections are uniquely inappropriate uses.

The only sincere conclusion from these samples should be that they are inconclusive, not that they are providing statistically reliable predictors of the outcome.

Look, I’ll admit to being probably too dogmatic in my opinion. But I’m frustrated by the significance that is given to a mere sampling, when it is consistently shown to be off from the actual outcomes. I hold little faith in the process.

There are no biological reasons, true, but there are cultural ones. Anthropology and sociology and the other social sciences explain why groups might vote the same way, and how pollsters might get a representative sample within a large, diverse population.

A little more difficult to do when your task is to reach 1100 people by your 6 pm deadline, but quite feasible in general.

Actually, no, there isn’t. Individuals vary and subgroup responses vary: vaccines, cancer treatments, even responses to exercise plans. Getting large and representative enough samples matters, and understanding the possible nature of the various sorts of errors matters.

A couple of items to clarify from other threads, which also overlap with science and medical research: pooling together studies whose findings are less significant because their n is too small. You were incredulous about that, I believe. In clinical research that is called meta-analysis. There are possible problems with these studies, such as publication bias and making sure the studies all meet appropriate predefined criteria. But it is a very valid scientific technique.

The main argument of this thread centers around the nature of the meta analysis done by the aggregators. The criticism leveled at them is that they include studies that do not meet what should be reasonable predefined criteria, justifying that by decreasing the impact they have. That action has NOT significantly changed results, it has not successfully skewed them, but it adds nothing either.

And better to repeat this here than repetitively in all the other threads: in addition to the margin of error issue that you are concerned about, there is the item of systemic error. As a group the polling houses likely fail to exactly model the actual voting population. The average (just average, sometimes more) is for heavily polled swing states to have final poll predictions off by 3.5% one way or the other. A state with a final week poll of 50/50 tied can reasonably be expected to be anywhere from 54/46 to 46/54. And those systemic errors are, well, systemic. They run in the same direction at least, and at similar magnitude, in most of the heavily polled states.
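A rough simulation can show why systemic error is a different animal from margin of error. This is only a sketch under loud assumptions: the true share is 50%, every poll draws from a pool skewed by a hypothetical 3.5 points on one candidate’s share, and there are 20 polls of 1,000 respondents each. None of those figures describe real polling houses, and the function name is made up for the example.

```python
import random

def simulate_poll_average(true_share, shared_bias, n_polls, n_respondents, rng):
    """Average the results of several polls that all share one systematic bias."""
    # Every poll samples from the same skewed pool (hypothetical bias).
    polled_share = true_share + shared_bias
    results = []
    for _ in range(n_polls):
        hits = sum(rng.random() < polled_share for _ in range(n_respondents))
        results.append(hits / n_respondents)
    return sum(results) / len(results)

rng = random.Random(0)  # fixed seed so the run is repeatable
avg = simulate_poll_average(0.50, 0.035, 20, 1000, rng)
print(f"true share: 50.0%, average of 20 polls: {avg * 100:.1f}%")
```

Averaging many polls shrinks the random sampling noise, but the average settles near the biased value rather than the true one. That is why aggregation helps with margin of error and does nothing for an error that all the pollsters share.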

So bottom line is that you are right: it is foolish to obsess over polls right now, foolish to panic or celebrate over a half point move one way or the other, and reasonable to conclude that we can’t know much about the outcome based on polling right now.

The largest poll aggregators with the largest readerships are kind of in lockstep with each other now regarding the 2024 general election.

However, there are well-reasoned “minority reports” out there (nod to Philip K. Dick) that IMHO are just as worthy of attention as the FiveThirtyEights, Nate Silvers, and NYTs.

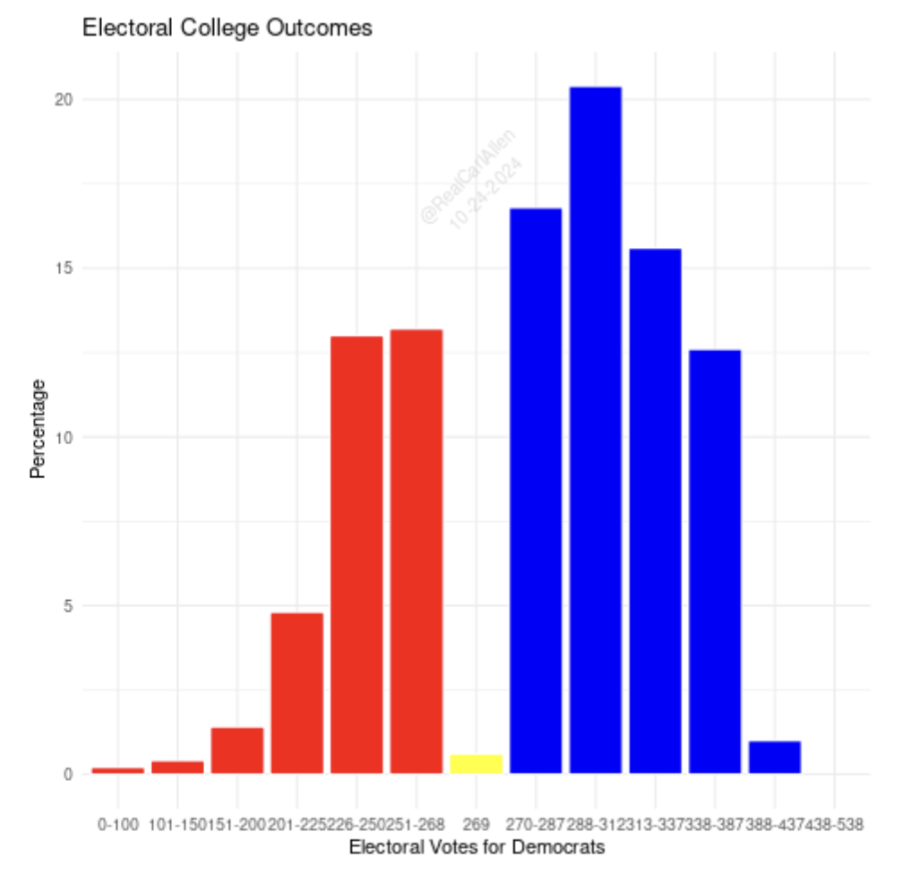

Here’s one I’m seeing for the first time today — ironically, discovered via the Silver Bulletin’s comments section. From last Wednesday, October 24th:

The Headline

Harris remains a medium favorite, 65% to win the election.

The reason:

Her advantage in the blue wall states is not “comfortable” but it is real.

…

There’s some probability in a close election that they don’t go together, despite being highly correlated.

I’m higher on Democrats in the blue wall, but my forecast is pretty close to FiveThirtyEight in the other four swing states.

As I outlined in my last forecast, if Democrats lose one or two of the three blue wall states, Georgia and/or North Carolina are viable backups. I don’t think a narrow loss in any of the blue wall states is “game over” - though it would make for a stressful week.

This is the crux of it. (Disclosure: I’ve been a practicing market researcher for 35 years.)

Polls worked pretty well, until about 10-15 years ago, back when they all essentially used the same sampling technique (random dialing, to land line phones). But, polls are only as good as being able to actually generate a representative sample, and that’s being strongly impacted by:

- People getting rid of their land lines – only about 1 in 4 American adults now even have a land-line phone.

- People screening and blocking incoming calls from numbers they don’t recognize.

- Portability of cell phone numbers, even if you move states, means that many people have a cell phone number which has absolutely nothing to do with the state or region in which they actually live.

- Attempts to move to online polling techniques are being made, as I understand it, but they, too, suffer from being skewed towards certain demographic groups, and are difficult to use to generate a truly representative sample. They also suffer from the same basic problem that telephone polling has now: non-response bias. It’s still unclear to me if online political polling is able to generate consistent results, and effectively capture all subgroups within the population.

- It is entirely possible that a certain percentage of people are willing to answer polls, but unwilling to state their true preference (the “shy Trump voter”).

Percent of the overall population being surveyed isn’t relevant in determining the statistical power / predictive ability of a survey (a poll is a type of survey, after all); statistical power increases with sample size itself. A sample of 700-ish respondents honestly isn’t bad at all; what you lose, compared to a sample of 3000 or so, is some of the statistical power, i.e., your “margin of error” is a couple of percentage points larger.
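To put rough numbers on that, here is a minimal sketch using the standard margin of error formula with the finite population correction. It assumes a simple random sample and a 95% confidence level, which real polls only approximate; the function name is mine. The population figures are the ones quoted earlier in the thread (about 3.5 million for Michigan, about 150 million nationally).

```python
import math

def moe_with_fpc(n, population, p=0.5, z=1.96):
    """95% margin of error for sample size n, with finite population correction."""
    base = z * math.sqrt(p * (1 - p) / n)
    # The correction only matters when n is a large fraction of the population.
    fpc = math.sqrt((population - n) / (population - 1))
    return base * fpc

for n in (700, 3000):
    for population in (3_500_000, 150_000_000):
        moe = moe_with_fpc(n, population)
        print(f"n={n:>5}, population={population:>11,}: +/- {moe * 100:.2f} points")
```

Under these assumptions the margin for 700 respondents is roughly 3.7 points and for 3,000 roughly 1.8 points, and it barely moves whether the population is 3.5 million or 150 million. The sample size drives the result; the percentage of the population sampled does not.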

But, the gist of all of this, in the mind of this particular professional market researcher, is that, yeah, going back to the title of the OP, political polling in the U.S. is kinda broken, and it’s hard for me, who understands how it works, to put much faith in (or get too excited or worried about) the results of polls.

From the Comments section of the Carl Allen Substack I linked in my previous post. This is something I’ve wondered about, as well:

Sarah Blanchfield

[Oct 24]

Do any of the scenarios assume a certain population of registered republicans voting democrats? This appears to be the first election where a large contingent of republicans are publicly endorsing Harris and encouraging other republicans to vote for Harris.

Forest

[Oct 25]

This is what drives me crazy. We have real data that shows a shift in party coalitions, with ancestral Dem non-college whites switching in '16 and ancestral R suburban college whites shifting between '16-'24. And yet ALL the early vote analysis I see assumes that party registration equals vote.

I get they’re working with what they have, but to me what they have is sufficiently unclear to make it untrustworthy.

And all that, true though it is, ignores an even larger problem - identifying who from your sample is likely to actually go out and vote. In a nation where barely half of eligible voters exercise their right to vote, bringing your voters to the polls matters as much or more as convincing them to support you in the first place. As polarized as opinions are, it’s easier to convince someone who already supports you to get off their butt and vote than it is to persuade someone who was going to vote for the other guy to change their mind. Now in the US, with its wonky voter registration where voting age citizens aren’t registered more or less automatically, using a registered voter screen helps with polling to some extent, and of course all the polls ask people to self-report as to whether they plan to vote, but that doesn’t prevent the likelihood that the actual vote might deviate significantly from an accurate opinion poll based on relatively small differences in turnout.
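The turnout point is easy to see with a little arithmetic. The numbers here are entirely hypothetical, chosen only to illustrate the effect: opinion is split exactly 50/50, but one side turns out at 62% and the other at 58%. The function name is mine.

```python
def vote_share(support_a, turnout_a, turnout_b):
    """Share of votes cast for candidate A, given support and each side's turnout."""
    votes_a = support_a * turnout_a
    votes_b = (1 - support_a) * turnout_b
    return votes_a / (votes_a + votes_b)

# Hypothetical: a perfectly accurate 50/50 opinion poll, unequal turnout.
share = vote_share(0.50, 0.62, 0.58)
print(f"A: {share * 100:.1f}%, B: {(1 - share) * 100:.1f}%")
```

With those made-up inputs, a dead-even electorate produces a result of about 51.7 to 48.3, a gap of more than three points that no opinion poll, however accurate, would have shown.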

Of note, the Republicans at a national level have almost no ground game (door-knockers, rides to polls, etc) compared to the past, due largely to Trump’s co-opting the national GOP for his personal benefit wrt legal expenses and similar. I remain hopeful that this might lead to a D turnout advantage that will result in the current polls being significantly off, though obviously that is merely a hope at this point.

To that end, some pollsters survey “registered voters,” while others survey “likely voters” – based, I would guess, on an introductory question along the lines of “how likely are you to vote in the upcoming election?” (probably with a five-point scale, and only continuing in the poll with those who pick a response like “definitely will vote” and “probably will vote”).

Obviously, those two approaches will include slightly different sets of people, and I suspect that there isn’t clear standardization on how to identify “likely voters,” either. And, either approach is still not going to give you exactly who will wind up going to the polls on election day.

Michigan, where Trump WON in 2016 against an objectively more qualified candidate, and lost by a whisker in 2020 even though he had mismanaged the country’s pandemic response? Where we know for a fact that their large Muslim population is angry at the Biden/Harris administration for their response to the Gaza war? Why are you leaving that stuff out? You have two actual elections’ worth of evidence that Trump has enough (and remarkably stubborn) support in Michigan to win it. The polls are merely confirming what two elections have shown. And somehow that can’t be true?

Denying the polls is wishful thinking and fantasy. Michigan’s a tossup and will be decided by the slimmest of margins. The polls are right.

Polling is not broken. It works, and you’ll see that on November 5.

Denying reality seems to have taken a firm grip on some people when it comes to the idea that Michigan will almost certainly go to DJT. I have mentioned several times in the last few days how Muslims are livid and feel betrayed by Biden/Harris and the loss of their votes will almost certainly cost Harris the state.

Some of those responding to me have taken it to mean I think they will all vote for Trump which is not what I think will happen. They are far more likely to sit out the election or vote 3rd party IMO. That is still going to throw Michigan to the GOP.