From what I’ve read, facial recognition technology tends to misidentify females and non-whites more often than others. Why would this be so? I don’t know how it all works, but I thought it was based on measurements. What would gender or skin color have to do with it? Fight my ignorance, please.

From what I’ve read, the tech is “taught” by feeding it known examples. Once it learns that this face is a white male, it looks for other faces with similar features. That means it’s only as good as its database.

As an extreme example, say that a company feeds it all the faces of its employees. But those employees are 73% male and 69% white. The number of examples of female Hispanics will be low and therefore there is less information to extrapolate from.
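To put rough numbers on that, here is a back-of-the-envelope sketch. The headcount of 1,000 is made up, and it assumes gender and race are independent in the workforce, which the example above does not say. Only the 73% and 69% figures come from the post.

```python
# Hypothetical headcount; only the two percentages come from the example above.
employees = 1000
share_male = 0.73
share_white = 0.69

def expected_examples(total, share_a, share_b):
    """Expected headcount for a group, ASSUMING the two traits are independent."""
    return round(total * share_a * share_b)

white_men = expected_examples(employees, share_male, share_white)
nonwhite_women = expected_examples(employees, 1 - share_male, 1 - share_white)

print(white_men, nonwhite_women)  # 504 84
```

Under those assumptions the system sees about six white male faces for every non-white female face, and Hispanic women would be only a fraction of that smaller group.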

**Facial-Recognition Software Might Have a Racial Bias Problem**

This article is very informative.

That’s a 2011 study - no brief period of time, as far as technology goes. You’d think that the developers would be well aware of the shortcomings and move to correct them. Accuracy has to be the prime selling point for this software. The training photos should be as varied as possible. How difficult could that be?

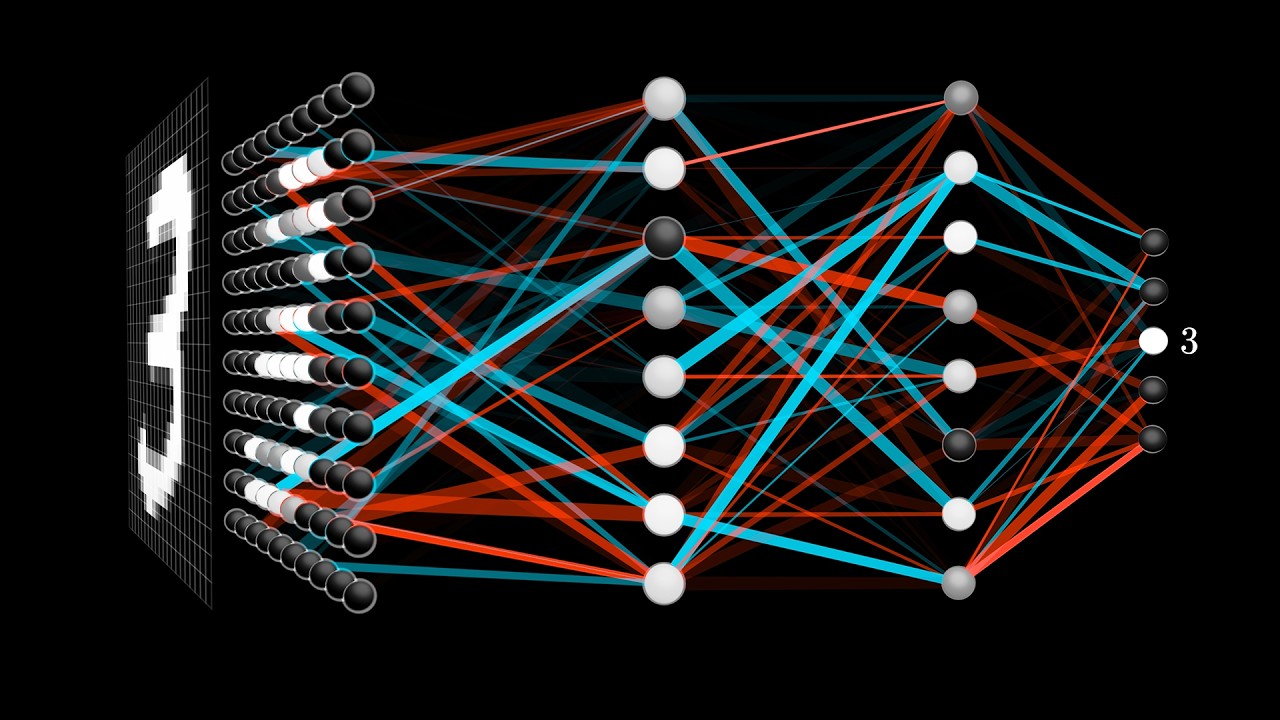

It is all about the training set and how neural networks work.

Really, it is a form of over- or undertraining. The network detects patterns in a way that is surprisingly human, even when the patterns it matches have nothing to do with any intrinsic truth.

Here is a good site that will demonstrate how “fit” happens in a model that is easy to visualize.

And this video covers how this happens.

But it isn’t too different from how we humans commit cognitive errors, or attribution errors, like deciding that Prius drivers or bike riders or… fit some category X that we assign to them.

The best takeaway is how poorly those models often fit reality, whether it is a machine or a human making the judgment.

I just did a Google image search for “female”, and the top 10 pictures and beyond were of white females. Maybe around the 15th or so, a female looked a little bit Hispanic :)

Why is it a surprise, then, that face recognition doesn’t work for darker faces?

It’s not necessarily even a malfunction. Suppose that I’m trying to point out one person in a crowded room, to another person. If most of the people in the room are white, then I’ll have to go into more detail to identify the person: Something like “that tall guy with white hair and the big nose”. But if, in that room full of whites, there is only one black man, then I can identify him as “the black guy”. I don’t need to go into as much detail about the shape of his nose or the color of his hair, or whatever.

Now suppose that it’s a much larger room, containing 10,000 white folks and 1,000 black folks. Now, I’ll need to go into further detail for someone of any race. But I’ll need more detail for the whites than for the blacks. Or, to put it another way, if my program picks up on fewer details for the black folks, that’s acceptable.

Problems come when a recognizer is working with a population that’s very different from the one it was trained on. If you take that same program that works fine for a population of 10,000 whites and 1,000 blacks, and put it to work in a population of 11,000 whites instead, it’ll probably mostly do OK. But if you put it in a population of 11,000 blacks, then what was sufficient detail before isn’t any more. “That black guy over there… I mean, the tall one. No, not that tall one, the tall one with the shaved head. Uh, I guess there are a bunch of tall black guys with shaved heads, here… It’s, um, that one. What do you mean you don’t understand which one I mean?”
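Here is a toy sketch of that effect, nothing like a real recognizer. Everything in it is made up for illustration: the group labels, the three traits (each a value from 0 to 9), and the rule that the describer only bothers with one trait for the smaller group.

```python
from collections import Counter
from itertools import product

# Toy person: (group, height, hair, nose). All names and values are hypothetical.
def describe(person):
    group, height, hair, nose = person
    if group == "minority":
        return (group, height)           # coarse description: enough in the training mix
    return (group, height, hair, nose)   # detailed description for the crowded group

def ambiguous_fraction(population):
    """Share of people whose description also matches somebody else."""
    counts = Counter(describe(p) for p in population)
    return sum(n for n in counts.values() if n > 1) / len(population)

traits = list(product(range(10), repeat=3))  # 1,000 distinct trait combinations

# Mix like the one the describer was tuned for: 1,000 majority, 10 minority.
training_like = [("majority", *t) for t in traits]
training_like += [("minority", h, h, h) for h in range(10)]

# Same describer, but now everyone belongs to the group it describes coarsely.
all_minority = [("minority", *t) for t in traits]

print(ambiguous_fraction(training_like))  # 0.0
print(ambiguous_fraction(all_minority))   # 1.0
```

In the mixed room every description picks out exactly one person. In the second room every description matches 100 people, even though those people differ from each other just as much as the majority group did.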

It’s a well documented problem that most university research pools are drawn from whatever random university students happen to be around at the time.