Grrr. Which is due to incompetent OS design. My old Sun workstation ran for a good five years with lots of updates and new software, and had to be rebooted exactly once during that period.

And it’s not just software that has these problems, but (potentially) hardware as well.

Most processors have a component called a cache, which is a small but fast internal memory. It speeds up accesses to the relatively slow main memory. A cache is divided up into lines, each of which holds a copy of a small block of main memory along with a tag recording which block that is.
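Here is a minimal Python sketch of that idea. It assumes a direct-mapped cache (real CPUs typically use set-associative caches) and made-up sizes; the `DirectMappedCache` class and its parameters are hypothetical, for illustration only. Each line remembers a tag saying which block of main memory it currently holds, so the cache can tell a hit from a miss.

```python
from dataclasses import dataclass
from typing import Optional

LINE_SIZE = 64  # bytes per line (a common size, assumed here)
NUM_LINES = 8   # deliberately tiny so the example is easy to trace


@dataclass
class CacheLine:
    valid: bool = False
    tag: Optional[int] = None  # which block of main memory this line holds


class DirectMappedCache:
    """Hypothetical direct-mapped cache: each memory block maps to exactly one line."""

    def __init__(self, num_lines: int = NUM_LINES, line_size: int = LINE_SIZE):
        self.num_lines = num_lines
        self.line_size = line_size
        self.lines = [CacheLine() for _ in range(num_lines)]
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill the line and return False."""
        block = address // self.line_size  # which memory block holds this byte
        index = block % self.num_lines     # the only line that block can live in
        tag = block // self.num_lines      # tells apart blocks sharing that line
        line = self.lines[index]
        if line.valid and line.tag == tag:
            self.hits += 1
            return True
        # Miss: fetch the block from (slow) main memory and record where it came from.
        line.valid = True
        line.tag = tag
        self.misses += 1
        return False


cache = DirectMappedCache()
print(cache.access(0))    # False: line 0 is empty
print(cache.access(8))    # True: same 64-byte block as address 0
print(cache.access(512))  # False: also maps to line 0, evicting block 0
print(cache.access(0))    # False: block 0 was evicted
```

Addresses 512 bytes apart map to the same line in this toy, so they keep evicting each other; that kind of conflict is what set-associative designs reduce.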

One of our products at work had a bug where, under rare conditions, a cache line would be permanently lost. Something would cause it to lock the cache line but never release it. One by one, the cache lines would disappear after running for some weeks. It was a rare bug in that it took trillions of accesses to trigger, but trillions isn’t that much for a computer. And the performance would just slowly degrade over this time until it got to be quite bad.

Cold-start the chip and the cache would be good as new. A warm restart wouldn’t do it. Eventually a workaround was found that avoided the problematic case. These kinds of bugs are pretty rare in hardware compared to software, but definitely not impossible.
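To make the degradation concrete, here is a toy simulation, not a model of the actual chip: a small fully associative LRU cache where a hypothetical `leak_line()` call permanently removes one line, standing in for a line that gets locked and never released. `LeakyCache` and `hit_rate` are illustrative names.

```python
from collections import OrderedDict


class LeakyCache:
    """Toy fully associative LRU cache whose lines can be lost for good."""

    def __init__(self, num_lines: int):
        self.usable = num_lines     # lines still available for normal use
        self.lines = OrderedDict()  # cached block numbers, least recently used first

    def leak_line(self) -> None:
        # Hypothetical stand-in for the bug: a line is locked and never released,
        # so it stays unusable until a cold start (which this toy does not model).
        if self.usable > 0:
            self.usable -= 1
            while len(self.lines) > self.usable:
                self.lines.popitem(last=False)

    def access(self, block: int) -> bool:
        if block in self.lines:
            self.lines.move_to_end(block)  # mark as most recently used
            return True
        if self.usable == 0:
            return False                   # nothing left to cache into
        if len(self.lines) >= self.usable:
            self.lines.popitem(last=False)  # evict the least recently used block
        self.lines[block] = None
        return False


def hit_rate(cache: LeakyCache, working_set: int, rounds: int = 10) -> float:
    """Cycle through `working_set` blocks `rounds` times and return the hit ratio."""
    hits = total = 0
    for _ in range(rounds):
        for block in range(working_set):
            hits += cache.access(block)
            total += 1
    return hits / total


c = LeakyCache(8)
print(hit_rate(c, 6))  # 0.9: only the first pass misses
c.leak_line()
c.leak_line()
print(hit_rate(c, 6))  # 1.0: six blocks still fit in six lines
c.leak_line()
print(hit_rate(c, 6))  # 0.0: five lines, six blocks, LRU thrashes
```

In this toy, losing two of eight lines costs nothing because the working set still fits, while losing a third makes the cyclic access pattern miss on every access. A real workload would likely degrade more gradually, but the direction is the same.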

No one else has been wrong, but I’d like to throw in a bit more:

Any machine that has been running for a long time will encounter errors of some kind. Software generally isn’t perfect at handling every single possible state. And, even if it were, external issues like solar radiation, overheating components, a bit of humidity, and so on could actually cause problems.

While in theory you could figure out exactly what the problem is and fix it, this is often impractical, especially since the device is already in a bad state. Restarting can fix it quickly (and cheaply).

This same logic is used when people’s computers get so messed up that they just wind up reinstalling the OS or restoring from backup. At some point, that becomes less work than figuring out the exact problem.

I also note that both of those can actually be diagnostic: they eliminate the software as part of the problem. If the problem still occurs after restarting a device with no storage, then you know the device itself is defective. And if a problem persists after a reinstall of Windows, it’s quite likely to be a hardware problem.

In one case I worked on, memory errors were correlated with the altitude of the server. We build high-reliability servers, and we had installed EPROMs that captured various error conditions and that were programmed, at installation, with location and altitude. I could query a database and get all this information.

It’s called traceability. It just doesn’t work for computers. If you have access to the right databases, the bar code on your bottle of wine can lead you to the location of the grapes that went into that wine, down to a few meters, as well as information on the cork and the barrels where the wine was aged. It was depressing that a twenty-buck bottle of wine had better traceability than a million-dollar server.

This was a while ago; the traceability of wine has probably improved since.

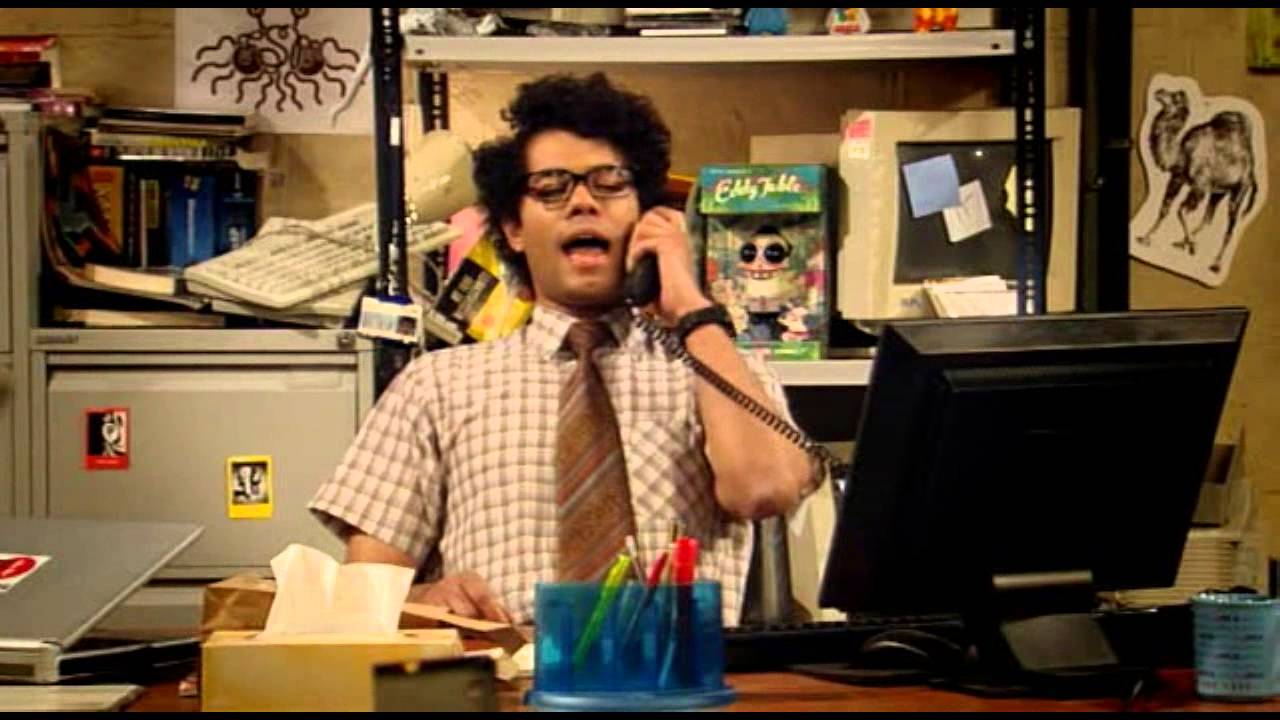

My favorite version of that is, of course, Moss’ take on it.

Heh, a hard reboot won’t always do it. I’ve seen several IPMI systems that will become unavailable and potentially drag down the system until you power the system down and yank the power cable for a few minutes. Anything less, and the IPMI system will still be at best useless.

But aside from that kind of issue, if I’m working on a Linux or BSD box and it doesn’t need to load a new kernel, I see a reboot as a sign of defeat. I wasn’t smart enough to figure out what process to stop/start, and maybe what file to adjust (mayhaps delete). Sometimes it’s easier and more prudent to reboot it, even if it does make me sad.

Do I feel the same about Windows? No, you update an app or look at it funny, and it’ll start nagging you to reboot. It’s just part of Windows town. Doesn’t make it bad, just a little tiresome.

And yeah, rebooting is a great way to clear OS-level memory leaks. It also clears memory leaks on devices where you don’t have fine-grained control, such as the ability to kill off or restart particular processes.

If I can offer one bit of advice on the reboots: try to use your device’s clean reboot or power down whenever possible. Even though most filesystems these days can handle an unclean reboot, the underlying programs still sometimes have a really hard time of it.

It ain’t a cold boot until the PSU capacitors have discharged. Ideally, there are LEDs you can watch. Otherwise, listen for the coil whine.

Well, yes. But on a lot of systems (at least since ATX on PCs, IIRC), that doesn’t happen until you pull the power cable. I’ve had several customers not believe me on this point; they just had someone press the power button after shutting the machine down. Then they had to schedule another reboot later and coordinate it with their third-party DC techs to pull the power cable and plug it back in after a few minutes.

Indeed. I’ve been working from home, but all my machines are at work. And the #1 most useful piece of equipment for me has been the network power strip. Nothing beats shutting off the power for a minute when all else fails.

I considered getting a second network power strip to reboot the first one in case that failed too, but so far it’s been reliable. Sadly, I can’t just plug the power strip into itself.

Hehehe, the hosting company I worked for had automatically installed those for the colocated customers a couple of years before I moved on to greener pastures. That significantly increased my amount of time in the chair on the overnight shifts when I was soup to nuts as far as support staff went. I didn’t even realize how much time I wasted troubleshooting boxes with me reading output over the phone where the answer was always going to be “kick it over” until we set that up. After that, I probably gained 10 lbs just because I wasn’t walking as much.

POST (Power-On Self-Test) runs when a computer is powered back up after being shut down. The hardware tests are helpful.

Windows computers can also run chkdsk before starting, for example when a volume was flagged as dirty after an unclean shutdown. It will find bad sectors on the drive and fix minor file system errors, which can make a difference in how well Windows performs.

One of the promises of microkernel architecture is that even low-level parts of the system can be restarted independently of the rest of the system. When the kernel is monolithic and the hardware drivers are embedded in the kernel, you have to reboot the entire system. If hardware drivers are isolated, you can restart a driver without disrupting the entire system. Something like VxWorks is used for highly reliable embedded systems because it has higher levels of modularity and isolation than Linux or Windows.
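A toy user-space sketch of the restart-only-what-failed idea, assuming each component runs as an ordinary OS process. The "driver" is a hypothetical child program that crashes on its first two starts; `run_driver` and `supervise` are illustrative names, not any real OS interface.

```python
import subprocess
import sys

# Hypothetical "driver": crashes (exit status 1) on attempts 0 and 1, then exits cleanly.
# The attempt number is passed as the first command-line argument.
DRIVER_CODE = "import sys; sys.exit(1 if int(sys.argv[1]) < 2 else 0)"


def run_driver(attempt: int) -> int:
    """Run the driver in its own isolated process and return its exit status."""
    result = subprocess.run([sys.executable, "-c", DRIVER_CODE, str(attempt)])
    return result.returncode


def supervise(max_restarts: int = 5) -> list[int]:
    """Restart only the failed component; the supervisor itself keeps running."""
    statuses = []
    for attempt in range(max_restarts + 1):
        status = run_driver(attempt)
        statuses.append(status)
        if status == 0:
            break  # the component came back up cleanly
    return statuses


print(supervise())  # [1, 1, 0]: two crashes, two restarts, then a clean run
```

Because the crash happens in a separate process, the supervisor (standing in for the rest of the system) never goes down; capping the restarts keeps a permanently broken component from looping forever.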

Sometimes I miss VMS, an OS that allowed clustered VAX nodes to be upgraded one by one, with running workloads being shifted from node to node as they were all upgraded. Then I remember DCL, and I stop being nostalgic. But the fact is there is no highly reliable clustered OS like VMS anymore, although I guess containerization and Kubernetes clouds are starting to fill that role.
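The node-by-node upgrade can be sketched roughly as follows, assuming workloads can simply be moved between nodes. This only shows the ordering of drain, upgrade, and return, not the clustering machinery that made it work on VMS; the `Node` class and `rolling_upgrade` function are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    version: int
    workloads: list[str] = field(default_factory=list)
    online: bool = True


def rolling_upgrade(nodes: list[Node], new_version: int) -> None:
    """Upgrade nodes one at a time, moving work off each node first."""
    for node in nodes:
        others = [n for n in nodes if n is not node and n.online]
        if not others:
            raise RuntimeError("need at least two nodes to upgrade without downtime")
        # Drain: hand each workload to the least-loaded node that stays up.
        while node.workloads:
            target = min(others, key=lambda n: len(n.workloads))
            target.workloads.append(node.workloads.pop())
        node.online = False         # the node could now be rebooted safely
        node.version = new_version  # stand-in for the actual upgrade
        node.online = True


cluster = [Node("a", 1, ["w1", "w2"]), Node("b", 1, ["w3"]), Node("c", 1)]
rolling_upgrade(cluster, 2)
print([(n.name, n.version) for n in cluster])  # [('a', 2), ('b', 2), ('c', 2)]
```

At every step at least one other node is up to absorb the drained work, which is why the sketch refuses to run on a single-node "cluster".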

On some motherboards you can even see a lit LED on the board itself even with the system fully powered down, as long as the PSU is plugged into a power source.

With recent versions of Windows, it’s actually the reverse.

Because a full Windows boot-up takes a long time (compared to an iPad, a Mac, a PlayStation, etc.), and because in many cases people just want to turn off the computer to save power, Microsoft added a “Fast Startup” mode which is active by default. In this mode, the “Shut Down” command is similar to what used to be called “Hibernate”: much of the software state is saved to disk, and it gets restored verbatim when you turn it back on. The “Restart” command, however, does force all software components to be reloaded from scratch.

Yep, when I last had my hands in server cases, very few of their boards shut off all of their LEDs without being unplugged for at least a few seconds.