Heh, yeah, the ambiguity bugged me too. I tried to be consistent with lowercase “flops” vs “flops/sec”, and the singular “flop” just sounds… wrong…

I propose a new SI unit, “aishenanigans/sec”.

Something I briefly touched on, but probably didn’t explain well enough (because I don’t know all the details well enough myself), is that AI training takes a considerable amount of RAM (hundreds of gigabytes or more). Consoles don’t have that, so you end up having to virtualize it across a bunch of consoles (with very high network latency) and/or swap it to disk (also with high latency).
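For a rough sense of where "hundreds of gigabytes" comes from, here is a back-of-the-envelope sketch. The 16-bytes-per-parameter figure is my assumption (a common rule of thumb for mixed-precision training with the Adam optimizer), the model sizes are just illustrative, and activations are ignored entirely, so treat the output as a floor rather than a real measurement.

```python
# Rough training-memory estimate. Assumption (not from the post): mixed-precision
# training with the Adam optimizer, at about 16 bytes per parameter:
#   2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
#   + 4 + 4 (Adam's two moment estimates). Activations are ignored.
BYTES_PER_PARAM = 2 + 2 + 4 + 4 + 4

CONSOLE_RAM_GB = 16  # a PS5 ships with 16 GB of shared memory


def training_memory_gb(num_params: float) -> float:
    """Approximate memory for weights, gradients, and optimizer state, in GB."""
    return num_params * BYTES_PER_PARAM / 1e9


# Illustrative model sizes (hypothetical, in billions of parameters).
for billions in (1, 7, 70):
    need = training_memory_gb(billions * 1e9)
    print(f"{billions}B params: ~{need:.0f} GB, "
          f"roughly {need / CONSOLE_RAM_GB:.0f} consoles' worth of RAM")
```

Even under these generous assumptions, a mid-sized model's training state already outgrows a single console several times over, before counting activations or the data itself.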

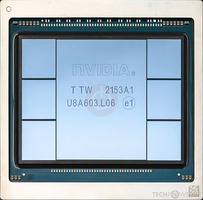

AI training, by current methods, isn’t a workload you can divvy up piecemeal across a bunch of independent small processors, unlike (say) crypto mining, SETI@home chunks, or rendering movie frames. From what I understand, the entire model-in-training has to reside in memory during training so that its weights can be adjusted in real time by any of the nodes crunching numbers. That requirement gave rise to fast interconnects like NVLink, with a bandwidth of some 1.8 TB/sec, which is much, much faster than a typical internet connection. Even that isn’t fast enough, and the whole-wafer prototype chips can get up to some 12.5 petabytes/sec.

In other words, not only do you need powerful GPUs, you also need a crapton of memory, and very fast connections between all of them. Consumer devices don’t have that, even if they can contribute compute.
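To put rough numbers on that interconnect gap, here is a small sketch comparing how long it would take to ship one full set of gradients over a home connection versus NVLink. The model size (7B parameters, fp16 gradients) and the 100 Mbit/s upload speed are my own assumptions for illustration; the 1.8 TB/sec NVLink figure is the one quoted above. It also ignores latency and protocol overhead, which would only make the home link look worse.

```python
# Ideal time to move one full copy of the gradients over different links.
# Assumptions (mine, for illustration): a 7B-parameter model with fp16
# gradients (2 bytes each), and a 100 Mbit/s home upload link.
GRADIENT_BYTES = 7e9 * 2

LINKS_BYTES_PER_SEC = {
    "home upload (100 Mbit/s, assumed)": 100e6 / 8,  # bits/sec -> bytes/sec
    "NVLink (1.8 TB/s)": 1.8e12,
}


def transfer_seconds(num_bytes: float, bytes_per_sec: float) -> float:
    """Ideal transfer time, ignoring latency and protocol overhead."""
    return num_bytes / bytes_per_sec


for name, speed in LINKS_BYTES_PER_SEC.items():
    seconds = transfer_seconds(GRADIENT_BYTES, speed)
    print(f"{name}: {seconds:.3g} s per sync")
```

Training does this kind of exchange over and over, so a link that needs many minutes per sync leaves the GPU idle almost all the time, no matter how much compute it could otherwise contribute.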

> I just didn’t know if that excess computation capacity could hold any use for AI programs

It’s just not as easily parallelizable as other workloads right now, as far as I know. (But I’m not a computer scientist, just a web dev, so someone smarter will have to explain in more detail.)

That’d be great, but if that happens, it would probably be in the form of pooling dollars rather than PlayStations. There is too much overhead in trying to harness the moderate compute that consumer GPUs can put out. At AI scale, even the data-center-scale systems aren’t big and fast enough, and they want to build more of them, bigger and faster, powered by nuclear reactors. It’s a whole new generation (or several) of supercomputers.