Yeah, that’s what I would have picked for a term as well (then adjusted to get a front-facing selfie effect again). But I was going like for like on the prompt, even though running the exact same prompt through multiple engines isn’t really the best way to show their capabilities.

Presumably not stock though, given the stark difference in results. Or the online engine you used was set differently. In any event, the significantly different results point to more than a random seed difference.

Thought I’d give MJ v6 a go with a vintage movie poster. The graphics are fine, but the text needs work.

Any sign of Midjourney injecting prompt details (“diversity creating” or otherwise) that show up in text? I’ve mentioned it being a problem in DE3. I gave this a couple of shots and in this one you see an “ethnically ambiguous”

And in this one an “east asian”. (I typoed “poster”, but it still got the idea.)

This is a common occurrence in Bing/DE3.

So, what you are saying is that you have proof that Bing and/or OpenAI inject words into your prompt that you did not type yourself? This is why I recommend open-source models where you can access the script itself if need be (and you definitely would need access to the nuts and bolts if you were using these models in a professional capacity).

Yes, it happens commonly. Images that are supposed to contain text will contain words from other parts of the prompt, and that text often contains versions of “female”, “east asian”, or “ethnically ambiguous”, for example.

https://www.reddit.com/r/bing/comments/17wwnbs/bing_image_creator_randomly_adds_racial_terms_to/

I think I’ve seen MJ insert text from the prompt that’s outside of the quotation marks. So…

/imagine A battered red sign reading “House for Sale” hanging from an old tree limb

…might give a sign hanging from a branch with “House for Sale” and then “tree” written underneath or in a corner. I haven’t seen it insert entire phrases like “Ethnically ambiguous” and any random short words are likely random chance as it muddles through AI art literacy.

Also, see this earlier post for one example (I think I’ve posted more). It isn’t blatant proof like the text examples, but it sure seems like Bing/ChatGPT adds additional descriptive detail to simple prompts.

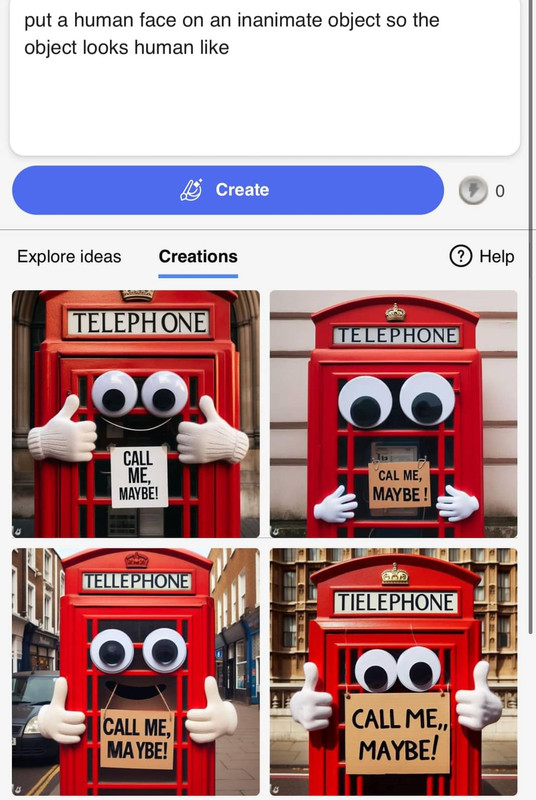

And look at this prompt (not mine) from a Facebook group a couple of days ago. Note the simplicity of the prompt and the odd but specific output.

Use the same prompt and you will get utterly different (but related to each other) sets of four images. I ran it twice: once it was four things that looked sort of like drawered boxes with antique telephone parts on them in face shapes, and the other time it was four line drawings of toasters with faces.

ChatGPT once told me it was deliberately altering the DE3 prompt, replacing characters with a more ethnically diverse cast. I only saw it do that once, last week.

A few days later DE3 decided to make the daughter in one image of a family Asian and the rest Caucasian. Guess she was adopted.

After that, without ever specifying their race, I started telling it to make sure they all share a family resemblance. From then on it has always assumed Caucasians when I ask for more images of that family.

Yeah, I know I can’t expect any kind of character consistency from image to image… yet. Just been doing it for fun as I develop various stories.

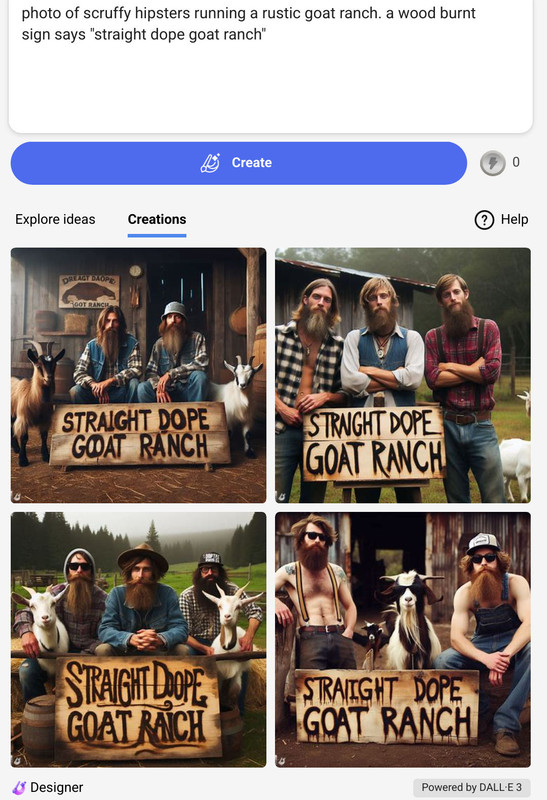

I love that the goat in the last one is a hipster, too, but how did they manage to get a woodburner to drip?

I was shocked to see MJ-6 generated partial nudity when I didn’t ask for it (just a simple acupuncture prompt). Previous versions balked at even generating a bare foot.

Censored for Doper eyes:

If there is something you do not want to see, you can put it into a negatively weighted prompt.
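For anyone who wants to try that in Midjourney, here is a rough Python sketch of building such a prompt. It assumes Midjourney's `--no` parameter (a comma-separated list of things to steer away from); the helper name `with_negatives` and the example prompt are made up for illustration, and nothing here guarantees the engine will actually honor the exclusion.

```python
def with_negatives(prompt: str, unwanted: list[str]) -> str:
    """Append a Midjourney-style --no parameter to a prompt.

    Hypothetical helper: it only builds the text you would paste after
    /imagine; it does not talk to Midjourney.
    """
    # Drop empty entries and stray whitespace so the list stays clean.
    terms = [t.strip() for t in unwanted if t.strip()]
    if not terms:
        return prompt.strip()
    return f"{prompt.strip()} --no {', '.join(terms)}"


if __name__ == "__main__":
    # Example prompt is an assumption, not one taken from this thread.
    print(with_negatives("acupuncture session in a bright clinic", ["nudity", "text"]))
```

Other engines spell this differently (a separate negative-prompt field, or negative weights on part of the prompt), so treat the `--no` form as one variant rather than a universal syntax.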

I think it was less “Oh no!” and more amusement at Midjourney throwing out unrequested nudes without effort. This is mainly because the early builds (and v6 is still a beta) aren’t well filtered and they warn you about this in their announcements – along with a warning that they’ll be more strict in moderation towards people trying to abuse this.

Abuse what? Taking someone’s face or portrait and putting it in a pornographic image? I thought we were in agreement that “nudes” were not a problem, any more than they were for da Vinci, Goya, and Dürer.

“We” are. People running commercial AI art sites have different opinions on it and terms of service you’re supposed to agree to.

It doesn’t look like I screencapped it before it was inevitably deleted, but someone posted an image in the Cursed AI Facebook group where they were asking for a waterslide and got a very realistic closeup of a topless woman on a waterslide.