I know that before a certain point, mirrors in games were just blurred or showed nothing. Making a mirror that reflects your character’s movements and appearance takes extra work (and an additional model, I think?), but I’d be curious what the earliest game with a good mirror was.

I don’t know if it was first, but I seem to recall Duke Nukem making a big deal about its mirrors.

I’m sure processing speed was the reason mirrors were ever blank screens in video games. It is quite trivial to add the basic effect. Video games might require some adaptation for their unique hardware, but as processing power increases they go through functional upgrades anyway.

I’m pretty sure that was just one level, though, not throughout the game. They faked it by having a second room on the other side of the mirror, with another Duke sprite that mimicked your movements. Dead Rising did a similar trick. The problem is, the extra room takes up space on the map, limiting how often you can employ it on a given level.

I don’t think any game has implemented full-on, realistic mirrors. Cyberpunk 2077 has mirrors that you need to literally turn on before they’ll reflect anything, and that’s clearly a canned interaction with a copy of your character model, not a true reflection.

I also remember the Duke Nukem mirror hype.

Ray tracing is extremely processor-intensive. It is the reason a single frame of a top-end CGI film can take hours to render.

https://www.scratchapixel.com/lessons/3d-basic-rendering/ray-tracing-overview

All computer graphics is a fake. Simulating planar mirrors by flipping the geometry across the plane is a very common technique, and doesn’t require taking up space on the map (though maybe some games implemented it that way).
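A minimal sketch of the geometry-flipping trick, assuming a mesh is just a list of 3D vertex tuples plus triangles given as vertex-index triples (hypothetical plain-Python data, not any particular engine’s format). Each vertex is reflected across the mirror plane with p' = p - 2((p - q)·n)n, where q is any point on the plane and n is its unit normal. A reflection also reverses winding order, so the sketch swaps two indices per triangle to keep faces pointing outward.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def reflect_point(p, plane_point, plane_normal):
    """Mirror p across the plane through plane_point with unit normal plane_normal."""
    # Signed distance from the plane, measured along the normal
    dist = dot([pi - qi for pi, qi in zip(p, plane_point)], plane_normal)
    return tuple(pi - 2 * dist * ni for pi, ni in zip(p, plane_normal))

def mirror_mesh(vertices, triangles, plane_point, plane_normal):
    """Return a mirrored copy of a triangle mesh."""
    mirrored = [reflect_point(v, plane_point, plane_normal) for v in vertices]
    # A reflection flips handedness, so reverse each triangle's winding order
    flipped = [(a, c, b) for a, b, c in triangles]
    return mirrored, flipped

# Usage: a mirror lying in the plane x = 0, facing +x
verts = [(2, 1, 3), (1, 0, 0), (1, 1, 0)]
tris = [(0, 1, 2)]
print(mirror_mesh(verts, tris, (0, 0, 0), (1, 0, 0)))
```

In a real renderer the mirrored copy would typically also be clipped to the mirror plane and drawn only inside the mirror’s outline (for example, by masking with a stencil buffer); this sketch leaves that part out.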

Even ray tracing, while more accurate and able to handle curved surfaces and more, is a total fake. It’s backwards: instead of light starting at the light source and bouncing around until it hits the camera, ray tracing shoots rays from the camera until they hit a light (or just a lit object).
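To make the “backwards” direction concrete, here’s a minimal sketch of the first step of a ray tracer: fire one ray from a pinhole camera through each pixel and find the nearest sphere it hits. The camera setup (eye at the origin looking down -z, 90° field of view) and the one-sphere scene are assumptions for illustration; a full tracer would go on to compute lighting at the hit point instead of just marking it.

```python
import math

def camera_ray(px, py, width, height, fov_deg=90.0):
    """Unit ray from a pinhole camera at the origin (looking down -z) through pixel (px, py)."""
    scale = math.tan(math.radians(fov_deg) / 2)
    aspect = width / height
    x = (2 * (px + 0.5) / width - 1) * aspect * scale
    y = (1 - 2 * (py + 0.5) / height) * scale
    length = math.sqrt(x * x + y * y + 1)
    return (0.0, 0.0, 0.0), (x / length, y / length, -1 / length)

def hit_sphere(origin, direction, center, radius):
    """Distance to the nearest intersection in front of the ray, or None on a miss."""
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))  # direction is unit length
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # nearer root first
        if t > 1e-9:
            return t
    return None

def render_ascii(width=21, height=11, center=(0.0, 0.0, -3.0), radius=1.0):
    """Mark pixels whose camera ray hits the sphere with '#', others with '.'."""
    rows = []
    for py in range(height):
        row = ""
        for px in range(width):
            origin, direction = camera_ray(px, py, width, height)
            hit = hit_sphere(origin, direction, center, radius)
            row += "#" if hit is not None else "."
        rows.append(row)
    return "\n".join(rows)

print(render_ascii())
```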

There are some techniques, such as path tracing or photon tracing, that are closer to reality. But they’re still very much approximations and leave out important effects like diffraction.

I can’t find an example, but I expect the first reflections were in 2D games where the primary sprite was just flipped vertically to show a reflection in water, ice, etc. Here’s a fairly early 3D example, though:

Most recent NVIDIA products can do real-time ray tracing. As a basic technique, it’s no longer that heavyweight. Even without dedicated ray tracing hardware, most GPUs are fast enough to do it to some degree.

Still, it needs to be used judiciously. While it’s possible to ray trace entire scenes, it’s generally more efficient to save the ray tracing for the shadows, reflections, refractions, etc. and use traditional techniques for the remaining parts.

No, it is still extremely processor-intensive; it’s just that we now have some very powerful processors.

There are scenes in Toy Story 4 that took up to 1,200 CPU hours per frame.

Clearly, I mean no longer that heavyweight compared to the state of the art. Even the weakest GPUs have multiple teraflops of math power.

Movie rendering is doing much more than baseline ray tracing. That will always take hundreds or thousands of processor hours per frame, because if it didn’t, they’d bump up the quality until it did.

What made the mirror in Duke Nukem memorable is the way it was placed. If I recall correctly, the first time you encountered one it was placed in a fairly dark area, so you would see movement ahead, shoot all your ammo at this thing that just wouldn’t die, and then, when you turned the light on, realize it was just your reflection.

I’m unclear what you find fake about ray tracing, or how you would propose doing it in a forward manner. Ray tracing is accurate because it works the way light does. If a ray stops as soon as it intersects a lit object, it’s not an intensive process at all. It becomes intensive when every object is reflective and adds to the complexity of the lighting, because the ray has to be traced further, until no more objects are encountered that further light an object. It is also based on solids modeling, and the most realistic imaging comes from the most complex models.

“Forward” ray tracing is simple, just excruciatingly slow. You set up light sources that emit photons. You also set up a camera with a piece of “film” and a lens system. You bounce the photons around the scene, reflecting, refracting, absorbing, etc. as needed. Almost all of these will be lost, but some tiny fraction will find their way into the camera, just as they do in real life. You record where they landed on the film and that corresponds to a bright spot.

No one does it this way, except as a joke. But it’s how the world works. Everything else is a fake (though often a good one).
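A toy version of the forward approach, assuming a 2D scene with a point light at the origin and a small camera aperture facing it at some distance (all numbers hypothetical). It only handles the direct trip from light to camera, with no bounces, but it already shows the problem: almost every photon misses. For this setup the exact hit fraction is atan(half_width / distance) / π, which the simulation should approach.

```python
import math
import random

def forward_trace(num_photons, aperture_width, distance, seed=0):
    """Emit photons from a point light at the origin in uniformly random 2D directions
    and count how many pass through an aperture centered at (distance, 0),
    perpendicular to the x-axis."""
    rng = random.Random(seed)
    half = aperture_width / 2
    hits = 0
    for _ in range(num_photons):
        angle = rng.uniform(0.0, 2 * math.pi)
        dx, dy = math.cos(angle), math.sin(angle)
        if dx <= 0:
            continue  # heading away from the camera: lost
        t = distance / dx  # how far the photon travels to reach x = distance
        if abs(t * dy) <= half:  # lands within the aperture
            hits += 1
    return hits

n = 200_000
hits = forward_trace(n, aperture_width=0.1, distance=10.0)
expected = math.atan(0.05 / 10.0) / math.pi
print(f"{hits} of {n} photons reached the camera ({hits / n:.5f}; exact {expected:.5f})")
```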

Tracing further is, by itself, not that expensive. What is expensive is that at each step, one must integrate across the entire scene visible to that point (except in the case of a perfect specular reflection).
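The perfect-specular exception is easy to see in code: a mirror sends an incoming direction d to exactly one outgoing direction, r = d - 2(d·n)n for a unit surface normal n, so each hit needs only one secondary ray and no integral. A minimal sketch:

```python
def reflect(d, n):
    """Perfect mirror reflection of direction d about unit normal n: r = d - 2 (d . n) n."""
    k = 2 * sum(di * ni for di, ni in zip(d, n))
    return tuple(di - k * ni for di, ni in zip(d, n))

# A ray heading down and to the right bounces off a floor whose normal points up
print(reflect((1, -1, 0), (0, 1, 0)))  # (1, 1, 0)
```

A rough or diffuse surface has no single outgoing direction like this, which is where the integral comes in.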

Consider a simple flat white ball surrounded by large colored lights. The surface of the ball will have some smooth color variation, depending on which light sources are visible to a given point, their distance, etc. Large, bright, nearby sources will have more influence than small, dim, distant ones.

Even though this is a simple case, already we have to perform a difficult integral. One way of doing this is to shoot out many rays in all directions, some of which will intersect the light sources, proportional to their angular extent. If you take enough samples, you’ll get an approximation of the integral.
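A minimal sketch of that sampling idea, with one simplification up front: it only measures how much of the sphere of directions a single round light covers (its solid-angle fraction), ignoring the cosine falloff and any blockers a real shading calculation would include. The light’s direction and angular radius are hypothetical. For a round light of angular radius a the exact fraction is (1 - cos a) / 2, so the estimate can be checked.

```python
import math
import random

def random_unit_vector(rng):
    """Uniformly distributed direction on the unit sphere."""
    z = rng.uniform(-1.0, 1.0)
    phi = rng.uniform(0.0, 2 * math.pi)
    r = math.sqrt(1.0 - z * z)
    return (r * math.cos(phi), r * math.sin(phi), z)

def light_fraction(light_dir, angular_radius, samples, seed=0):
    """Estimate the fraction of all directions that hit a round light (light_dir is unit length)."""
    rng = random.Random(seed)
    cos_limit = math.cos(angular_radius)
    hits = 0
    for _ in range(samples):
        d = random_unit_vector(rng)
        if sum(a * b for a, b in zip(d, light_dir)) >= cos_limit:
            hits += 1
    return hits / samples

def exact_fraction(angular_radius):
    # Solid angle of a cone, 2*pi*(1 - cos a), divided by the full sphere's 4*pi
    return (1 - math.cos(angular_radius)) / 2

est = light_fraction((0.0, 0.0, 1.0), 0.5, 100_000)
print(f"estimate {est:.4f}, exact {exact_fraction(0.5):.4f}")
```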

But that’s usually too much work, especially if there’s a chance that some of the secondary rays will themselves bounce around. If you shoot 100 new rays on each bounce, then you need 100 × 100 × 100 = 1,000,000 rays per pixel for three-level tracing (and three levels is not very deep).
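The arithmetic, assuming every hit spawns the same number of new rays: the deepest level alone needs branching^depth rays, and counting the intermediate levels adds a little more. The function names are just for illustration.

```python
def rays_at_deepest_level(branching, depth):
    """Rays needed at the last level when every hit spawns `branching` new rays."""
    return branching ** depth

def total_secondary_rays(branching, depth):
    """All secondary rays across every level: b + b^2 + ... + b^depth."""
    return sum(branching ** k for k in range(1, depth + 1))

print(rays_at_deepest_level(100, 3))  # 1000000
print(total_secondary_rays(100, 3))   # 1010100
```

Each extra level of depth multiplies the cost by another factor of 100, which is why full branching stops being practical so quickly.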

The usual fix is “Monte Carlo” sampling, which picks a random direction (biased by the reflection function) at each stage. You don’t actually need the full multiplication of rays, since after each bounce the influence becomes less. You end up needing just 100 rays total, with each one going down a random path.
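Here’s a minimal sketch of why one random path per sample can stand in for the whole tree of rays. The scene is a hypothetical “furnace”: every surface emits `emission` and reflects a fraction `albedo` of the light arriving at it, the same in every direction, so the exact answer is emission / (1 - albedo). Since direction doesn’t matter in this toy, the randomness is in when the path stops: after each bounce it survives with probability equal to the albedo (a standard trick called Russian roulette), which keeps the average correct without ever branching.

```python
import random

def trace_path(emission, albedo, rng):
    """Follow one random path, collecting emitted light at every bounce.
    albedo must be below 1, or the path never ends."""
    total = 0.0
    while True:
        total += emission
        if rng.random() >= albedo:  # absorbed: the path ends here
            return total

def render_pixel(emission, albedo, paths, seed=0):
    """Average many independent single-path estimates."""
    rng = random.Random(seed)
    return sum(trace_path(emission, albedo, rng) for _ in range(paths)) / paths

exact = 1.0 / (1.0 - 0.5)
print(render_pixel(1.0, 0.5, 100), render_pixel(1.0, 0.5, 100_000), exact)
```

The 100-path estimate wobbles around the exact value of 2.0 while the 100,000-path one lands close to it; that leftover noise at low sample counts is what denoisers are meant to clean up.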

100 rays is still typically too much, so for real-time work they just use a few rays, with some deep learning mumbo-jumbo to reduce the noise. It generally works pretty well, though.

The same basic idea applies to just about any interesting graphics feature: soft shadows, diffuse reflections, frosted glass, and so on. They’re all just integrals over a sphere (or hemisphere).
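As one concrete instance, a soft shadow is the fraction of an area light visible from a point. A minimal 2D sketch, assuming a hypothetical scene: a light spanning x in [-1, 1] at height 2, a blocker spanning x in [-0.25, 0.25] at height 1, and query points on the floor at height 0. It estimates visibility by sampling points on the light and checks the result against the exact overlap; distance and angle falloff are ignored.

```python
import random

LIGHT_X = (-1.0, 1.0)      # area light spans these x values at height 2 (hypothetical)
BLOCKER_X = (-0.25, 0.25)  # occluder spans these x values at height 1 (hypothetical)

def visible_fraction_mc(p, samples, seed=0):
    """Monte Carlo: fraction of random light points visible from floor point (p, 0)."""
    rng = random.Random(seed)
    visible = 0
    for _ in range(samples):
        l = rng.uniform(*LIGHT_X)
        crossing = (p + l) / 2  # where the segment from (p, 0) to (l, 2) crosses y = 1
        if not (BLOCKER_X[0] <= crossing <= BLOCKER_X[1]):
            visible += 1
    return visible / samples

def visible_fraction_exact(p):
    """Exact answer: blocked light points satisfy 2*a - p <= l <= 2*b - p."""
    lo = max(LIGHT_X[0], 2 * BLOCKER_X[0] - p)
    hi = min(LIGHT_X[1], 2 * BLOCKER_X[1] - p)
    blocked = max(0.0, hi - lo)
    return 1 - blocked / (LIGHT_X[1] - LIGHT_X[0])

for p in (0.0, 0.5, 1.0, 1.5, 2.0):
    print(p, round(visible_fraction_mc(p, 20_000), 3), visible_fraction_exact(p))
```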

Anything simulating reality is a fake. Ray trace forward and most of your processing is a waste of time, but the result is just as fake as the conventional method. Doing it backwards produces the same result, except that only the photons that end up in the image are traced.

This is the whole point, of course. I question how much this adds to the realism of the image; it’s far past the audience’s ability to distinguish, and it’s done for bragging rights. Which is fine, and it’s one of the best ways to spur advances in technology.

I’d disagree. Forward ray tracing can be done to any level of accuracy desired. It’s actually trying to simulate the way the world works. Conventional ray tracing has to pile on various unphysical effects to look good. It’s trying to give the same results as reality, not actually simulate it.

Not at all. The eye is actually very sensitive to this. If you ever hear the words “global illumination”, that’s part of what I’m referring to.

Set an orange next to a white wall. The wall will look faintly orange in the vicinity. It’s not a subtle effect.

Very old-school CGI had an “ambient” term, which was just the low-level of light present on anything not directly illuminated by a source. I.e., the brightness of shadowed areas. But that’s a really crude approximation of actual global illumination, where light bounces around until it finds its way into even small areas. Nothing ever gets truly black, but some things get darker than others depending on how many bounces the light had to go through to get there. A fixed ambient term also can’t take into account any coloring effects from the objects in the scene.
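To show why a fixed ambient term can’t produce color bleeding, here’s a minimal sketch comparing it with a single bounce of indirect light off a neighboring object, like the orange next to the white wall. The albedos, the ambient level, and the `transfer` factor (standing in for how much of the neighbor’s reflected light reaches the wall) are all hypothetical numbers, not measured values.

```python
WHITE_WALL = (0.8, 0.8, 0.8)  # hypothetical RGB albedos
ORANGE = (0.9, 0.5, 0.1)

def ambient_only(albedo, ambient=0.1):
    """Old-school fixed ambient term: the same gray light everywhere."""
    return tuple(a * ambient for a in albedo)

def one_bounce(albedo, neighbor_albedo, incident=1.0, transfer=0.2):
    """Light reflects off the neighbor first, picking up its color, then reaches this surface."""
    return tuple(a * n * incident * transfer for a, n in zip(albedo, neighbor_albedo))

print(ambient_only(WHITE_WALL))        # equal channels: neutral gray
print(one_bounce(WHITE_WALL, ORANGE))  # red > green > blue: an orange tint
```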

There are other ways to fake global illumination, but they’re usually not dynamic. They do the hard work offline and store it as textures. Looks good for fixed scenes, but not dynamic ones.

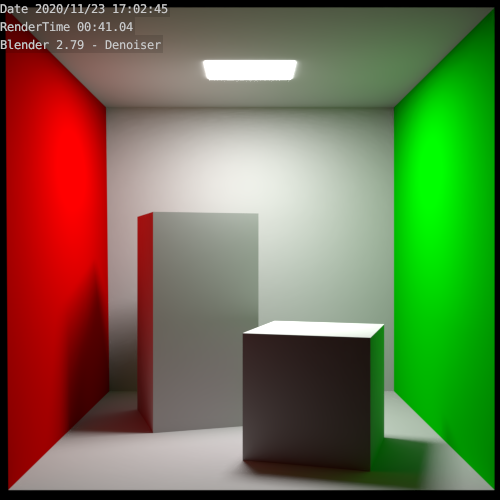

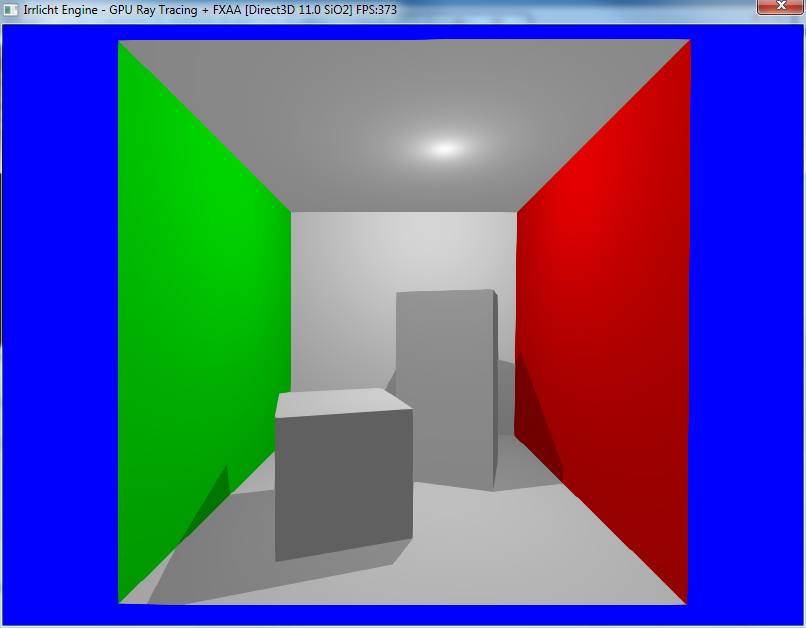

As an example, here’s the so-called “Cornell Box” rendered without global illumination:

Ignoring the mirroring, it’s the same scene. The geometry is trivial. But the second looks much, much better–it could almost be mistaken for a real scene.

The area light gives smooth shadows. The reflected light off the colored walls bounces off the white boxes, coloring them on the sides facing the walls. The corners and edges are darkened because less light can reach them. The same with the area behind the tall box. Even the shadows are slightly colored based on light reflecting off the walls.

Some renderers do allow light sources emitting “photons”. Mental Ray, for instance.

Typical photon tracing works bidirectionally. You shoot photons out from the light source, but you don’t keep bouncing them until they end up in a virtual camera. Instead, they have a chance of “sticking” to a surface, at which point they work much like an ordinary light source. Once you’ve distributed enough photons around the scene, you use a more typical ray tracing technique that gathers up the nearby light emitters when the ray hits a surface.
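A minimal 1D sketch of the two passes, under loudly simplified assumptions: a hypothetical light scatters photons uniformly onto a floor spanning x in [0, 10], every photon sticks where it first lands (no bounces), and the gather step counts stored photons within a fixed radius of the query point. Real photon mappers typically store photons in a spatial structure such as a k-d tree and gather the nearest ones; a linear scan keeps this short. With total power 1 spread over 10 units of floor, the estimate should come out near 0.1.

```python
import random

def shoot_photons(n, seed=0):
    """Pass 1: emit n photons; each sticks at a random spot on the floor [0, 10]."""
    rng = random.Random(seed)
    return [rng.uniform(0.0, 10.0) for _ in range(n)]

def gather(photon_map, x, radius, power_per_photon):
    """Pass 2: estimate light arriving at x from photons stored within `radius`."""
    nearby = sum(1 for p in photon_map if abs(p - x) <= radius)
    return nearby * power_per_photon / (2 * radius)  # power per unit length

n = 100_000
photons = shoot_photons(n)
print(gather(photons, 5.0, 0.5, 1.0 / n))
```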

Here’s an image of the photon map from a simple renderer I wrote back in college (~1999):

Black is a direct impact, red is one bounce, and green is two bounces. The cylinder is refracting each photon as it passes through.

You can see a nice concentration of light on the shadowed side of the cylinder, typically called a “caustic”. That’s where the virtual photons got concentrated as they passed through the cylinder and refracted. This is tough to achieve any other way.
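The bending that piles photons into a caustic is Snell’s law, applied every time a photon crosses the cylinder’s surface. A minimal sketch in vector form, assuming unit-length vectors, a normal that points back toward the incoming photon, and eta = n1 / n2 (the ratio of refractive indices, about 1/1.5 going from air into glass). It’s a generic textbook formula, not code from the renderer described above.

```python
import math

def refract(d, n, eta):
    """Refract unit direction d at a surface with unit normal n (facing the incoming ray).

    eta is n1 / n2. Returns None on total internal reflection."""
    cos_i = -sum(a * b for a, b in zip(d, n))
    sin2_t = eta * eta * (1.0 - cos_i * cos_i)
    if sin2_t > 1.0:
        return None  # total internal reflection
    cos_t = math.sqrt(1.0 - sin2_t)
    return tuple(eta * a + (eta * cos_i - cos_t) * b for a, b in zip(d, n))

# A photon hitting flat glass at 45 degrees from air bends toward the normal
s = math.sin(math.pi / 4)
print(refract((s, -s), (0.0, 1.0), 1.0 / 1.5))
```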

Thanks for your insight Dr.Strangelove, this is fascinating.

Sure thing! I really had to dig through my archives for some of my old images. The photon tracer was the final project for one of my visualization classes in college. I actually kinda overshot the mark on that one. I think most people stuck with a basic ray tracer.