I was playing around with how SD deals with various ethnicities/nationalities/whatever, did a batch of 4-shots with simply (word) + Pino Daeni as the prompt. And of course the way the NC implementation of SD works, when you do a batch one image from every set has the identical seed value. This is what happened with the same-seed images. (There were a few more prompts, but in those there was no similarity in the images.)

Very nice.

I thought I had something cooking (no pun intended) with my cherry cheesecake. I did get in the top 20%, my first score in weeks. My strawberry parfait is pretty good too, but I’m having real trouble getting the images I’m looking for most of the time. I find the results at PlaygroundAI are much more interesting.

See? Very arbitrary. That’s at least as good as mine.

I wonder if it has something to do with timing. I usually get something posted right off the bat. I wonder if back-loaded entries get more action.

Guy filing a lawsuit against Stability AI (Stable Diffusion), DeviantArt and Midjourney over their use of artists’ works in their models (for DA, it’s about allowing it)

Counter-article explaining why the first guy doesn’t know what in the hell he’s talking about (not written by reps for any of the sued parties)

If you only have time to read one, read the second since it highlights the points from the first one anyway plus gives useful information about how the tech operates.

I have several prompt sets that I’ve invented/discovered that I keep revisiting over and over again that produce abstract/stylized art where you can argue that the weird parts are something that you meant to do (so basically nothing that includes what are supposed to be realistic depictions of real-world subjects.) I often think about how if I had a time machine and could travel back to the ancient days of a couple of years ago, I could probably build a following with some of the styles on a site like Deviant Art or Pivix, with people believing that I created everything from scratch. (A reasonable assumption in a world where the AI systems didn’t exist yet.)

I have zero training or natural talent in creating traditional art, but I enjoy seeing the elaborate series of steps that go into creating an original artwork (such as this panel from Usagi Yojimbo or this cover for Harrow County) and sometimes look at AI images and try to imagine the tools and techniques that would be necessary to have created the image by hand. Some of my image styles look mostly like colored pencil drawings and probably represent the most simple challenges for a skilled artist. Others look like they would need watercolors or oils or mixed media (or the digital art equivalent) and would likely require a lot more skill and talent to create.

But the biggest challenge, I think, Would the “sort of” photographic images that look almost but not quite like a real object that has been photographed. I suspect that creating an image like that by hand, getting all of the graduations of color and shading and lighting to look exactly like those images from scratch by hand would be damn hard, something requiring a very high level of artistic skills (and most baffling to a primitive Year 2020 observer).

Here are a few of those quasi-photographic images.

I think it is an uphill battle to get courts to rule that studying an image to learn how to make similar images is violating anyone’s rights. It is functionally no different than if you went to a meat-based artist and asked him to make an oil painting of Optimus Prime in the style of Raphael. The artist would look at paintings by Raphael, look at images of Optimus Prime, and then make his own interpretation. And that’s what AI models are doing.

For at least some of those, I’d assume that you made a real, physical sculpture of the almost-real object, and then took a photograph of it.

Is it? So far as I know, nobody knows just how much of any given source image is still present in the AI’s dataset. There are some people who say “none of them are”, but those same people are also the ones saying that nobody knows what’s in the AI’s dataset. And certainly not all of the training images are still entirely in the dataset, but that doesn’t mean that none of them are. You can argue that all that the AI has is information about the images, but at some point, information about an image is the image.

We can put an upper bound on the average: about a byte or two, which isn’t even a single pixel. That’s what you get if you take the model size and divide by the number of training images.

Some images, or parts of images, may be overrepresented. For instance, there is this lawsuit by Getty Images:

They cite as evidence the fact that the watermark gets semi-accurately reproduced (though not much better than a JPEG with max compression). But that makes sense–the watermark probably appears verbatim in those millions of the training images, so added up it might get a reasonable chunk of space in the weights. Of course, this isn’t stored anywhere in particular–the data is smeared across the entire set of weights, like a hologram–but we can say it’s there.

But that can’t possibly be true for everything. For the vast majority of images, there simply isn’t enough data to go around. Even popular artists aren’t going to have nearly the same representation as the Getty watermark.

Right, like I said, most images aren’t in there in anything resembling their entirety. But for any given image, we can’t tell. Maybe the algorithm is treating one image out of a hundred thousand as being particularly representative of whatever it represents, storing that more or less intact, and throwing out all the rest.

Aside from misleading the judge or jury, I have a hard time believing a lawsuit would succeed if the argument is that one in a hundred thousand images might be so well represented that it can be reproduced verbatim, when said image has not actually been identified. Even the Getty watermark probably wouldn’t rise to that level if it were treated as a creative work. It’s clearly being re-created in some fashion as opposed to being reproduced.

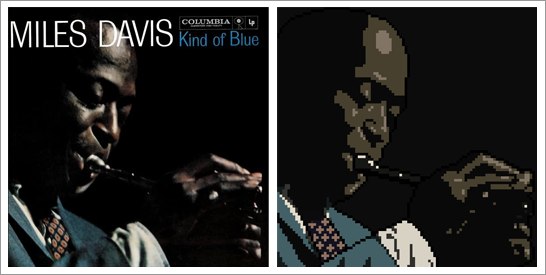

It is an interesting question what counts as re-creation for an AI system. Consider this lawsuit (not related to AI):

The original was a photograph. The re-creation was a painting. The photographer lost. Although the images are visually almost identical (aside from the mirror flip and some color tweaking), they differ in enough small details that it was very clearly a painting of the photo, and not just a pixel-by-pixel reproduction. And so the painting is considered a separate work.

How exactly does the AI re-create imagery? Not in any obvious way like JPEG compression. But it remains to be seen whether it will be viewed more like a human re-creating an image or a sophisticated compression algorithm.

Either way, copyright applies to works, and I posit that any lawsuit would have to identify a specific work that is stored in enough detail for it to be re-created for it to have any merit whatsoever. And that hasn’t happened yet.

Interesting. Don’t forget about the Kind of Bloop guy (who paid an artist to create a “pixel art” version of the famous cover),

who settled out of court because that was the least expensive option available.

I hadn’t heard about that one. It seems fairly obviously fair use, but you can be sued for anything, and settling says nothing about the merits of the case.

The Kind of Bloop author was not someone that could afford a long, drawn-out legal battle. That’s not like to be true of the orgs training these models.

Stable 2.1, long duration:

Starting image:

I like it a lot! Good one to enter if another cyberpunk challenge comes along.

![]()

I put it in today’s no theme challenge just for the hell of it.

For the no theme challenge you have to put it in a snow globe or an hourglass or you don’t win. (Just kidding, kinda)

I’m voting on some vampire challenge entries and I’m glad to see that everybody had as much trouble getting classic vampire fangs as I did.

Hence, this attempt:

That’s on the right track! ![]()