Doc Brown visiting Dr. Frankenstein did pretty well in the Mad Scientist Challenge.

I’ve been looking back over ancient images from a year ago created with Night Cafe Coherent/Disco Diffusion, thinking about how they might be reworked with Stable Diffusion 1.5 inpainting. As we know, the same prompts produce very different results across AI models. But Stable Diffusion doesn’t really need extensive prompts to match styles reasonably well with infilling. Here is my first try, a group of five old Coherent images, infilled into a seamless panorama (done in four segments that I patched together) with the prompt being simply “faces in crowd”.

On a different note, the original image in this set was created back in February in an SD 1.5 batch of a prompt involving an onsen with Mt. Fuji in the background. I expanded and infilled it using only the prompt “woman pond Fuji”. I was impressed to see that SD 1.5 generated the “pond” with reflections of the mountains and trees from the conserved parts of the original image. The exception was one that put the woman deep in the water, with her conserved clothes reflected.

Yikes.

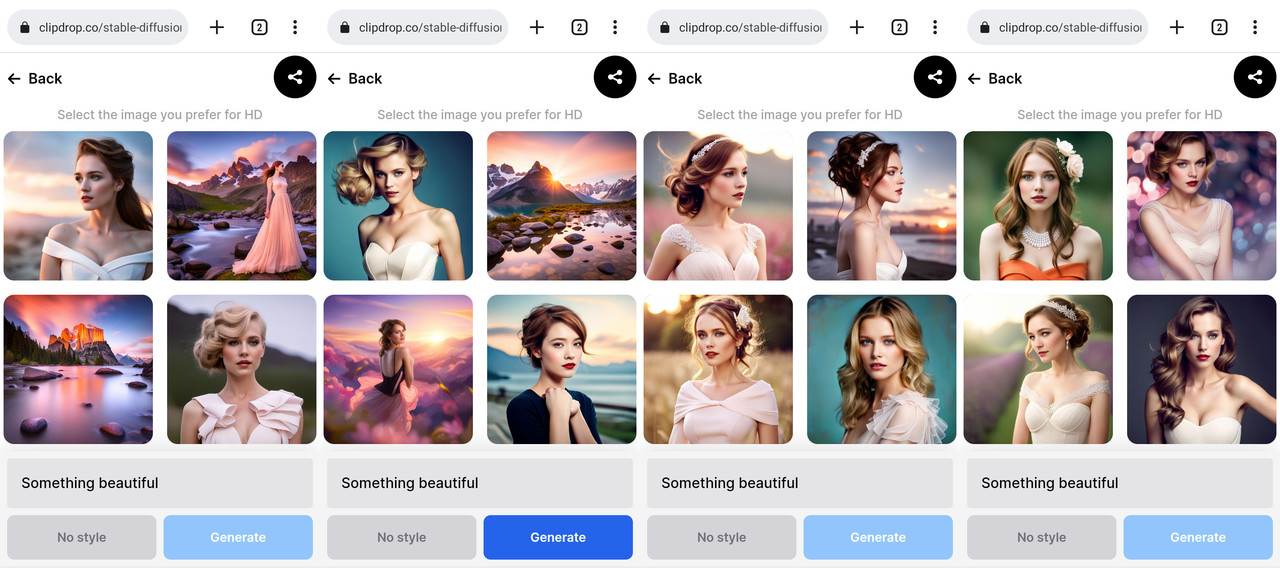

SD on the Deliberate model and Midjourney v5 both gave generic flower and butterfly motifs for “something beautiful”. For “something ugly”, SD (Deliberate) gave me cartoonish ugly light-skinned guys and MJ gave me monstrous faces.

What led me to trying “something beautiful” and “something ugly” in SDXL was me trying the same in Kandinsky 2.1. As I’ve mentioned, Kandinsky can produce interesting images, but once you’ve tried a specific prompt once, you’ve pretty much seen all that prompt is going to generate except for relatively small variations. To Kandinsky, “something beautiful” is specifically a little girl with flowers and butterflies and “something ugly” is a spherical creature with bug eyes and a big mouth. (No point in trying more times, that seems to be what you are going to get.)

I hoped that SD infill would easily understand using the ballbeasts as heads for monsters with equally ugly bodies, but prompting with “monster” creates giant ballbeast movie posters. (And probably the best instance of spelling that I’ve got with SD 1.5, even creating a very appropriate font.)

“Creature man” produces muscle-y human bodies and “creature woman” produces “I’m sorry but the images created were insufficiently G-rated so we aren’t going to show them to you” messages. I like this chicken-legged thing from just “creature”, though.

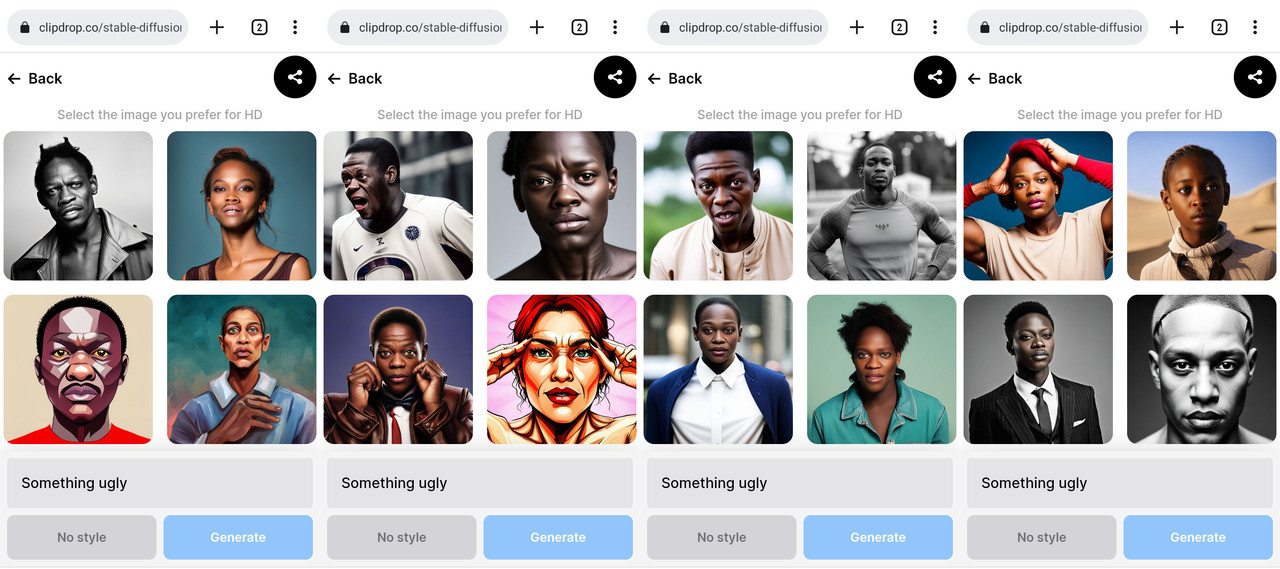

Night Cafe has added three new badges.

Looks like the “5 day streak” will recur every time you create something 5 days in a row?

There’s a juicy one for 30 days in a row too.

Yikes. Producing mostly white women for “Something Beautiful” could maybe just reflect deficiencies in the training data… but I have a hard time seeing how even a significant fraction of the results for “Something Ugly” could be human, at all, without some serious deliberate human distortion. And 16 out of 16 being human, and every one of them black? And not even actually ugly at all? Yeah, that definitely didn’t happen by accident.

One non-racist possibility is that the AI first did ‘something beautiful’, then having already done that, when asked for ‘something ugly’ tried to basically invert the ‘something beautiful’.

It would be interesting to try ‘something ugly’ again in a new session before priming it with ‘something beautiful.’ If you get different results, try ‘something beautiful’ afterwards in the same session, and see if it gives you objects related to the first query.

Image generators don’t have a memory like a chatbot.

And anyone can try it without signing up for anything.

We don’t need no stinkin’ badges!

For comparison, when I Google Image Search “something beautiful”, I don’t get any white women until hit #23. It’s mostly sunsets and/or seashores, with the #1 hit being a young black girl, and also such things as a cartoony elephant hugging a giraffe, a cartoony Hispanic girl with flowers in her hair, and a sparrow in the rain (a lot of pictures seem to be cover illustrations of books with a similar title).

For “Something Ugly”, about half of them can be described as “human”, but mostly weird Photoshops of humans: Distorted faces, an old man’s face morphed onto a baby, etc. Also featured were Gollum, chihuahuas, fantasy creatures like trolls and gremlins, blobfish, and Jabba the Hutt. I didn’t see any black people at all until hit #110.

Now, granted, Google Image Search doesn’t work the same way as AI image generators, but they’re both working from mostly the same dataset of existing images associated with text (Google showing images that are already associated with that text, and the AI generators showing things that they think would fit in with the sorts of images that have that text).

Stable Diffusion 1.5 generates weird creatures and distorted humans for “something ugly”. For “something beautiful”, it seems to be trying for “feel good” quote memes or something–it generates fake text, sometimes with some sort of background like flowers. So this is definitely an SDXL thing.

A minor note about the SDXL uglies: one of them looks to be trying to create an athlete with sponsor logos on his clothes. Among other things, there is a very distinct Nike swoosh.

People have mentioned generating DnD character images in this thread. It occurred to me that the output for “something ugly” in Playground v1 might be suitable for some of them.

I have a liking for long, weird, complex prompts that produce wildly unpredictable results from one image to the next. I generate batches of images, a bunch of which will be junk or bland, but a few will catch my interest enough for me to edit a little or a lot. Tonight’s big obsession was an image from SDXL:

There’s some whole back-story going on in that image, but the girl’s face and of course hand needed a lot of work, plus the object she is holding. So I cut an image of her head and one of her hand and ran them through a few rounds of inpainting upscaled to 1024x1024. (The hand was still pretty bad and I had to work on it…um…by hand with my limited skills to get it at least decent.) I only noticed late that the seam between wall tiles stopped at the creature’s head, and had to decide between creating the bottom of the seam and removing it. I decided having a less detailed wall was preferable to having the seam negatively impact the look of the creature’s teeth and the knife blade. I may try to composite in a different background for at least one of the variations at some point.

(I hope I’m not the only one here that gets obsessive about editing these things.)

Here are other images SDXL generated from the same prompt. (Each AI model produces completely different general styles for the wildly unpredictable images.)

The prompt:

Snail rancor trilobite girl | Dan Witz, Mark Ryden, Margaret Keane, Pino Daeni, Mab Graves, Carne Griffiths | Tim Biskup, pop surrealism, vinyl toy figurine, Polaroid, Kodachrome, Ektar | Professional photography, bokeh, natural lighting

(None of the big AI models have a clear idea what a “rancor” looks like, but they produce interesting guesses.)

Huh, my first interpretation of the object in her hand was that it was something with a straw, and that she was feeding the creature. That didn’t register as a “knife” at all, to me.

I can see that. I guess it just didn’t strike me as the type of thing somebody would be serving a juice box. I guess I’m prejudiced against whatever the hell that creature is. Maybe it is a famed diplomat who ended two interstellar wars. Maybe the girl is a hardened sociopath looking to poison him with her juice box of evil.

I love the twisting smoke effect in this image. It looks so realistic, like you might get from a cigarette or a candle you’ve just blown out. Don’t know why it is coming out of what is supposed to be a snail, but you take what you can get…

“Look at that escargot”…