Apparently an older, wood-burning model.

I’ve noticed that “poking things with a stick” is an unprompted recurring motif in SDXL. (All images created at 512x512 at Clipdrop and outpainted with SD 1.5 because I don’t really like the square aspect ratio.)

Do not poke the Cthulhu.

A couple of days ago I did some rapid-fire generating at Playground, creating a few hundred images with various versions of prompts. Some of the things I made in Playground v1 (especially when using some of the filters) look like Midjourney quality.

(Unfortunately, when I try again with prompts as close to identical as I can recall, I can't reproduce the same kinds of outputs.)

BTW, Stable Diffusion and Playground are not adroit at creating flying monkeys wearing fezzes, and when you add gingerbread to the equation, the monkey goes right out the window.

As we live a life of ease,

Every one of us has all we need,

Sky of blue and sea of green,

In our yellow submarine

Midjourney v5

Not very hydrodynamic.

Yes, but apparently it can fly as well, so there’s that…

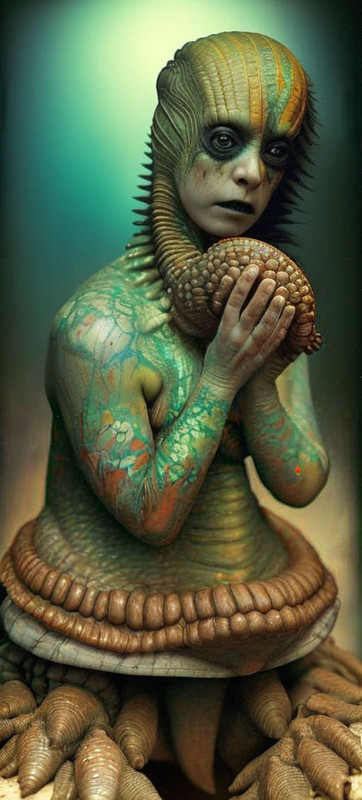

I love this SDXL image (created at 512x512 at Clipdrop, outpainted in SD 1.5). The woman has this weary thousand-yard stare of resignation on her face. "Well, this is really my life now."

My oddity for tonight: here is a "snail rancor trilobite tardigrade gremlin ammonite girl" that turned out wearing a moth-head hat, much like Louise Belcher's bunny hat on Bob's Burgers. I played around with different ideas for expanding it (including NSFW ones for a project). Like many of my creations, I never started out expecting (or even imagining) I would end up with a snake doing insect cosplay.

(Here is the project mentioned above, which collects a number of images produced over several months into a theme):

https://www.reddit.com/r/aiArt/comments/13g9b0s/miss_universe_contestants/

While that link isn’t very lewd, I will warn that there’s some serious nightmare fuel there…

All my other AI posts on Reddit have gotten, at best, upvotes in the low double digits. That one is at nearly 150 in less than 12 hours. So the secret to success is lizard boobies.

I think it’s that sexy, sexy tail wrapped around her abdomen to protect the tadpoles.

Ugh. Anyway, I can’t see how any of those are NSFW. There aren’t even any naughty bits showing, whatever they might be.

So, if the creators of these things are so determined to not let them produce NSFW images, wouldn’t it have been easier to filter out all of the nudes from the training data, before the AI was trained on it? It won’t draw any boobies (lizard or otherwise) if it doesn’t know what they look like.

The Reddit ones? You know it is a set of 20 images, right? Lots of nippleage in there. (I could have posted more images, but the Reddit limit is 20.)

It is frustrating how bad the filters are. Many images that came from prompts with no reason to produce nudity get blocked, yet many images with anything from subtle to explicit nudity (intended or not) slip through. On top of that, the system often won't let you edit images that you have just created on that site.

And Stable Diffusion 1.5 really has a good understanding of female nudity. Like, you can’t look at this image from a few days ago without thinking “yep, that’s a naked woman alright”. (The censorship filter didn’t think so, though.)

I think some of the filters try to block violent images, too, so that may account for some false positives. Although they don't seem to have problems with people or people-adjacent things that are covered in streaks of blood. (Those streaks come from the AI's interpretation of "Carne Griffiths"; they are quite different from how Griffiths uses colored squiggles in his art, but they fit the images coherently. Another blow, IMHO, against people who say that AIs "can't be creative".)

Most of these are trained on the various LAION datasets, which are not curated. Either you find your own 400 million to 5 billion CLIP-linked images to train on and make sure there are no boobs in there, or you use one of the ready-made sets and try to filter out the boobs via prompt moderation and/or by identifying them in the rendered images.

I think some actually are trying harder to filter out inappropriate material during model building, but that takes a lot of time and money that could be spent improving the image-rendering side of the system.

They’re not curated, but they are tagged, or they wouldn’t be useful in the first place. So you use the tags to look for keywords like “nude”, “naked”, and “porn”, and remove those images from the dataset. Then you use your nudity-image-detector (the same one they’re currently using on the tail end of the process) to go through what’s left, check a few of those manually, and see what other keywords you need to exclude. You’re bound to still miss a few, but the dirty images will be much less common in the dataset, and hence much more unlikely to come up in the outputs. Counting the time spent on spot-checking to see what you’ve missed, it wouldn’t take more than an hour or two of human time, and a tiny fraction of the computer time needed for training (since you’re mostly just doing ordinary database operations, not neural-net stuff).
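A minimal Python sketch of the two-pass filter described above, for illustration only. It assumes the caption text attached to each image stands in for the "tags", and that `detector` is some NSFW classifier returning a score between 0 and 1. `Record`, `BLOCKLIST`, the 0.5 threshold, and the sample data are all hypothetical placeholders, not any real dataset's or model's API.

```python
import random
import re
from dataclasses import dataclass
from typing import Callable


# Hypothetical record: LAION-style data pairs an image URL with caption text.
@dataclass
class Record:
    url: str
    caption: str


# Assumed starting blocklist; the idea is to grow it after spot checks.
BLOCKLIST = {"nude", "naked", "porn"}


def keyword_hit(caption: str, blocklist: set[str]) -> bool:
    # Whole-word, case-insensitive match, so "snakeskin" is not caught by "snake"-style substrings.
    words = set(re.findall(r"[a-z]+", caption.lower()))
    return not words.isdisjoint(blocklist)


def filter_dataset(
    records: list[Record],
    blocklist: set[str],
    detector: Callable[[Record], float],  # hypothetical NSFW scorer
    threshold: float = 0.5,
) -> tuple[list[Record], list[Record]]:
    kept: list[Record] = []
    flagged: list[Record] = []
    for r in records:
        if keyword_hit(r.caption, blocklist):
            continue  # Pass 1: cheap text filter, drop outright.
        if detector(r) >= threshold:
            flagged.append(r)  # Pass 2: detector catches what keywords missed.
        else:
            kept.append(r)
    return kept, flagged


def spot_check_sample(records: list[Record], k: int, seed: int = 0) -> list[Record]:
    # Random sample for a human to eyeball, looking for new keywords to block.
    rng = random.Random(seed)
    return rng.sample(records, min(k, len(records)))


# Toy usage with made-up captions and a fake detector.
records = [
    Record("a.jpg", "A naked mole rat at the zoo"),
    Record("b.jpg", "Snakeskin boots on a shelf"),
    Record("c.jpg", "Portrait of a lizard woman"),
]
fake_scores = {"a.jpg": 0.1, "b.jpg": 0.05, "c.jpg": 0.9}
kept, flagged = filter_dataset(records, BLOCKLIST, lambda r: fake_scores[r.url])
print([r.url for r in kept], [r.url for r in flagged])
```

In the toy run, the keyword pass also throws out the harmless naked mole rat. That kind of false positive is exactly what the manual spot checks are for, and only the text pass and sampling are cheap database-style work; the detector pass still has to look at every remaining image.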