Looks like “Neuroscience News” is using Midjourney images.

SDXL 0.9 leaked last night and can be found on the internet. SD devs on Reddit took it with resigned good humor and mostly said that SDXL 1.0 is still due in mid-July, so they hope people don’t put too much effort into making custom models or applications with 0.9 and wind up splitting the community.

But they also suggested that they were going to try to make 0.9 compatible with the Auto1111 UI, so it’s not as though they’re saying not to use it. I haven’t tried it yet and very well might not, since the full release should be around the corner and will hopefully be better optimized for consumer use.

It almost looks like Benny is watching them from the background, there…

Well, Benny was there in spirit. He’s an empathetic cat.

I call this: A Straight Dope Fantasy.

Tip: You can put the free Insight Face Swap-Bot into your Midjourney private server and use it to swap faces with rendered people. Works with BlueWillow, too. Good for keeping consistent characters…and for punking family members.

Some of you may be interested in this 25-min highlight video from the latest Nvidia (graphics tech company) keynote at Computex in Taiwan. It shows the direction AI is quickly heading…and it’s pretty amazing.

OK, we’ve got squid, goats, pie, Ed Zotti, Einstein, and the banner… All of that I get. But why is one of the goats riding a bicycle?

Well, in the interest of fighting ignorance: goats and bicycles may seem like an unlikely pair, but they have some surprising associations in different cultures and contexts. For example, in some parts of rural India, goats are used as a form of transportation, either by riding them or attaching them to carts. Bicycles are also a common mode of transport in these areas, and sometimes people use them to carry goats to markets or other places. Another association between goats and bicycles is the Goat Man, a mysterious figure who traveled across the US in the 1930s and 1940s with a wagon full of goats pulled by a bicycle. He claimed to be a preacher and a healer, and attracted curiosity and controversy wherever he went. A third association between goats and bicycles is the sport of cyclocross, which involves racing on off-road courses with obstacles and mud. The name cyclocross comes from the French word for goat, chèvre, because the courses were originally designed to mimic the terrain that goats could traverse.

…at least, that’s what ChatGPT tells me.

Not quite:

Blame ChatGPT. It’s not always accurate.

So this is why Clipdrop is overwhelmed today.

I haven’t been to Night Café in a while and just noticed they’ve added more presets. “Artistic Portrait”, SDXL 0.9, long runtime:

There really were nine of her!

I’m having more fun with SDXL as I get a better feeling of what works and what doesn’t. I’ve also been experimenting more with Uncrop.

The free version of Uncrop at Clipdrop is limited to images of up to 1024x1024 pixels. Clipdrop SDXL (and Bing/DALL-E) images can only be created in a 1:1 aspect ratio, and I usually try expanding my favorites into a more comfortable 3:2 or 2:3 ratio. To preserve as much of the original image’s resolution as possible at that aspect ratio when uncropping, I need to frame it to produce an image of 1024x682 or 682x1024 pixels, which you do in Uncrop by dragging the edges of the original image and of the new intended borders. Uncrop then fills in the image without needing or allowing a prompt.
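The framing arithmetic above is simple enough to sketch. A minimal Python illustration, assuming the 1024-pixel limit of the free Uncrop tier and rounding down to whole pixels; the function name `uncrop_frame` is hypothetical and not part of any Clipdrop API:

```python
def uncrop_frame(limit: int = 1024, ratio: tuple[int, int] = (3, 2)) -> tuple[int, int, int]:
    """Return (width, height, square_side): the largest frame with the given
    aspect ratio that fits inside a limit x limit box, plus the side length
    the original square image ends up with inside that frame."""
    w_r, h_r = ratio
    if w_r >= h_r:
        # Landscape: the long edge uses the full limit, the short edge shrinks.
        width = limit
        height = limit * h_r // w_r  # integer division rounds down to whole pixels
    else:
        # Portrait: same idea with the axes swapped.
        height = limit
        width = limit * w_r // h_r
    # The square original must fit the frame's short edge.
    square_side = min(width, height)
    return width, height, square_side


print(uncrop_frame())              # landscape 3:2 frame
print(uncrop_frame(ratio=(2, 3)))  # portrait 2:3 frame
```

Either way, the original square is scaled to 682x682 inside the new frame, so it keeps roughly two-thirds of its native 1024-pixel side.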

My old method of creating 3:2/2:3 images was to expand the canvas of the original image file, upload that to Playground, mask the areas I want to expand, and reuse the original prompt or create a new one. (This is a convoluted use of inpainting.) This results in an image downsized to 768x512 or 512x768, the size limit for the free version of Playground.

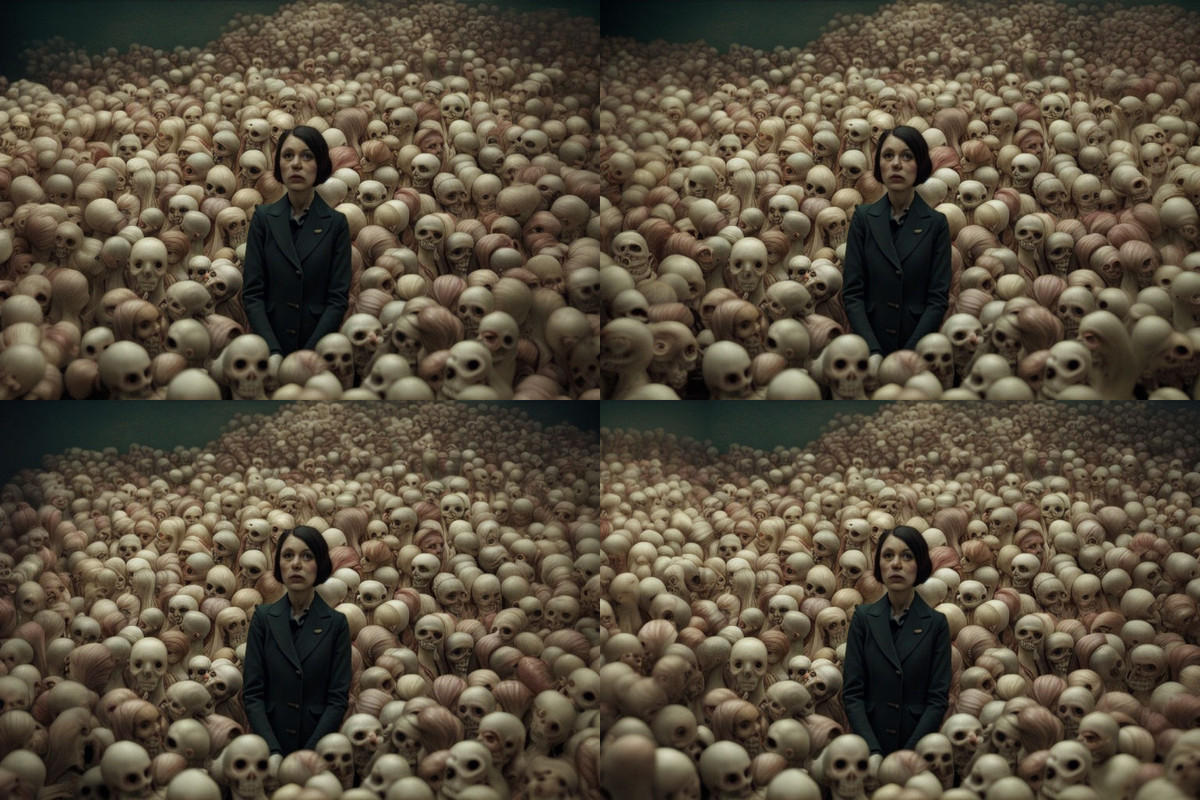

So here is a square image created in SDXL 0.9 on Clipdrop. In the first grid, the image is expanded using the SDXL-based Uncrop, also at Clipdrop. In the second grid, the image is expanded with the above-mentioned SD1.5 inpainting method, using the same prompt that generated the original image. Uncropping does a pretty good job of creating expansions that fit the style and content of the original without a prompt, but I do believe that overall SD1.5 inpainting with a prompt does at least a slightly better job (in this example and others that I’ve tried).

I’ve used most of the AI art apps (including DALL·E, Leonardo, and SDXL) and still use my free monthly allotments of each of them. They are all quite good, each with their own features and bugs. But I mostly use Midjourney and Firefly, because I pay for those and have invested more time learning them.

I usually start with MJ to get the basic image I’m looking for, then use Photoshop/Firefly to touch it up and add what I want. I’ve used Photoshop (PS) since it was released in ’88 and consider myself expert-level with it, so it’s my go-to for enhancement, color correction, etc.

In order to save my monthly MJ time, I often use PS’s generative fill to outpaint and inpaint, instead of using MJ to upscale, do variations, remix, blends, etc. Generative Fill does a fine job of matching the MJ art.

Often, to save MJ time, I use the free AI Image Upscaler, or PS’s native upscaler, to enlarge my MJ images (or PS’s Super Zoom neural filter to zoom in and retain resolution). To swap faces, I use INSwapper within the MJ bot. If I want to import a stock graphic into an MJ image, I embed the graphic as a layer on top of the MJ layer, mask it (PS’s AI selection and masking tools are fantastic), then use PS’s Style Transfer neural filter to make it look as if it belongs.

It’s fun to be at the ground level of AI art. The updates are exciting and frequent. Advanced AI is coming soon to After Effects and I can’t wait for that, too.

I use only free tools right now. If I paid for anything, it would be Playground so that I could do higher resolutions on the infills. (What I do now is resize the 768x512 output to 1536x1024 and paste back in the original full-resolution central portion, which actually works pretty well.)
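The paste-back step is mostly arithmetic about where the original square lands once the infill is enlarged. A sketch, assuming a 1024x1024 original that was centered on the expanded canvas before it went to Playground; `paste_back_offset` is a hypothetical helper, not part of any tool mentioned here:

```python
def paste_back_offset(
    orig_side: int = 1024,
    low_res: tuple[int, int] = (768, 512),
    scale: int = 2,
) -> tuple[tuple[int, int], tuple[int, int]]:
    """Return ((up_w, up_h), (x, y)): the size of the enlarged infill and the
    upper-left corner where the full-resolution original square goes back in.
    Assumes the original was centered on the canvas before inpainting."""
    up_w, up_h = low_res[0] * scale, low_res[1] * scale
    if up_w < orig_side or up_h < orig_side:
        raise ValueError("enlarged canvas is smaller than the original image")
    # Centered placement: split the leftover space evenly on both sides.
    x = (up_w - orig_side) // 2
    y = (up_h - orig_side) // 2
    return (up_w, up_h), (x, y)


print(paste_back_offset())                     # landscape 768x512 infill
print(paste_back_offset(low_res=(512, 768)))   # portrait 512x768 infill
```

The returned offset is the upper-left corner for the full-resolution original; in Pillow, for example, that is what you would pass as the 2-tuple `box` argument to `Image.paste` after resizing the infill.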

I’m a relative newbie with Photoshop; I didn’t try it until they killed my preferred Aldus PhotoStyler in 1994!

Oh, and for super-resolution, I usually use this site, which is back to allowing limited free use again. (A fire actually destroyed their render farm, so the site was unusable for anyone for a while, then available to subscribers only while they struggled to replace the hardware.)

(My fallback super-resolution source is an independent training that isn’t quite as good.)

Since the new awards started, I’ve been trying to visit every day and generate at least one image, but at one point I accidentally forgot a day and broke a streak. Tonight I visited thinking I was just finishing another 5 days, but actually got my first 30-day streak in addition to the latest 5, so 35 credits added. Might burn some on SDXL 0.9 images.

I spend a lot of time on creating images that are never actually going to be used for anything. But for me it is like what playing video games is for lots of other people–nothing practical comes out of playing Grand Theft Duke Nukem Dead Adventure IV, either.

I’ve been getting really great weird results from SDXL 0.9. All of these started out square and were expanded, with some having other editing done.

The girl in the top center looked like a child of a demon species from an episode of Buffy, but I definitely, definitely didn’t spend a half-hour late at night googling, trying to track down which demon it was I remembered, and finding it to be a Lei-Ach demon.

https://buffy.fandom.com/wiki/Lei-Ach_demon

Definitely.

SDXL 1.0 was released today, and it’s already available for use at Playground.

I always know when something is coming at Playground. Their site has been acting a little funky/different for the last day or so, which usually signals some tinkering and additions.