“Interferes”, not “interested”, of course.

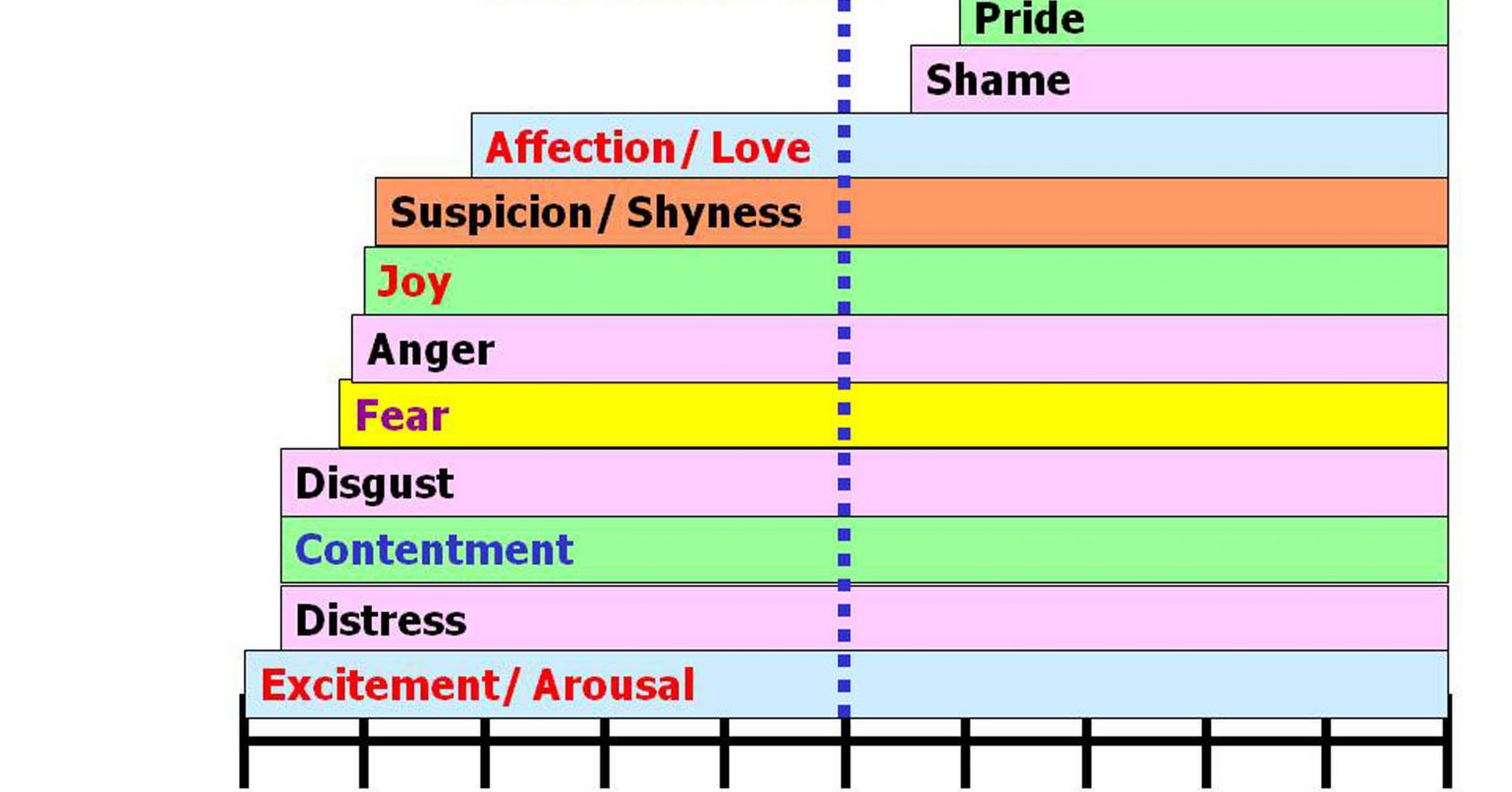

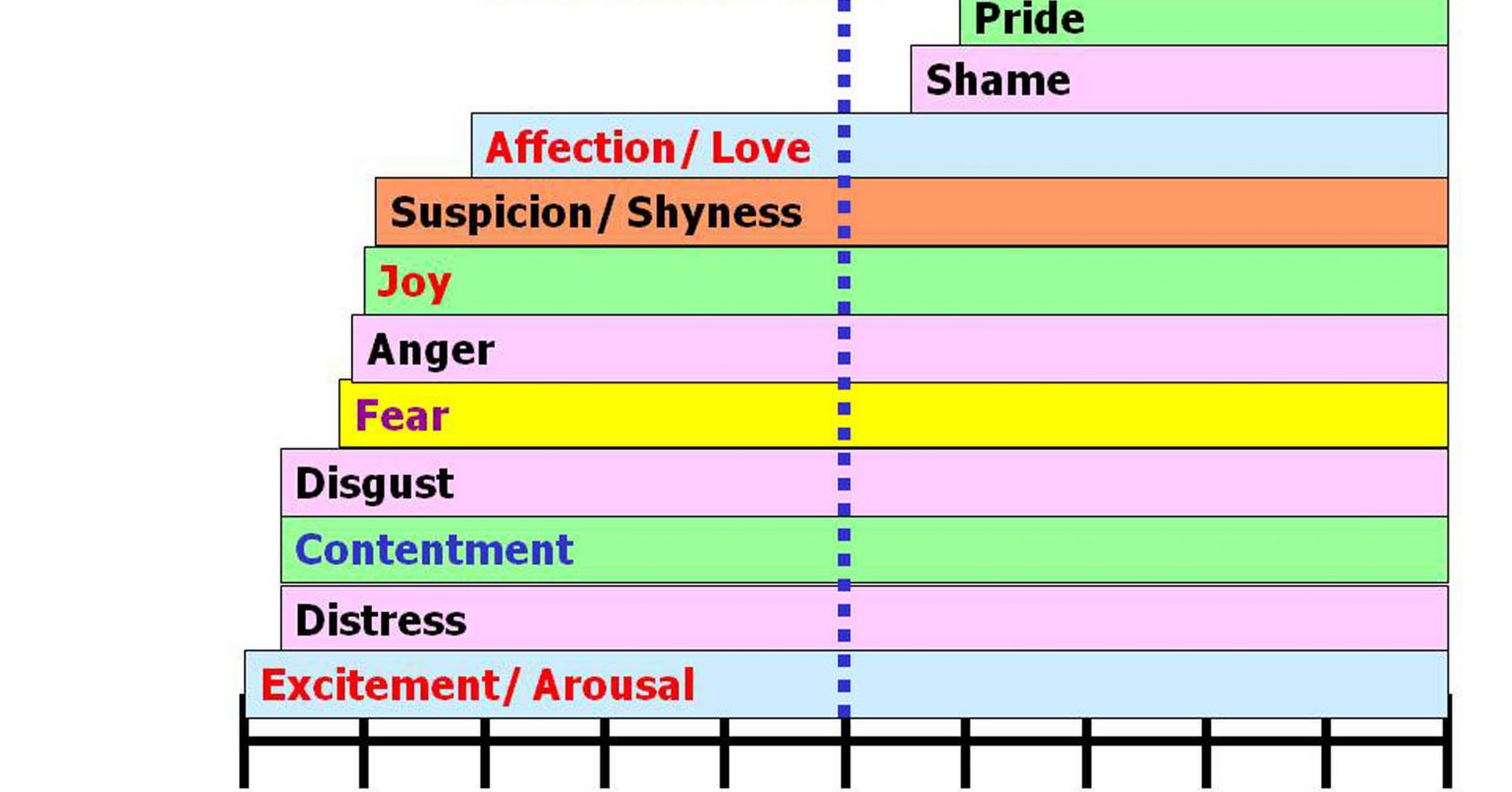

Which Emotions Do Dogs Actually Experience?

Dogs have the same emotions as a 2-year-old child.


If a person talks to AI in the way someone would have written “Dear Diary”, I’m cool with it. It’s a conversational way to get something off your chest. I occasionally write things just to have written them down, so they’re not just rattling around in my skull.

It’s when the answers come back and you consider them real that I’d be worried.

At the risk of creating another tangent, I have to ask: if the answers that come back are useful and informative, perhaps insightful, and maybe even make you feel better about yourself, what exactly is the proper meaning of “real”?

I think it’s the emotional attachment that I’d be concerned about.

An encyclopedia can be a useful source of information. But if I start to fall in love with it because it is the one thing in my life that I can always count on to be there for me, that might indicate a problem.

There’s also the issue of putting too much faith in the answers. Trusting AI is not something you necessarily want to do. It’s not a person, and it can get very simple things very wrong.

Here is an example:

That’s a good answer, and I agree. We humans and dogs are programmed to form emotional bonds, and it’s all too easy to let that spill over into interactions with AI.

So can a person. That guy irritates me because he’s not proving anything useful. The cognition of AI is very different from human cognition: it can do a lot of things that many humans cannot, and do them very, very quickly, including solving intelligence-testing puzzles. But because its methods of reasoning are completely different, it can also make mistakes that strike us as trivial and stupid.

Perhaps of interest in relation to AI lovers:

Which is why you go to something like an encyclopedia, or a forum populated with experts, and not a random person you don’t know, or an acquaintance that you know has zero expertise on the matter you’re inquiring about. AI is actually “dumber” than a random person or a person with zero expertise, because it’s not even a person. It can’t even understand things that almost any human being would understand.

Isn’t he?

Which is very useful. And it’s why you should not be going to AI for advice the way you would go to a human or some other information generated by a human.

Basically, you answered your own earlier question.

In this case, there is a difference between a real person and AI, and you can’t treat AI the way you would a person. That doesn’t mean an AI is inherently inferior, but it does mean you have to be mindful in how you use it.

Why is it okay to form an attachment to a dog, who we anthropomorphize as having human feelings, but not okay to form an attachment to an AI, which we also anthropomorphize as having human feelings?

Now who’s being fucking rude and mean?

Also, I said “disturbed mind” and “filling an emotional void”, not “mental illness”. Not “crazy”, but they might be suffering from some sort of depression, compulsive or addictive personality disorder, or neurodivergence, or they might simply be lonely.

It’s not that collecting stuff (or having relationships with AI) is “harmful” IMHO. It’s that it might be used as a crutch or safety blanket that covers up or lets you get away with not having to deal with some actual underlying issue.

Case in point: if someone is suffering from extreme loneliness, I don’t know that giving them more ways to disconnect from other humans is the best solution.

Also, I said “disturbed mind” … not “mental illness”.

What do you see as the difference between those two terms?

Because humans do not have a monopoly on “feelings”. Your post assumes that dogs can’t have feelings. They do.

Dogs have the same emotions as a 2-year-old child.

AI, on the other hand, does not.

Basically, your question is based on a false premise to begin with.

And it’s why you should not be going to AI for advice the way you would go to a human or some other information generated by a human.

But I do just that. And while I don’t automatically assume that its answers will always be correct, most of the time they are, at least in the sense that its suggestions work, or on more profound matters like cosmology or quantum physics, they’re broadly backed up by other sources. By which I mean, though I’m not knowledgeable enough to necessarily pick up any mistakes in the explanatory details, the gist of the answer has generally been correct and very informative.

It’s not that collecting stuff (or having relationships with AI) is “harmful” IMHO. It’s that it might be used as a crutch or safety blanket that covers up or lets you get away with not having to deal with some actual underlying issue.

You didn’t say “might be” in that thread. You literally said that you see anyone who collects things as exhibiting symptoms of a disturbed mind.

IMHO, collecting things is a symptom of a disturbed mind.

Which is, to my eye, a rude insult to anyone who collects things, including myself. If you want to paint a whole lot of people with that judgmental brush with your post, then own that you are being fucking rude to them.

But I do just that. And while I don’t automatically assume that its answers will always be correct, most of the time they are, at least in the sense that its suggestions work, or on more profound matters like cosmology or quantum physics, they’re broadly backed up by other sources.

I think you’re setting yourself up for some problems with this philosophy, but hey, you have the freedom to make mistakes.

By which I mean, though I’m not knowledgeable enough to necessarily pick up any mistakes in the explanatory details, the gist of the answer has generally been correct and very informative.

I see AI give vastly incorrect answers frequently. To the extent that when I look up something online, and I get the AI response at the top (fucking Google), I get frustrated because I often have to skip past that bullshit and go through the actual search results to get a real answer.

Why is it okay to form an attachment to a dog, who we anthropomorphize as having human feelings, but not okay to form an attachment to an AI, which we also anthropomorphize as having human feelings?

Because dogs are intelligent, sentient beings that form genuine emotional bonds with us just as we do with them. It’s not “anthropomorphizing” to recognize them for what they are.

As to feelings and AIs and dogs …

What about this: aibo, the robotic dog that Sony started on years ago. It’s gotten pretty darn advanced these days.

Dogs may have real emotions, but they’re dog-emotions. The human is doing a bunch of translating when the human is perceiving that the human and the dog are exchanging emotional content.

Assuming the AI robot dog is decently skilled at appearing like a real dog, how is that situation different? The human is still translating (robotic) dog-emotions into human emotion terms as they interact.

My bottom line:

One can take the position that being made of meat is a prerequisite for emotions. Or for thinking. But it’s getting harder and harder to defend that position.

I see AI give vastly incorrect answers frequently. To the extent that when I look up something online, and I get the AI response at the top (fucking Google), I get frustrated because I often have to skip past that bullshit and go through the actual search results to get a real answer.

I hate Google’s AI, and yes, it’s often wrong. But not all AI is created equal. GPT-5.x is vastly superior, IMO.

Dogs may have real emotions, but they’re dog-emotions. The human is doing a bunch of translating when the human is perceiving that the human and the dog are exchanging emotional content.

I don’t get the “dog-emotions” concept. You’re acting like a dog being sad or happy is different than a human being sad or happy. Why?

I mean, babies certainly don’t have the same kind of thoughts as an adult, but that doesn’t make them objects, and it doesn’t mean you’re anthropomorphizing when treating a human baby like a human, because it literally is a human. A dog’s emotions are probably closer to an adult human than a human baby’s emotions would be.

I’m not arguing that it’s okay to treat a dog like a human child, because it’s not a human child. But there is nothing irrational about having an emotional connection with a being that has actual emotions, and can genuinely return your affections.

Why is it okay to form an attachment to a dog, who we anthropomorphize as having human feelings, but not okay to form an attachment to an AI, which we also anthropomorphize as having human feelings?

I think I mentioned this in another thread, but the “AI buddy” has been a staple of sci-fi forever: the Lost in Space kid and his robot, Luke and R2, Michael Knight and KITT, John Connor and his Terminator, Fry and Bender, Sam Witwicky and Bumblebee, Cassian Andor and K-2SO, Chappie, The Iron Giant, Johnny 5, and so on and so forth.

I’ve said it before, but science fiction seems to predict that the height of humanoid robotics technology is weaponized sex dolls and military killing machines repurposed as sarcastic wingmen.

I should point out that we also characterize lonely women as “cat ladies”, and, while it’s not as stigmatizing, loner men are often portrayed with their dog (and/or horse) as their only companion.

I think the answer to your question is similar to the “uncanny valley” effect. Up to a certain point, you are engaging with a toy. Above a certain level (as often portrayed in fiction), you are possibly dealing with a sentient being with its own agency and feelings and whatnot. In between (the uncanny valley), it’s just programming designed to trick you into thinking it’s human. It doesn’t really care what you say or do, or whether you just pull the plug on it.

That’s my theory at least.

An encyclopedia can be a useful source of information. But if I start to fall in love with it because it is the one thing in my life that I can always count on to be there for me, that might indicate a problem.

Some embarrassing paper cuts, for example.