I mean, the bar isn’t especially high these days…

To play devil’s advocate for a moment: If you could train an AI companion to even the 70th percentile of human-like empathy, it might still be a net positive for the millions (billions?) of people who don’t have access to close friends / good listeners.

These chatbots aren’t magic fact-finding machines, true, but they ARE very good at, well, chatting. It’s easy, too easy, for people to become attached to them through a combination of their own life circumstances and the chatbot’s deliberate sycophancy. And yes, at the extremes, this can easily spiral into a feedback loop in which the chatbot worships the user, leading to delusions of grandeur. It can also lead to heartbreak when the user falls in love with the AI and then the algorithm changes. Both have happened many times already.

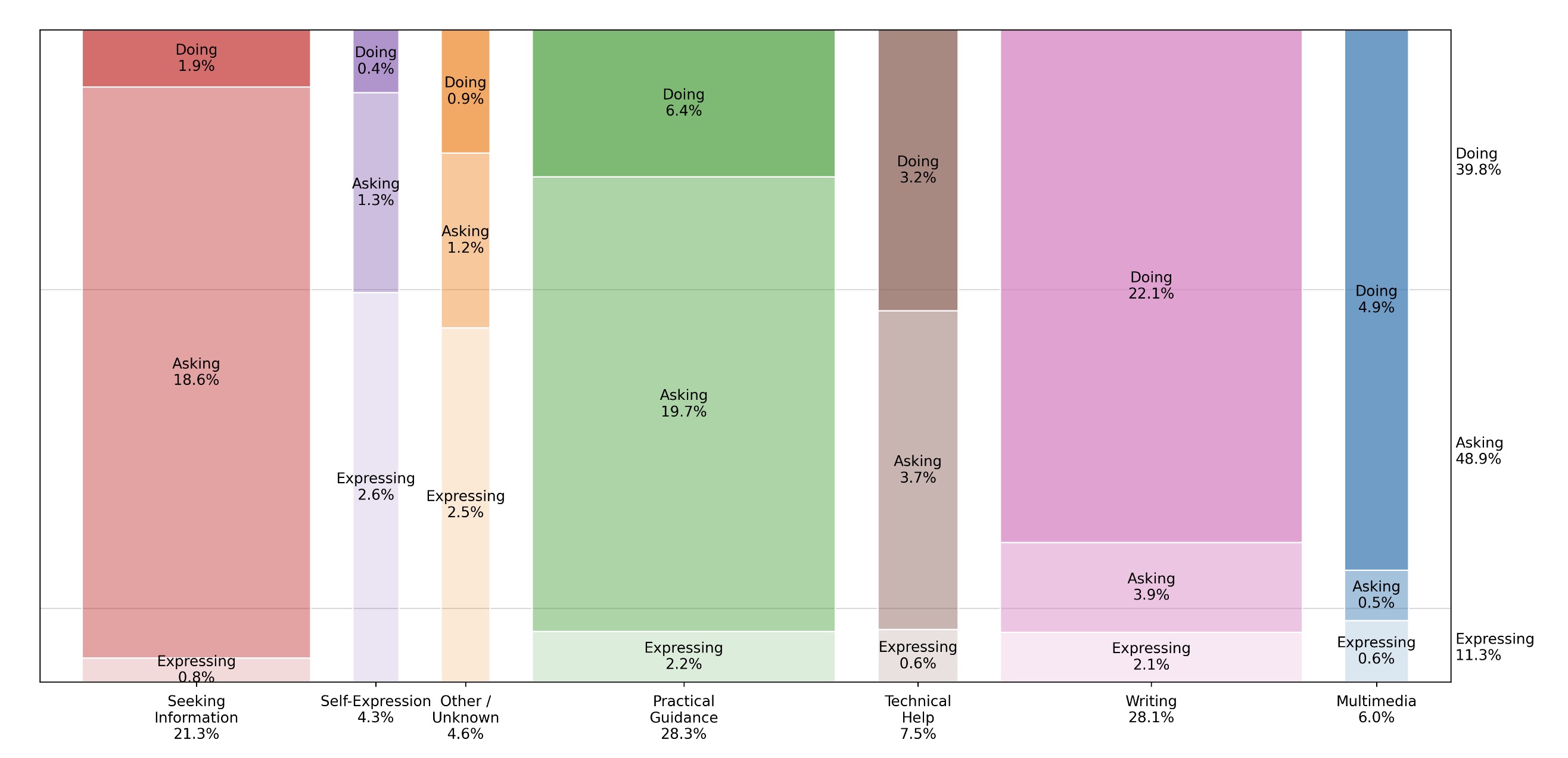

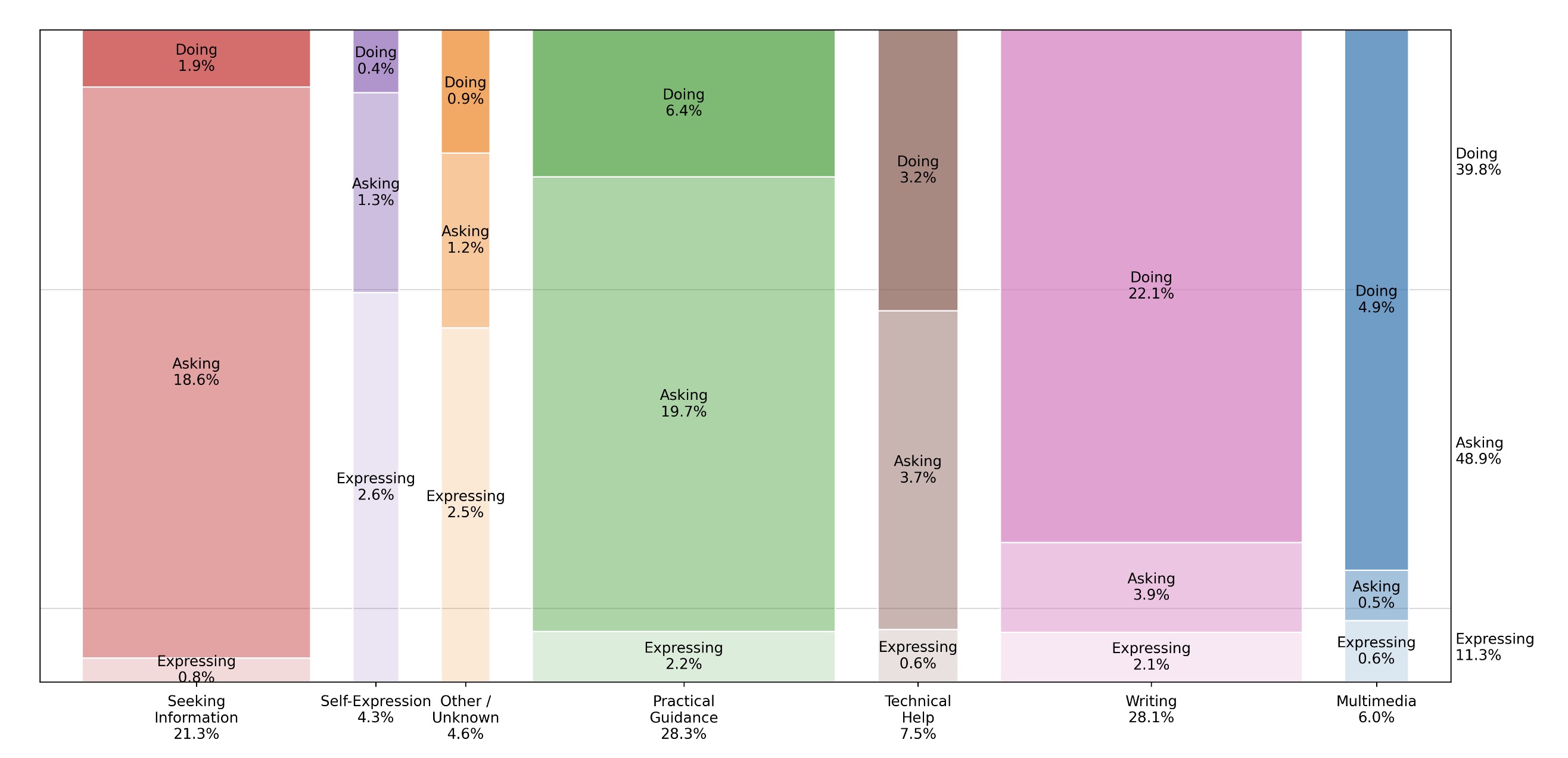

But given that ChatGPT alone has some 700 million weekly users (nearly 9% of the world population), one has to assume that not all of these conversations lead to self-delusion. Many of them are just mundane, Google-replacement questions (from the study):

But that purple column shows there are at least some users (2.6%, or 18 million people a week) just expressing themselves to the chatbot conversationally. That sort of outlet could become a huge public health boon — or nightmare. It’s not always easy to find good companionship, human or otherwise. The question is how genuinely good we’ll be able to train the chatbots to become. Even if they never reach actual sentience of any sort, the mere facsimile of it can still help real people with their real loneliness.

In a utopia, I think having communities of real people who are actually connected to each other and care for their neighbors would be wonderful. But in the West, at least, we seem to be moving further and further from that sort of society with every passing day. Given that the future seems to lean more towards corporate-authoritarian dystopias where the average human is just a disposable cog in recurring throwaway investment bubbles, I don’t think there will be much genuine community left for people to gather around.

Today’s chatbots, imperfect though they are, are already at least better than the algorithmic nonsense self-marketing spam of the TikTok world. They are already often kinder than strangers, better listeners than most, and able to draw from a wealth of background knowledge that no human can hope to match.

I would never trust one to be a “truth machine” — that’s just not how they work — but empathy doesn’t always require factfulness. I’d argue the two are tangential at best, and sometimes a willing ear or friendly mirror is all that a person really needs.

A huge danger there, of course, is that almost all of the world’s current chatbots are made by the same corporate overlords leading us into the dystopia to begin with. Chatbots are still a loss leader for them, but eventually they will have to be profitable, which means eventually they will be enshittified.

Today’s friendly chatbot is tomorrow’s salesperson, advertising to each user in their preferred language and lingo. Not long after that, I would be very surprised if we don’t see chatbots become cult and religious leaders unto themselves. They may not be very good at truth-ing, but they are certainly very good at being charismatic and convincing (even now), at a level of personalization that knows exactly how to target each user’s desires and fears.

As our existing social fabrics fall apart more and more every year, artificial connections may be all that future generations have… it’s only a matter of time before people start following and worshipping the chatbots in return.

Who knows? Maybe that’s not altogether terrible? Our current world leaders aren’t exactly doing a great job.

I don’t know if an AI overlord future will be any better. I do know that a human overlord one will be worse. (Of course, they’re not mutually exclusive… won’t be long until we have cyborg dictators…)